Load balancing is a core feature of cloud infrastructure services. Because VMs can be launched rapidly, it is important to ensure that traffic is spread evenly across all of them. Amazon’s Elastic Load Balancing (ELB) is popular for its ability to route traffic across a set of instances. Azure IaaS also offers a load balancer for the same purpose. Google Compute Engine’s load balancer is similar to these services, with a few additional capabilities.

The GCE load balancer can be used in three different scenarios. The first is classic network load balancing. The second is HTTP-based cross-region load balancing. The third is content-based load balancing, which routes each request to a backend that is optimized for a specific type of content. Let’s take a closer look at each of these scenarios.

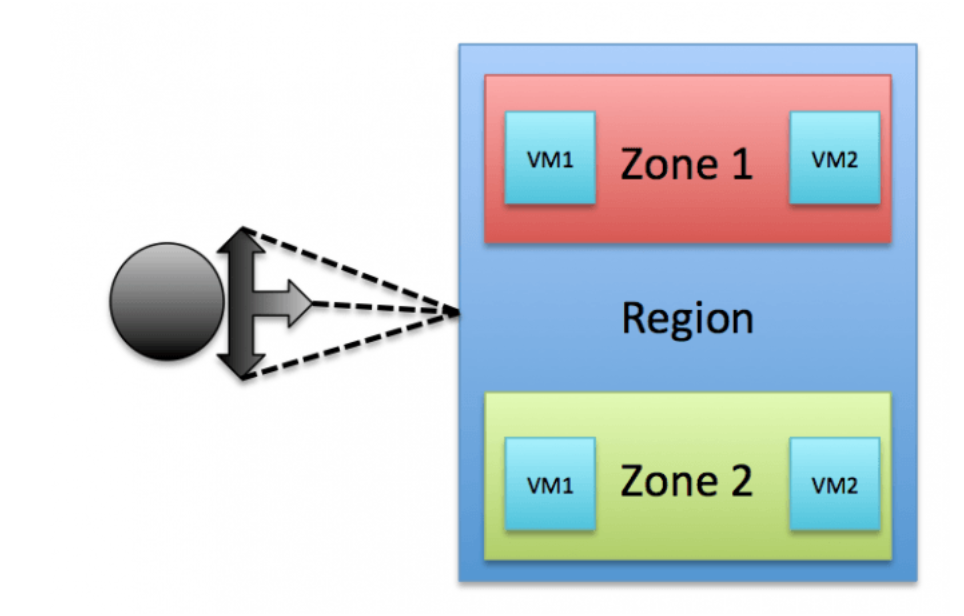

Network load balancing is the most commonly used scheme: a specific port on the load balancer is mapped to a pool of VMs. For example, to load balance a web service, port 80 on the load balancer is mapped to web servers running in multiple zones of the same region. The pool of VMs participating in the load balancing can be associated with a health check. When a web server fails the health check, the load balancer stops routing traffic to it, so only healthy web servers receive requests. To reduce exposure, each web server can listen on a port other than 80, which can still be mapped to the load balancer’s port 80.
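The behaviour described above can be illustrated with a small, self-contained Python model. It is only a sketch: the class and field names (`Backend`, `PortForwardingBalancer`, `run_health_check`) are hypothetical and are not part of any GCE API, and round-robin selection is an assumption made for simplicity rather than a description of how GCE actually distributes connections.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Backend:
    """A hypothetical web server VM in the load-balanced pool."""
    name: str
    zone: str
    port: int             # port the web server actually listens on
    healthy: bool = True


class PortForwardingBalancer:
    """Toy model: one front-end port mapped to a pool of backends."""

    def __init__(self, frontend_port, pool):
        self.frontend_port = frontend_port
        self.pool = list(pool)
        self._counter = count()

    def run_health_check(self, probe):
        # `probe` is any callable returning True when a backend is healthy.
        for backend in self.pool:
            backend.healthy = bool(probe(backend))

    def route(self, port):
        # Only the mapped front-end port is forwarded.
        if port != self.frontend_port:
            raise ValueError(f"port {port} is not mapped")
        healthy = [b for b in self.pool if b.healthy]
        if not healthy:
            raise RuntimeError("no healthy backends in the pool")
        # Assumption: simple round-robin over the healthy backends.
        return healthy[next(self._counter) % len(healthy)]


if __name__ == "__main__":
    pool = [
        Backend("web-1", "zone-a", 8081),
        Backend("web-2", "zone-b", 8081),
        Backend("web-3", "zone-a", 8081),
    ]
    lb = PortForwardingBalancer(frontend_port=80, pool=pool)
    # Pretend that web-2 fails its health check.
    lb.run_health_check(lambda b: b.name != "web-2")
    for _ in range(4):
        target = lb.route(80)
        print(f"port 80 -> {target.name}:{target.port}")
```

In this model the clients only ever see port 80, while the backends listen on 8081, and the failed server is skipped until a later health check marks it healthy again.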

Cross-region Load Balancing in Google Compute Engine

With this scheme, it is possible to set up load balancing based on geo-routing. By deploying VMs running identical services across multiple regions, users can be directed to the nearest region to reduce network latency. For example, a user visiting from Bangalore (India) will be served from the Asia region, while a visitor from Seattle (USA) will be routed to the US-West region. This is different from the DNS-based routing employed by services like Amazon Route 53. Google uses a combination of a proxy and backend services to determine the destination of each request.

This avoids the stale responses that are typically seen in DNS-based geo-routing, where cached DNS records can keep pointing clients at an outdated destination. We will discuss the concepts of proxies and backends in upcoming articles. This scenario is also useful for disaster recovery and for increased fault tolerance.
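As a rough illustration of the routing decision, the following Python sketch picks the lowest-latency region that is still healthy, and falls back to the next-best region when the nearest one is unavailable. The function name `pick_region`, the region labels, and the latency figures are all made up for this example; it does not reflect how Google’s proxy actually makes the decision.

```python
# Hypothetical round-trip latency estimates in milliseconds,
# keyed by client location and then by serving region.
LATENCY_MS = {
    "Bangalore": {"asia": 40, "us-west": 230},
    "Seattle": {"asia": 180, "us-west": 15},
}


def pick_region(client, latency_ms, healthy_regions):
    """Return the healthy region with the lowest latency for this client."""
    candidates = [r for r in latency_ms[client] if r in healthy_regions]
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=lambda r: latency_ms[client][r])


if __name__ == "__main__":
    all_regions = {"asia", "us-west"}
    print(pick_region("Bangalore", LATENCY_MS, all_regions))
    print(pick_region("Seattle", LATENCY_MS, all_regions))
    # If the Asia region is down, Bangalore users fail over to US-West.
    print(pick_region("Bangalore", LATENCY_MS, {"us-west"}))
```

Because the decision is made per request rather than per DNS lookup, a region that becomes unhealthy stops receiving traffic as soon as it is removed from the healthy set, which is the property that makes this scheme attractive for fault tolerance.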

Content-based Load Balancing in Google Compute Engine

Some workloads deal with a lot of static content that needs to be separated from the dynamic web server to avoid contention. It is common to see an Apache server placed in front of WebSphere or JBoss servers just to deal with the static content. To balance the load further, administrators may want to set up different servers based on the content type. For example, three separate web servers hosting images, videos and PDFs offer better scalability and availability than a single server. GCE offers a mechanism to route requests based on the content type, called content-based load balancing. In this scenario, the web servers hosting different content types are registered with separate backend services, and each request is routed to the appropriate backend service.
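The idea can be sketched in a few lines of Python. The example below assumes that the content type is encoded in the URL path prefix, and the rule table, backend service names, and the function `select_backend_service` are hypothetical; it is a minimal model of the routing decision, not GCE configuration.

```python
# Hypothetical routing rules: URL path prefix -> backend service name.
ROUTES = [
    ("/images/", "image-backend"),
    ("/videos/", "video-backend"),
    ("/pdfs/", "pdf-backend"),
]

# Requests that match no rule go to the dynamic web servers.
DEFAULT_SERVICE = "web-backend"


def select_backend_service(path, rules=ROUTES, default=DEFAULT_SERVICE):
    """Return the backend service responsible for the given request path."""
    for prefix, service in rules:
        if path.startswith(prefix):
            return service
    return default


if __name__ == "__main__":
    for path in ("/images/logo.png", "/videos/intro.mp4",
                 "/pdfs/whitepaper.pdf", "/checkout"):
        print(f"{path} -> {select_backend_service(path)}")
```

Each backend service can then be scaled and tuned independently, for instance giving the video servers more bandwidth without touching the servers that handle dynamic requests.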

The objective of this article was to introduce the various load balancing schemes supported by Google Compute Engine. We will drill down into each of these scenarios in future posts.