Fight for Serverless Cloud Domination Continues

In this post, we’ll take a close look at Azure Functions and extend the analysis that my colleague Alex started in a previous post on Google Cloud Functions vs AWS Lambda. Rather than declaring a “winner,” we will highlight the key points of interest in serverless custom code execution as implemented on each cloud platform, comparing Microsoft Azure Functions, Google Cloud Functions, and AWS Lambda.

Serverless is one of the hottest topics in cloud computing. As we have recently discussed on the blog, serverless doesn’t actually mean that no servers are involved; it means that developers don’t have to worry about servers or any other infrastructure issues or operational details. Instead, serverless computing allows developers to focus on the code they build to expose different functions.

Among the major cloud platforms, Microsoft Azure’s release of its Azure Functions in May 2016 follows the “serverless revolution” that started with AWS Lambda in 2014, and the release of Google Cloud Functions in February 2016.

Introducing Azure Functions

What Azure was missing prior to 2015 was related to serverless custom code execution, an equivalent of API Apps, like AWS Lambda and Google Cloud Functions. Prior to the official release of Azure Functions, Microsoft Azure already had a variety of serverless services. Its platform as a service (PaaS) features performed some functions for you and allowed you to scale up as needed. As a developer, you could size and pay according to what you needed to process, not according to the physical resources you used.

In an application, your code typically does not run continuously unless it has many data batches to process. Some code runs because something has happened and you need to handle it. Basically, your application can be described in terms of events. Even if you don’t have any events, you can create them with a timer that triggers your code at predefined intervals, and that can run forever. “Events” means that you run code only when you really need it to run. If you need to generate custom events to trigger your code, this is probably not the correct model to choose.

Even if you need to scale up and you have many requests to handle, for much of the time, at sub-second (millisecond) granularity, your application is just waiting. This is an incredible waste of time and money, as you are traditionally charged by the minute. In the serverless model, a single piece of code is triggered to handle an event, and you are charged for the time it actually executes and for the resources (CPU and memory) it actually requests. Sub-second billing is both a requirement and a feature of serverless computing.

This is what Azure Functions is all about: abstraction from the server, event-driven development, and sub-second billing.

Azure Functions is a Worker Role, which is a job that runs in the background. Worker roles in App Services are implemented with WebJobs, a feature of Web Apps, because they run in the same tenant as a deployed Web App. That’s why you can think of Azure Functions as an Azure WebJobs SDK as a Service: you write a WebJob in a PaaS manner, without having to consider how a WebJob works or how it is structured. Azure WebJobs offers two execution models: continuous running and triggered running.

Azure Functions implements only the triggered running scenario. For continuously running tasks, you have to deal with WebJobs directly.

Scalability, availability, and resource limits

Azure Functions relies on the Azure App Services infrastructure. Single functions are aggregated in Function Apps, which contain multiple functions that share allocated memory in multiples of 128 MB, up to 1.5 GB.

There are two options for hosting Azure Functions: an App Service Plan, in which you manage the resources yourself, and the new Consumption Plan.

In the App Service Plan, you choose the size of the dedicated instances underlying the service and how scaling is managed (manual or autoscaling). The service scales out manually or automatically depending on the options chosen. Autoscaling allocates machines based on usage metrics, for example, CPU load measured over a five-minute window. The service is priced by the hour and charged by the minute. This is the best choice if you already have an underutilized service plan, or if you think you will run your functions almost continuously.
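
To make the idea of a metric-based rule concrete, here is a minimal sketch of a scale decision driven by the average CPU load over a five-minute window. The thresholds, the instance limits, and the `nextInstanceCount` helper are hypothetical values chosen for illustration; they are not Azure's actual autoscale algorithm or its defaults.

```javascript
// Hypothetical autoscale rule. All numbers are illustrative assumptions,
// not values used by Azure.
const RULE = {
  scaleOutAboveCpu: 70, // percent: add an instance above this average
  scaleInBelowCpu: 30,  // percent: remove an instance below this average
  minInstances: 1,
  maxInstances: 10,
};

// cpuSamples: CPU percentages collected over the last five minutes.
function nextInstanceCount(cpuSamples, currentInstances, rule = RULE) {
  if (cpuSamples.length === 0) return currentInstances;
  const average =
    cpuSamples.reduce((sum, value) => sum + value, 0) / cpuSamples.length;

  let target = currentInstances;
  if (average > rule.scaleOutAboveCpu) target = currentInstances + 1;
  else if (average < rule.scaleInBelowCpu) target = currentInstances - 1;

  // Never go below the minimum or above the maximum number of instances.
  return Math.min(rule.maxInstances, Math.max(rule.minInstances, target));
}

// Average CPU is 84.6%, so one instance is added.
console.log(nextInstanceCount([82, 91, 77, 85, 88], 2)); // 3
```

In a real plan you would configure such rules in the service settings rather than in code; the sketch only shows the shape of the decision.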

With the new Consumption Plan, you have no control over autoscaling or host size: instances are added or removed automatically to minimize the total time needed to handle requests. Billing is sub-second and is based on the number of actual executions of the Function App, plus the duration of each execution (not idle time) multiplied by the reserved memory, measured in GB-seconds.
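
As a rough illustration of how this billing model adds up, the sketch below estimates a monthly bill from the number of executions, the average duration, and the memory size. It uses the Azure prices listed in the summary table at the end of this post, and it assumes that memory is rounded up to the next multiple of 128 MB. It ignores any free compute grant, and the function names are ours, not part of any Azure SDK.

```javascript
// Prices taken from the summary table in this post; they may have changed.
const PRICE_PER_MILLION_EXECUTIONS = 0.20; // USD
const PRICE_PER_GB_SECOND = 0.000016;      // USD
const FREE_EXECUTIONS = 1000000;           // first million requests are free

// Assumption: memory is billed in 128 MB steps, between 128 MB and 1.5 GB.
function roundUpMemoryMb(memoryMb) {
  const rounded = Math.ceil(memoryMb / 128) * 128;
  return Math.min(Math.max(rounded, 128), 1536);
}

function estimateMonthlyCost(executions, avgDurationMs, memoryMb) {
  const memoryGb = roundUpMemoryMb(memoryMb) / 1024;
  // Resource consumption: executions x duration (s) x memory (GB).
  const gbSeconds = executions * (avgDurationMs / 1000) * memoryGb;
  const billableExecutions = Math.max(executions - FREE_EXECUTIONS, 0);
  const executionCost =
    (billableExecutions / 1e6) * PRICE_PER_MILLION_EXECUTIONS;
  const computeCost = gbSeconds * PRICE_PER_GB_SECOND;
  return { gbSeconds, executionCost, computeCost, total: executionCost + computeCost };
}

// 3 million executions of 200 ms each at 128 MB:
// 75,000 GB-s of compute plus 2 million billable executions.
const estimate = estimateMonthlyCost(3000000, 200, 128);
console.log(estimate.total.toFixed(2)); // 1.60
```

The point of the example is that idle time never appears in the formula: only executions and their duration contribute to the bill.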

Resource limits are not clearly documented or tested at the moment. According to various sources, Azure Functions backs a Function App with a maximum of 10 instances.

Execution is limited to 5 minutes per invocation. Forum discussions point out that this can be a limitation, especially for long-running tasks.

Supported languages and dependency management

On top of the WebJobs service, Azure has added the new WebJobs Script runtime. It implements the Azure Functions Host, the support for Dynamic Compilation, and the language abstraction that enables multi-language support. You can use many languages, such as F#, Python, Batch, PHP, and PowerShell, and new languages will be added in the future. For now, only C# and Node.js are officially supported.

For C#, your code is written in .csx files, which are C# scripting files introduced to the .NET world with the advent of Roslyn and Roslyn-based compilers. C# is treated as a dynamic language: the assembly is generated dynamically just before the request is executed.

All dependencies are managed with the NuGet service. Each function contains a project.json file that lists the required libraries, which are downloaded automatically at first execution.

You can find more details on .NET Core on our blog.

Node.js, with JavaScript or its TypeScript superset, has been supported natively by App Services from day one.

Deployments and versioning

Azure Functions relies on the App Services infrastructure for deployment, which already supports various code sources such as Bitbucket, Dropbox, GitHub, and Visual Studio Team Services. Once one of these sources is configured, it is no longer possible to edit functions from the portal: the code is read-only.

Deployments work on an app basis, not single functions. Therefore, when you deploy, you impact all of your functions. The following structure represents a folder containing all of the code for a Function App. As you can see, the content of wwwroot contains a host.json file that defines global and common parameters for the app and all the single functions.

Different functions in the same app can be implemented in different languages.

    wwwroot
     | - host.json
     | - mynodefunction
     | | - function.json
     | | - index.js
     | | - node_modules
     | | | - ... packages ...
     | | - package.json
     | - mycsharpfunction
     | | - function.json
     | | - run.csx

There is no direct support for versioning in the app, so you can only run the last or a specific version of your functions. Versioning is, of course, supported through the source code repository, such as a Git repository. Other services will probably support versioning in a more structured way, with versions and aliases for different requirements like testing or staging, as Functions becomes more pervasive across different scenarios.

Invocations, events, and logging

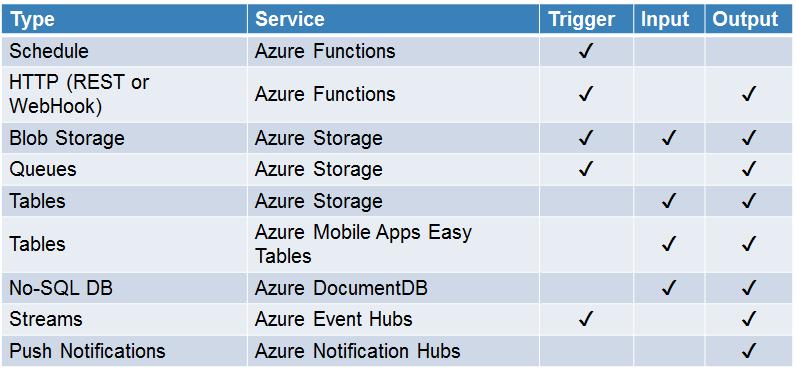

Azure Functions supports the event-driven approach. This means that you can trigger a function whenever something interesting happens within the cloud environment. Many different services can trigger a function, and many of these triggers come directly from the WebJobs nature of Azure Functions. It also supports HTTP triggers, either from a REST API or from a WebHook.

All triggers are bound to function parameters and natively supported by the environment.

You don’t need manual configuration, as the environment helps you with the mapping. Each function describes its bindings in a function.json file, where you can configure each binding’s name, type, direction, and the settings of its source.

    {
      "bindings": [
        {
          "authLevel": "function",
          "type": "httpTrigger",
          "direction": "in",
          "name": "req"
        },
        {
          "type": "http",
          "direction": "out",
          "name": "$return"
        }
      ],
      "disabled": false
    }
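
To show how these bindings reach the code, here is a minimal sketch of an `index.js` that could sit next to the function.json above. The trigger binding named `req` arrives as the second function parameter, and because the output binding is named `$return`, the response is handed back as the second argument of `context.done()`. The `name` parameter and the response messages are our own illustrative choices.

```javascript
// index.js: HTTP-triggered function for the bindings shown above.
// "req" matches the name of the httpTrigger input binding.
function run(context, req) {
  // Illustrative input: read "name" from the query string or the body.
  const name = (req.query && req.query.name) || (req.body && req.body.name);

  context.log('HTTP trigger received a request');

  const response = name
    ? { status: 200, body: 'Hello ' + name }
    : { status: 400, body: 'Please pass a name in the query string or request body' };

  // With an output binding named "$return", the response object is
  // passed as the second argument of context.done().
  context.done(null, response);
}

// The Functions host loads the exported function.
module.exports = run;
```

No routing or parsing code is needed in the function itself: the mapping between the HTTP request and `req` is done by the environment, as described above.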

Azure Functions inherits all App Services features for logging. On the remote console, you can inspect diagnostic messages such as debug messages and function timestamps and invocations. You can also connect to a streaming log to see the full content at the HTTP level.

Load testing and statistics

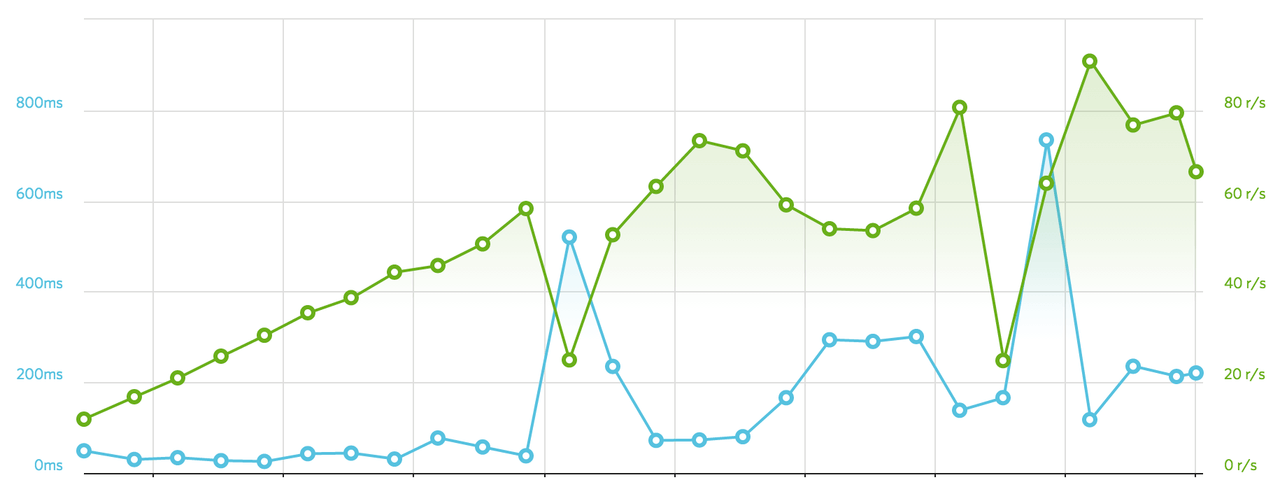

We tested a Function App with the same code that we used in our previous post comparing Google Cloud Functions with Lambda (i.e., generating one thousand MD5 hashes for each invocation).
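
For readers who want to reproduce a similar workload, the following is a minimal sketch of a function body that generates one thousand MD5 hashes using Node's built-in `crypto` module. The original test code is not reproduced in this post, so the choice of random input data and the `generateHashes` name are assumptions.

```javascript
const crypto = require('crypto');

// Generate `count` MD5 hashes, each over 16 random bytes.
// Hashing random input is an assumption; any varying input would do.
function generateHashes(count) {
  const hashes = [];
  for (let i = 0; i < count; i++) {
    const input = crypto.randomBytes(16);
    hashes.push(crypto.createHash('md5').update(input).digest('hex'));
  }
  return hashes;
}

const hashes = generateHashes(1000);
// An MD5 digest in hex is always 32 characters long.
console.log(hashes.length, hashes[0].length); // 1000 32
```

A workload like this is CPU-bound and has no external dependencies, which makes it a reasonable way to compare raw execution environments.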

We configured a load that increased linearly over 5 minutes, up to about 70 requests per second.
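
To give a feel for the size of such a test, the sketch below models the load profile as a straight ramp. It assumes that the ramp starts at zero and peaks at exactly 70 requests per second after 300 seconds; both are simplifications of the "about 70" figure above, and the function names are ours.

```javascript
// Assumed ramp: 0 req/s at t = 0, rising linearly to 70 req/s at t = 300 s.
const RAMP_SECONDS = 5 * 60;
const PEAK_RPS = 70;

// Target request rate (req/s) at `seconds` into the test.
function targetRate(seconds) {
  const t = Math.min(Math.max(seconds, 0), RAMP_SECONDS);
  return (PEAK_RPS * t) / RAMP_SECONDS;
}

// Total requests over the whole ramp: the area of the triangle
// under the rate curve, i.e. peak x duration / 2.
function totalRequests() {
  return (PEAK_RPS * RAMP_SECONDS) / 2;
}

console.log(targetRate(150));  // 35
console.log(totalRequests());  // 10500
```

Under these assumptions a single run sends roughly ten thousand requests in total.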

Please note that we have not re-executed the tests on AWS Lambda and Google Cloud Functions. Therefore, we cannot say whether these platforms have improved since last year. To summarize the earlier results, AWS Lambda averaged 400 to 600ms, largely independent of the increasing load. Google Cloud Functions had a better response time, averaging about 200ms, but it increased rapidly after reaching 60 req/s.

Below, we have plotted a chart that shows the average response time and the average number of requests per second. As with the previous test, we have deployed the function in the North Europe region.

The average response time stays below 400ms, and generally at no more than 300ms. As with Google Cloud Functions, we noticed a change in performance at about 60 req/s, where the average response time increases from about 80-100ms to about 200ms. We are still investigating the strange peaks in response time that occurred twice in this test and that affected the number of requests handled per second.

Microsoft Azure Functions vs Google Cloud Functions: Function code compatibility

There is no support or effort to make Azure Functions compatible with AWS Lambda and Google Cloud Functions. From the code point of view, the function content can be refactored to be mostly independent from the infrastructure. Trigger messages are handled automatically and mapped to parameters.

Language support is different because of different developer communities. Node.js is supported by all of the platforms (with the obvious differences and similarities in function content implementation) and, thanks to the release of .NET Core as open source, C# has also gained global interest.

We can improve code modularity by moving content code into separate functions, packages, or libraries, and using the function code only for parameter handling and content code invocation. Then, when you really need to migrate, you will only need to make minor changes to adapt your code.
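
One way to picture this separation is a single piece of content code wrapped by thin, platform-specific adapters. The sketch below is an assumption about how you might structure it, not an official pattern: `hashMessage` and the `message` parameter are hypothetical, the Azure adapter assumes an output binding named `$return`, and the Lambda adapter assumes an API Gateway proxy event.

```javascript
const crypto = require('crypto');

// Content code: no dependency on any cloud platform.
function hashMessage(message) {
  return crypto.createHash('md5').update(String(message)).digest('hex');
}

// Function code for Azure Functions: parameter handling only.
function azureHandler(context, req) {
  const message = (req.query && req.query.message) || '';
  context.done(null, { status: 200, body: hashMessage(message) });
}

// Function code for AWS Lambda behind API Gateway.
function lambdaHandler(event, context, callback) {
  const message = (event.queryStringParameters || {}).message || '';
  callback(null, { statusCode: 200, body: hashMessage(message) });
}

// Function code for a Google Cloud Functions HTTP trigger.
function gcfHandler(req, res) {
  const message = (req.query && req.query.message) || '';
  res.status(200).send(hashMessage(message));
}

// Each platform expects its own export shape, for example
// `module.exports = azureHandler;` on Azure Functions.
```

With this layout, migrating means rewriting only the few lines of the adapter, while the content code and its tests stay untouched.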

Conclusion

We can summarize the functionalities discussed in this post with the following table:

| Feature | AWS Lambda | Google Cloud Functions | Azure Functions |
|---|---|---|---|
| Scalability & availability | Automatic scaling (transparently) | Automatic scaling | Manual or metered scaling (App Service Plan), or sub-second automatic scaling (Consumption Plan) |
| Max # of functions | Unlimited functions | 1000 functions per project | Unlimited functions |
| Concurrent executions | 1000 parallel executions per account, per region (soft limit) | No limit | No limit |
| Max execution | 300 sec (5 min) | 540 sec (9 min) | 300 sec (5 min) |
| Supported languages | JavaScript, Java, C#, and Python | Only JavaScript | C#, JavaScript, F#, Python, Batch, PHP, PowerShell |
| Dependencies | Deployment Packages | npm package.json | npm, NuGet |
| Deployments | Only ZIP upload (to Lambda or S3) | ZIP upload, Cloud Storage or Cloud Source Repositories | Visual Studio Team Services, OneDrive, Local Git repository, GitHub, Bitbucket, Dropbox, External repository |
| Environment variables | Yes | Not yet | App Settings and ConnectionStrings from App Services |
| Versioning | Versions and aliases | Cloud Source branch/tag | Source repository branch/tag (e.g., Git) |
| Event-driven | S3, SNS, SES, DynamoDB, Kinesis, CloudWatch, Cognito, API Gateway, CodeCommit, etc. | Cloud Pub/Sub or Cloud Storage Object Change Notifications | Blob, EventHub, Generic WebHook, GitHub WebHook, Queue, Http, ServiceBus Queue, Service Bus Topic, Timer triggers |
| HTTP(S) invocation | API Gateway | HTTP trigger | HTTP trigger |
| Orchestration | AWS Step Functions | Not yet | Azure Logic Apps |
| Logging | CloudWatch Logs | Stackdriver Logging | App Services monitoring |
| Monitoring | CloudWatch & X-Ray | Stackdriver Monitoring | Application Insights |
| In-browser code editor | Yes | Only with Cloud Source Repositories | Functions environment, App Service editor |
| Granular IAM | IAM roles | Not yet | IAM roles |
| Pricing | 1M requests for free, then $0.20/1M invocations, plus $0.00001667/GB-sec | 1M requests for free, then $0.40/1M invocations, plus $0.00000231/GB-sec | 1M requests for free, then $0.20/1M invocations, plus $0.000016/GB-sec |

Azure Functions is the logical evolution of PaaS programming in Azure and the serverless proposal for custom code execution. As with many other services in Azure, it will improve over time as development continues with feedback from early adopters.

If you enjoyed this post, feel free to comment and let us know what you think of the serverless revolution. We are happily using Lambda Functions in the Cloud Academy platform as well, and we can’t wait to see what will happen in the near future (if you don’t know it yet, have a look at the Serverless Framework).