Amazon Rekognition: the new Image Analysis tool powered by Deep Learning

Amazon itself has been powered by Machine Learning technologies for almost two decades. Two years ago, AWS began offering part of that technology as a service, starting with Amazon Machine Learning.

Just a few weeks ago, AWS announced the new P2 instance types (up to 16 GPUs) and a Deep Learning AMI. Less than a week ago, Amazon also announced that it is investing in MXNet as its Deep Learning framework of choice.

During the first re:Invent 2016 Keynote, Andy Jassy announced a whole new set of services based on Deep Learning: Rekognition, Polly, and Lex.

Here is a recap of the main functionalities offered by Amazon Rekognition.

What is Amazon Rekognition?

Given the explosive growth of images in the past few years, the ability to search, verify, and organize millions of images will unlock a whole new set of possibilities. This is especially true because the technology is offered to everyone as a simple set of APIs. In 2017 alone, we expect that 1.2 trillion images will be taken.

Amazon Rekognition is a fully managed service that will allow you to extract information from images to make your application smarter and your customers’ experience better. Rekognition comes with built-in object and scene detection and facial analysis capabilities.

Also, because Amazon Rekognition is powered by deep learning, the underlying models will keep improving in accuracy over time, and your application will benefit from those improvements transparently, without any changes on your side.

A few more interesting details about Amazon Rekognition:

- It allows both real-time and batch processing.

- It’s already available in three AWS Regions (US East, US West, and EU West).

- It includes a free tier of 5,000 images per month for the first 12 months of usage.

- It’s already well integrated with S3, Lex, and Polly.

- It does NOT store your images or any sensitive information contained in them; it keeps only a vector representation of the faces you index, which is then used for indexing, search, etc.

Watch this short video where instructor Jeremy Cook explains what the Amazon Rekognition Service is, as part of the Introduction to Amazon Rekognition Course.

The AWS Console looks nice and intuitive:

Amazon Rekognition API Overview

So, what can you do with Amazon Rekognition?

The two main features allow you to detect objects and faces in any given picture.

Specifically, object and scene detection will provide a set of labels and concepts found in the image. On the other hand, facial analysis allows you to find, compare, and search faces.

Let’s recap each API.

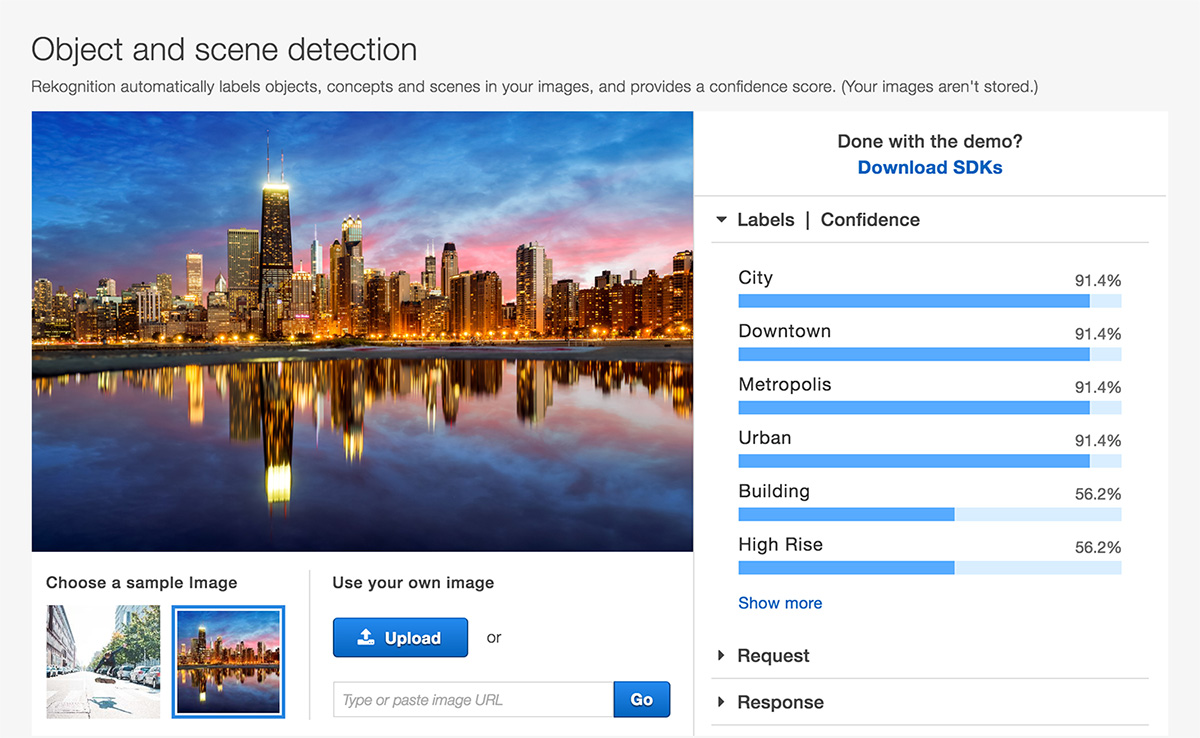

DetectLabels

This API takes individual images as input and returns an ordered list of labels and a corresponding numeric confidence index.

As shown in the screenshot above, this API returns up to a given number of labels, starting from the most confident one. The JSON output will look something like the following:

```json
{
  "Labels": [
    {
      "Confidence": 91.47468566894531,
      "Name": "City"
    },
    {
      "Confidence": 91.47468566894531,
      "Name": "Downtown"
    },
    {
      "Confidence": 91.47468566894531,
      "Name": "Metropolis"
    },
    {
      "Confidence": 91.47468566894531,
      "Name": "Urban"
    },
    {
      "Confidence": 56.20014190673828,
      "Name": "Building"
    }
  ]
}
```

In this case, the first four labels have a pretty high level of confidence – over 90% – while the fifth is only 56%. As I mentioned above, the list of labels is ordered by confidence and, in most cases, you can simply ignore labels below an arbitrary threshold.
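As a minimal sketch of that filtering step, the Python snippet below parses a trimmed copy of the response shown above and keeps only the labels at or above a threshold. The 80% cut-off and the `confident_labels` helper are illustrative assumptions, not part of the Rekognition API; the DetectLabels request itself also accepts `MinConfidence` and `MaxLabels` parameters if you prefer to filter on the service side.

```python
import json

# Sample DetectLabels response, copied from the JSON shown above.
response = json.loads("""
{
  "Labels": [
    {"Confidence": 91.47468566894531, "Name": "City"},
    {"Confidence": 91.47468566894531, "Name": "Downtown"},
    {"Confidence": 91.47468566894531, "Name": "Metropolis"},
    {"Confidence": 91.47468566894531, "Name": "Urban"},
    {"Confidence": 56.20014190673828, "Name": "Building"}
  ]
}
""")


def confident_labels(response, threshold=80.0):
    """Return label names whose confidence (0-100) is at least `threshold`,
    ordered from most to least confident (ties keep their original order)."""
    labels = sorted(response.get("Labels", []),
                    key=lambda label: label["Confidence"], reverse=True)
    return [label["Name"] for label in labels if label["Confidence"] >= threshold]


print(confident_labels(response))        # ['City', 'Downtown', 'Metropolis', 'Urban']
print(confident_labels(response, 50.0))  # same four labels plus 'Building'
```

The threshold is a per-application choice: a tagging UI might accept lower-confidence labels as suggestions, while an automated pipeline would usually want a stricter cut-off.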

Typical use cases for this API are smart search applications and automatic tagging. For example, you may want to implement dynamic search indexing with AWS Lambda and Elasticsearch, so that a new item is indexed each time a new file is uploaded to S3.
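The indexing step of such a pipeline could look roughly like the sketch below. It assumes a Lambda function triggered by S3 event notifications and deliberately leaves out the actual DetectLabels and Elasticsearch calls: `build_search_document`, the document fields, and the bucket and key names are all hypothetical. Only the `s3.bucket.name` and `s3.object.key` fields come from the standard S3 event format, in which object keys arrive URL-encoded.

```python
from urllib.parse import unquote_plus


def build_search_document(s3_record, labels, threshold=80.0):
    """Turn one S3 event record plus DetectLabels-style labels into a
    hypothetical search document for an Elasticsearch index."""
    bucket = s3_record["s3"]["bucket"]["name"]
    # Keys in S3 event notifications are URL-encoded (e.g. spaces become '+').
    key = unquote_plus(s3_record["s3"]["object"]["key"])
    # Keep only labels the model is reasonably sure about (assumed threshold).
    tags = [label["Name"] for label in labels if label["Confidence"] >= threshold]
    return {"id": f"s3://{bucket}/{key}", "bucket": bucket, "key": key, "tags": tags}


# Minimal fake event record containing only the fields used above.
record = {"s3": {"bucket": {"name": "my-photos"},
                 "object": {"key": "holidays/new+york.jpg"}}}
labels = [{"Name": "City", "Confidence": 91.5},
          {"Name": "Building", "Confidence": 56.2}]
print(build_search_document(record, labels))
```

In a real function, `labels` would be the `Labels` list that DetectLabels returns for that object, and the resulting dictionary would be sent to the search cluster.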

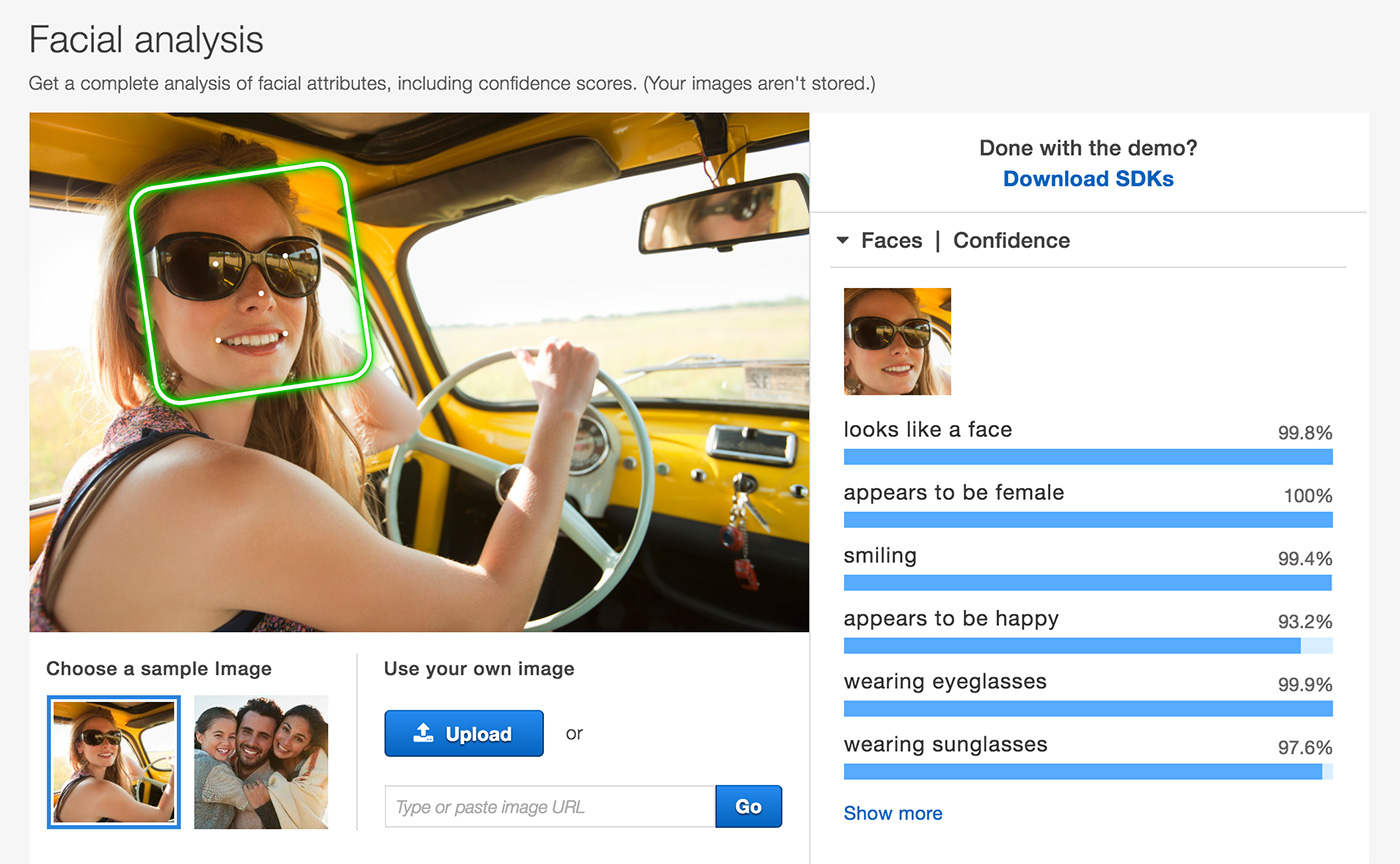

DetectFaces

This API takes individual images as input and detects the presence and location of faces. In addition to the face bounding box and landmarks, the API also returns a set of attributes for each face, such as detected emotions, gender, glasses, open or closed eyes, mustache, beard, smile, etc.

This API will return a JSON similar to the following:

```json
{
  "FaceDetails": [
    {
      "Beard": {
        "Confidence": 97.11119842529297,
        "Value": false
      },
      "BoundingBox": {...},
      "Confidence": 99.8899917602539,
      "Emotions": [
        {
          "Confidence": 93.29251861572266,
          "Type": "HAPPY"
        },
        {
          "Confidence": 28.57428741455078,
          "Type": "CALM"
        },
        {
          "Confidence": 1.4989674091339111,
          "Type": "ANGRY"
        }
      ],
      "Eyeglasses": {
        "Confidence": 99.99998474121094,
        "Value": true
      },
      "EyesOpen": {
        "Confidence": 96.2729721069336,
        "Value": true
      },
      "Gender": {
        "Confidence": 100,
        "Value": "Female"
      },
      "Landmarks": [
        {
          "Type": "eyeLeft",
          "X": 0.23941855132579803,
          "Y": 0.2918034493923187
        },
        {
          "Type": "eyeRight",
          "X": 0.3292391300201416,
          "Y": 0.27594369649887085
        },
        {
          "Type": "nose",
          "X": 0.29817715287208557,
          "Y": 0.3470197319984436
        },
        ...
      ],
      "MouthOpen": {
        "Confidence": 72.5211181640625,
        "Value": true
      },
      "Mustache": {
        "Confidence": 77.63107299804688,
        "Value": false
      },
      "Pose": {
        "Pitch": 8.250975608825684,
        "Roll": -8.29802131652832,
        "Yaw": 14.244261741638184
      },
      "Quality": {
        "Brightness": 46.077880859375,
        "Sharpness": 100
      },
      "Smile": {
        "Confidence": 99.47274780273438,
        "Value": true
      },
      "Sunglasses": {
        "Confidence": 97.63555145263672,
        "Value": true
      }
    }
  ]
}
```

Typical use cases for this API are photo recommendations based on facial attributes and sentiment analysis of in-store customers.
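Landmark coordinates, like the bounding box, are expressed as ratios of the image width and height, so they need to be scaled before you draw anything on the picture. The sketch below summarizes one entry of `FaceDetails` taken from a trimmed copy of the response above; the `summarize_face` helper and the 1000 x 800 image size are assumptions made for illustration.

```python
def summarize_face(face, image_width, image_height):
    """Summarize one FaceDetails entry: the strongest emotion, the smile flag,
    and landmark positions converted from ratios (0-1) to pixel coordinates."""
    top = max(face.get("Emotions", []), key=lambda e: e["Confidence"], default=None)
    landmarks = {
        lm["Type"]: (round(lm["X"] * image_width), round(lm["Y"] * image_height))
        for lm in face.get("Landmarks", [])
    }
    return {
        "emotion": top["Type"] if top else None,
        "smiling": face.get("Smile", {}).get("Value", False),
        "landmarks_px": landmarks,
    }


# Trimmed from the DetectFaces response shown above.
face = {
    "Emotions": [
        {"Confidence": 93.29251861572266, "Type": "HAPPY"},
        {"Confidence": 28.57428741455078, "Type": "CALM"},
        {"Confidence": 1.4989674091339111, "Type": "ANGRY"},
    ],
    "Landmarks": [
        {"Type": "eyeLeft", "X": 0.23941855132579803, "Y": 0.2918034493923187},
        {"Type": "nose", "X": 0.29817715287208557, "Y": 0.3470197319984436},
    ],
    "Smile": {"Confidence": 99.47274780273438, "Value": True},
}
print(summarize_face(face, 1000, 800))
# {'emotion': 'HAPPY', 'smiling': True,
#  'landmarks_px': {'eyeLeft': (239, 233), 'nose': (298, 278)}}
```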

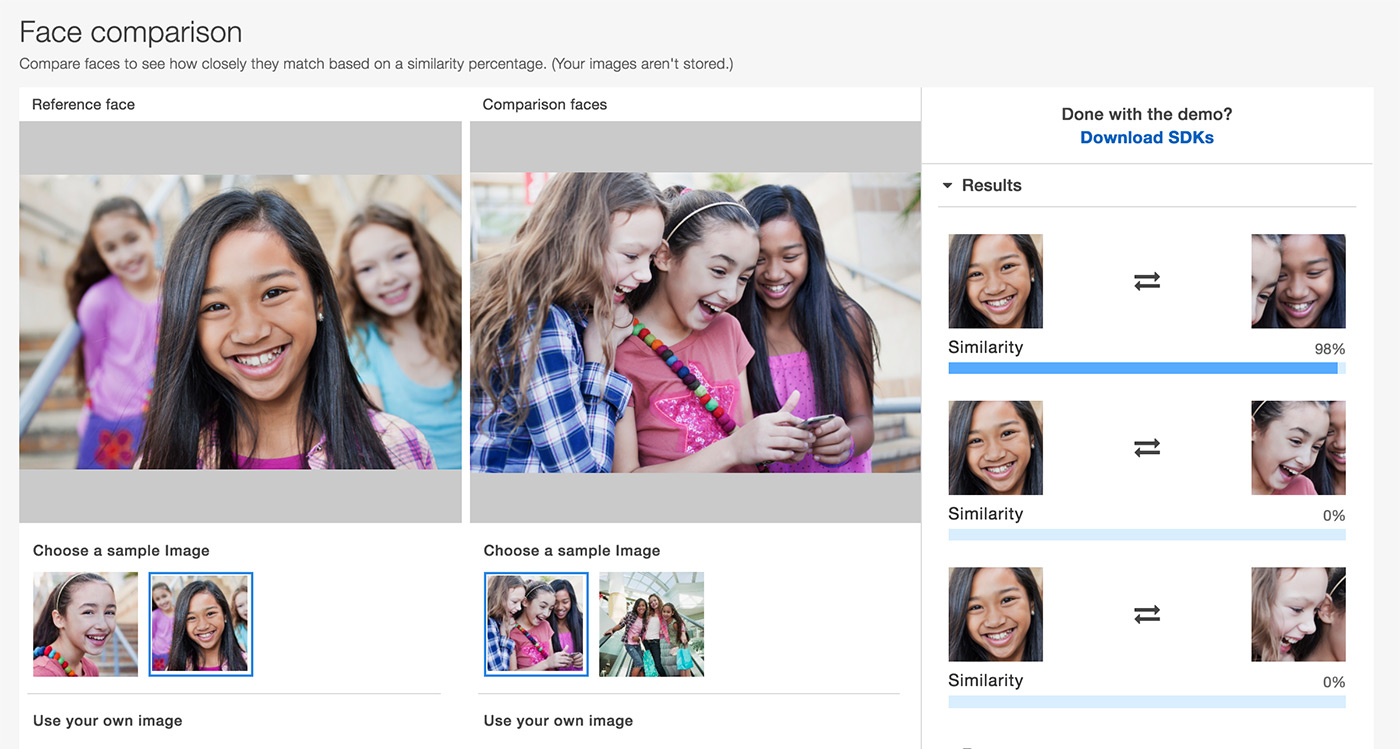

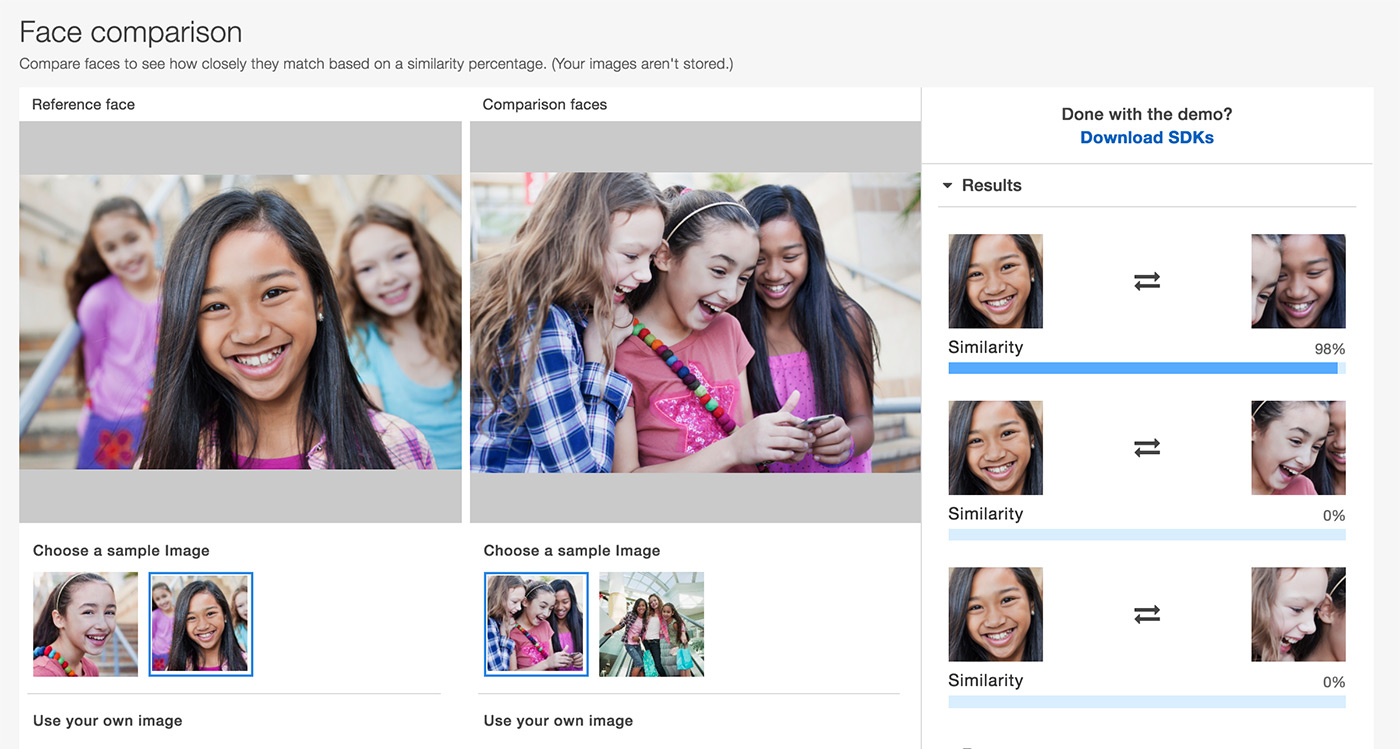

CompareFaces

This API is probably the most interesting, as it allows you to upload two images. The source image should contain one reference face, which will be compared to every face found in the second (target) image. For each target face, a similarity score (the Similarity field, from 0 to 100) will be computed.

Interestingly, the face comparison problem can be much harder for humans than for machines, unless you only consider your family and close friends. Outside a person's close circle of friends or ethnic group, comparing faces becomes more difficult.

Amazon’s deep learning model, on the other hand, has been trained on a huge dataset of images that contains faces of different qualities, sizes, distortion conditions, and ethnic groups, which gives it an impressive 98% average accuracy.

The API will return a JSON similar to the following:

```json
{
  "FaceMatches": [
    {
      "Face": {
        "BoundingBox": {...},
        "Confidence": 99.99597930908203
      },
      "Similarity": 92
    },
    {
      "Face": {
        "BoundingBox": {...},
        "Confidence": 99.97322845458984
      },
      "Similarity": 0
    },
    {
      "Face": {
        "BoundingBox": {...},
        "Confidence": 99.94225311279297
      },
      "Similarity": 0
    }
  ],
  "SourceImageFace": {
    "BoundingBox": {...},
    "Confidence": 99.93081665039062
  }
}
```

Typical use cases for this API are face-based verification (e.g. seamless access to hotel rooms, online exam identification, etc.) and person localization for public safety.
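For a verification scenario, a minimal sketch might simply check whether any target face reaches a similarity threshold. The 80% threshold, the `matching_faces` helper, and the bounding-box numbers (elided as `{...}` in the response above) are made up for illustration; CompareFaces also accepts a `SimilarityThreshold` request parameter if you want the service to drop weak matches for you.

```python
def matching_faces(response, min_similarity=80.0):
    """Return (similarity, bounding_box) for each target face whose similarity
    to the source face reaches `min_similarity`, best match first."""
    matches = [
        (match["Similarity"], match["Face"].get("BoundingBox"))
        for match in response.get("FaceMatches", [])
        if match["Similarity"] >= min_similarity
    ]
    return sorted(matches, key=lambda pair: pair[0], reverse=True)


# Similarity and confidence values from the response above;
# bounding boxes are invented because the original elides them.
response = {
    "FaceMatches": [
        {"Face": {"BoundingBox": {"Width": 0.2, "Height": 0.3, "Left": 0.1, "Top": 0.1},
                  "Confidence": 99.99597930908203}, "Similarity": 92},
        {"Face": {"BoundingBox": {"Width": 0.2, "Height": 0.3, "Left": 0.5, "Top": 0.1},
                  "Confidence": 99.97322845458984}, "Similarity": 0},
        {"Face": {"BoundingBox": {"Width": 0.2, "Height": 0.3, "Left": 0.7, "Top": 0.2},
                  "Confidence": 99.94225311279297}, "Similarity": 0},
    ]
}
matches = matching_faces(response)
verified = bool(matches)  # True: one target face is similar enough
print(verified, matches[0][0])  # True 92
```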

IndexFaces, SearchFacesByImage, and SearchFaces

This set of APIs allows you to build a searchable collection of faces and then query this index either by image or by ID.

Once you have added a large set of images to a collection with IndexFaces, Amazon Rekognition extracts the facial information and builds a searchable index. You can query this index by uploading a face image (SearchFacesByImage), and Amazon Rekognition will return the matching faces from the images you indexed. You can also query the index by face ID (SearchFaces), which is the unique identifier associated with the vector representation of a face. Since Amazon Rekognition will NOT store any image you upload, you will probably want to maintain your own mapping between these IDs and the original images.
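Because the service keeps only face vectors, mapping face IDs back to images is up to you. The sketch below uses a plain Python dictionary as a stand-in for whatever database you would use in production; the helper names, face IDs, and image keys are hypothetical, while `FaceRecords`, `FaceMatches`, `Face.FaceId`, and `Similarity` follow the shape of the IndexFaces and search responses. IndexFaces also accepts an `ExternalImageId` that is returned with each face, which can serve a similar purpose.

```python
def record_indexed_faces(index_response, image_key, face_to_image):
    """Remember FaceId -> original image key for every face returned by IndexFaces."""
    for record in index_response.get("FaceRecords", []):
        face_to_image[record["Face"]["FaceId"]] = image_key
    return face_to_image


def images_for_matches(search_response, face_to_image, min_similarity=80.0):
    """Map a SearchFaces / SearchFacesByImage response back to original images,
    keeping the service's match order and skipping duplicates."""
    images = []
    for match in search_response.get("FaceMatches", []):
        image = face_to_image.get(match["Face"]["FaceId"])
        if image is not None and match["Similarity"] >= min_similarity and image not in images:
            images.append(image)
    return images


# Hypothetical IndexFaces responses for two images.
face_to_image = {}
record_indexed_faces({"FaceRecords": [{"Face": {"FaceId": "face-1"}},
                                      {"Face": {"FaceId": "face-2"}}]},
                     "team-photo.jpg", face_to_image)
record_indexed_faces({"FaceRecords": [{"Face": {"FaceId": "face-3"}}]},
                     "badge.jpg", face_to_image)

# Hypothetical search response.
search = {"FaceMatches": [
    {"Similarity": 97.1, "Face": {"FaceId": "face-3"}},
    {"Similarity": 95.0, "Face": {"FaceId": "face-1"}},
    {"Similarity": 40.0, "Face": {"FaceId": "face-2"}},
]}
print(images_for_matches(search, face_to_image))  # ['badge.jpg', 'team-photo.jpg']
```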

Please note that you won't need to call IndexFaces and DetectFaces separately, as you can directly obtain the same facial attributes by invoking IndexFaces with the DetectionAttributes parameter set to ALL.
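As a rough sketch, the request below asks IndexFaces for the full attribute set. `index_faces_request`, the collection name, and the S3 location are hypothetical; the snippet only builds the keyword arguments you would pass to the `index_faces` method of a boto3 `rekognition` client, so it runs without AWS credentials.

```python
def index_faces_request(collection_id, bucket, key, all_attributes=True):
    """Build keyword arguments for a Rekognition IndexFaces call on an S3 image."""
    return {
        "CollectionId": collection_id,
        "Image": {"S3Object": {"Bucket": bucket, "Name": key}},
        # "ALL" asks for the full facial attributes (emotions, beard, eyeglasses, ...);
        # "DEFAULT" returns only a basic subset (bounding box, landmarks, pose, quality).
        "DetectionAttributes": ["ALL"] if all_attributes else ["DEFAULT"],
    }


request = index_faces_request("my-faces", "my-photos", "team-photo.jpg")
print(request["DetectionAttributes"])  # ['ALL']
# With boto3 installed and credentials configured, this would be sent as:
#   boto3.client("rekognition").index_faces(**request)
```

With `ALL`, each entry in the response's `FaceRecords` should include a `FaceDetail` object carrying the same kind of attributes that DetectFaces returns.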

Conclusion

Amazon Rekognition looks great. It is a fully managed and highly scalable service that offers advanced image analysis functionalities in a secure and low-cost fashion.

Earlier this year, I wrote about the Google Vision API, and I am definitely looking forward to performing some benchmarks. However, it already looks like Amazon’s solution comes with more advanced features from day one.

Let us know what you think of Amazon Rekognition and how you’re going to use it in production. Also, stay tuned for more exciting announcements during today’s second Keynote at AWS re:Invent 2016.