AWS re:Invent 2023: New announcements – Adam Selipsky Keynote

As both predicted and anticipated, the AWS re:Invent Keynote delivered by AWS CEO Adam Selipsky was packed full of new announcements. This marked the 2nd keynote of re:Invent 2023, with the first delivered by Peter DeSantis. To learn more about what Peter had to say, read here.

I think we were all expecting there to be emphasis on generative AI announcements, and this keynote certainly delivered on this point! With gen AI making up a large quantity of the new announcements, we can clearly see that AWS is taking this technology by the horns and not letting go.

In this post I want to review and highlight the main announcements which include:

So let’s get started from the top, with the first announcement of Amazon S3 Express One Zone.

This is a brand new Amazon S3 storage class designed with exceptional performance in mind. It is aimed to deliver single digit millisecond latency for workloads and applications that require such demands, such as AI/ML training, media processing, HPC and more. When compared to the S3 Standard storage class, Express One Zone (EOZ) can bring you savings of up to 50% and increase your performance by 10x. That means you now have a storage solution that seamlessly handles millions of requests per second with unparalleled efficiency, all while maintaining a consistently low, single-digit millisecond latency.

This storage class will ensure your data is stored within a single availability zone, as expected, and replicate your data multiple times within that AZ maintaining the durability and availability of your data that we have come to expect from Amazon S3. As a part of this new storage class, AWS has also introduced a new bucket type in order to ensure its performance efficiency. These new ‘directory buckets’ have been created to support thousands of requests per second, as a result this is specific to the EOZ class.

AWS always has a focus on optimizing performance and cost, and this is what drove them to develop the Graviton series of chips for its EC2 compute offerings. This year, we have another Graviton chip to add to the family, AWS Graviton 4. Boasting performance of 96 Neoverse V2 cores, 2 MB of L2 cache per core, and 12 DDR5-5600 channels, this chip–which is now the most powerful and energy efficient chip offered by AWS–gives its customers even more options when it comes to selecting the right compute power. When compared with the performance of its predecessor, Graviton3, the new Graviton4 chip is 40% faster for databases, 30% faster for web applications, and 45% faster for large Java applications.

This announcement followed on nicely from the release of the new chip, as this 8th generation Rxg EC2 instance type, the R8g, was built using Graviton4. It will be released in a variety of sizes and will contain 3 times as many vCPUs and 3 times as much memory as an R7g EC2 instance, making this the best price performance for memory-optimized workloads. This EC2 instance is ideal for any workloads that are memory-intensive, such as high-resolution streaming video, ML/AI, and real-time analytics. As always, security is a number one priority, and with the R8g being built using AWS Graviton4 processors, it also comes with enhanced security and encryption.

With the final announcement from a chip and EC2 instance perspective, Adam announced the new purpose-built chips for generative AI and ML learning, AWS Trainium2. AWS developed Trainium to improve performance and reduce cost for training workloads. They are heavily used for deep learning, large language models (LLMs), and generative AI models. With the new emphasis of gen AI in the industry, this chip will be great news for a lot of businesses looking to harness the benefits that this technology can bring. This new chip has been optimized for training foundation models with hundreds of billions, or even trillions of parameters, and is up to 4x faster than the previous Trn1 chip.

There were a number of announcements made around the customization capabilities of Amazon Bedrock, which is a service that allows you to access different foundation models (FMs) via API calls, including those curated by AWS in addition to those provided by 3rd parties. These announcements included:

Fine-Tuning: Creating a Fine-tuned model with Amazon Bedrock provides you with the ability to increase the accuracy of your models by allowing you to provide your own labeled business training data for different tasks. This customization training allows your models to learn the most appropriate response that’s specific to your own organizational tasks.

RAG with Knowledge Bases: RAG is a framework used within AI that enables you to supply additional factual data to a foundation model from an external source to help it generate responses using up-to-date information. A foundation model is only as good as the data that it has been trained on, so if there are irregularities in your responses, you can supplement the model with additional external data which allows the model to have the most recent, reliable and accurate data to work with. Knowledge Bases for Amazon Bedrock is a new feature that simplifies the implementation and management of RAG. It’s a fully-managed capability that manages custom data sources, data ingestion, and prompt augmentation, preventing you from having to implement your own custom integrations.

Continued Pre-training: When creating a Continued Pre-training model, you can use your own unlabeled data to train your model with content and knowledge that does not currently exist in the underlying foundation models. This allows you to use company documents, processes, and other documents that contain organization-specific data to improve the accuracy and effectiveness of your trained model. You also have the ability to add more and more unlabeled data to your model to allow you to retrain it to keep it as relevant and accurate as possible.

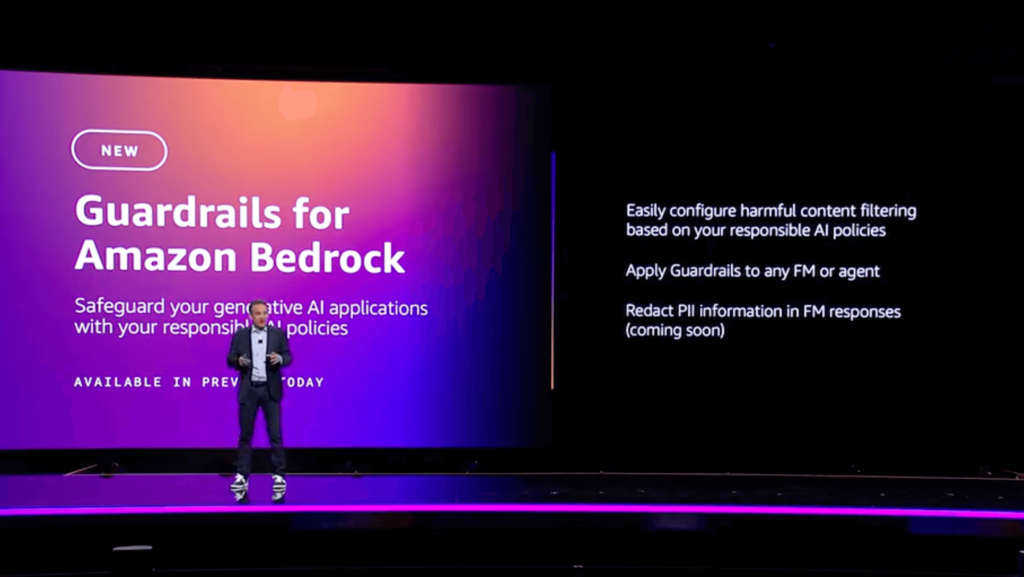

Responsible AI is designed to set out the principles and practices when working with artificial intelligence to ensure that it is adopted, implemented, and executed fairly, lawfully, and ethically, ensuring trust and transparency is given to the business and its customers. Considerations to how AI is used and how it may affect humanity must be governed and controlled by rules and frameworks. Trust, assurance, faith, and confidence should be embedded with any models and applications that are built upon AI.

With this in mind, AWS has released Guardrails for Amazon Bedrock, which has been designed to promote responsible AI when building applications on top of foundation models. Using different customized safeguards you can define topics, categories, and content with different filters, ensuring that only relevant content is presented to your users while protecting them from harmful and unacceptable content. These safeguards can be aligned to your own internal organization or company policies, allowing you to maintain your own principles.

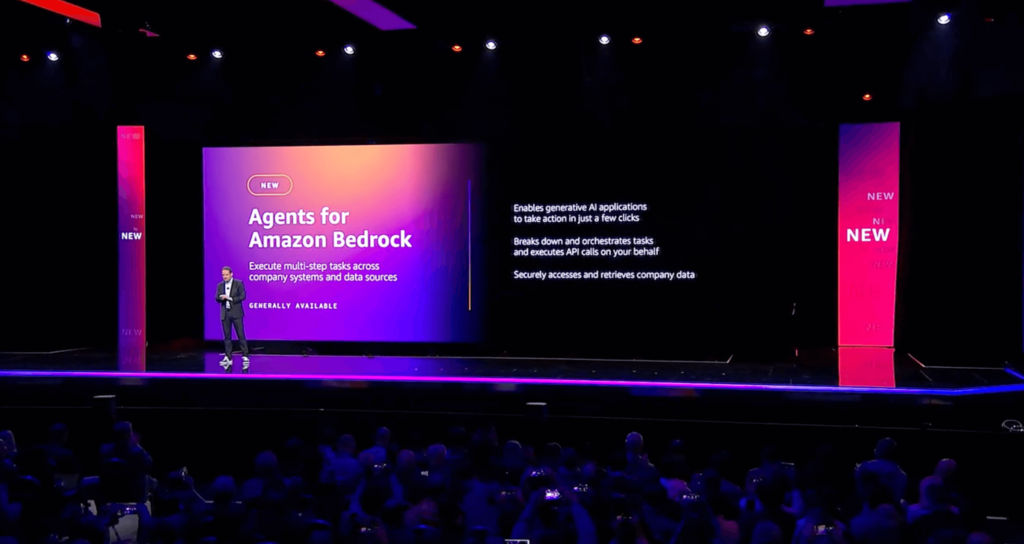

Amazon Bedrock Agents allow you to implement and define your own autonomous agents within your gen AI applications to help with task automation. These agents can then be used to facilitate end-users in completing tasks based on your own organizational data. The agents will also manage the different interactions between the end user, data sources, and the underlying foundation models, and can also trigger APIs to reach out to different knowledge bases for more customized responses. When configuring Agents, you can give your Agent specific instructions on what it is designed to do and how to interact. Using advanced prompts, you can provide your Agent with additional instructions at each step of the orchestration of your application.

There were 4 different ‘Q’ services announced during the Keynote. The first was Amazon Q.

Amazon Q is a generative AI-powered assistant powered by Amazon Bedrock that is designed and tailored specifically for your own internal business. Using your own company data to form a part of its knowledge base, it can be used to help you resolve queries, solve problems, and take action, all by interacting with Q via a chat prompt. By understanding your business processes, policies and more, it can be used by anyone to help you refine, streamline, and optimize your day to day operations and tasks by getting fast and immediate information found throughout your organization’s documentation and information. Connecting it to company data sources is made easy using 40 built-in connectors that connect to platforms such as Slack, Github, Microsoft Teams, and Jira.

Falling under the same umbrella as Amazon Q, this is a new AI-assistant offering that will allow application developers to streamline and simplify the daunting tasks that are involved when it comes to upgrading your application code. Application upgrades of code can take days or even weeks depending on how many applications you need to upgrade; however, using Amazon Q Code Transformation, this can be reduced to just minutes. If you are running Java version 8 or 11 application code, then you can use Amazon Q Code Transformation to upgrade your code to Java version 17. AWS is also working on the capability to also upgrade from Windows-based .NET frameworks to cross-platform .NET in the coming months. When automating code upgrades, Code Transformation will analyze your existing code, formulate a plan, update packages and dependencies, deprecate inefficient code elements, and adopt security best practices. Upon completion, you are able to review and accept any changes before deploying the upgrade into your production environment.

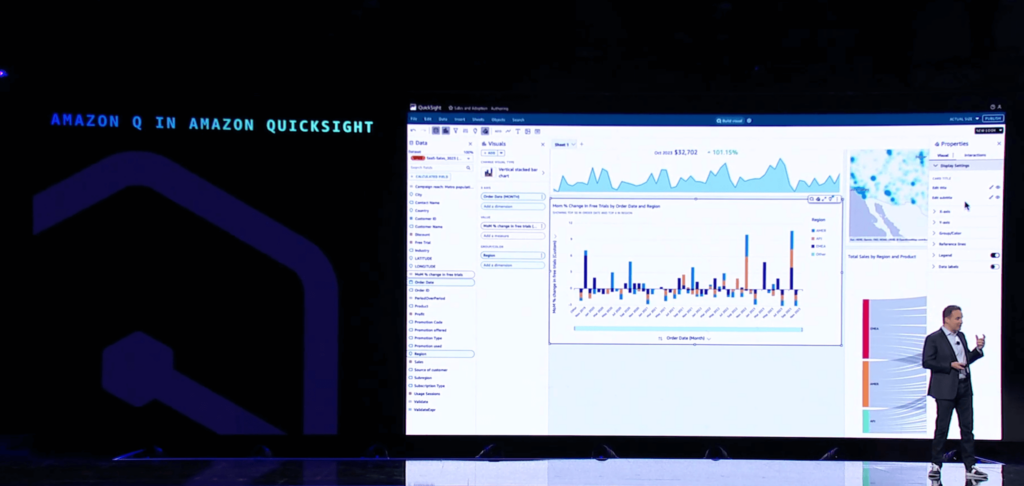

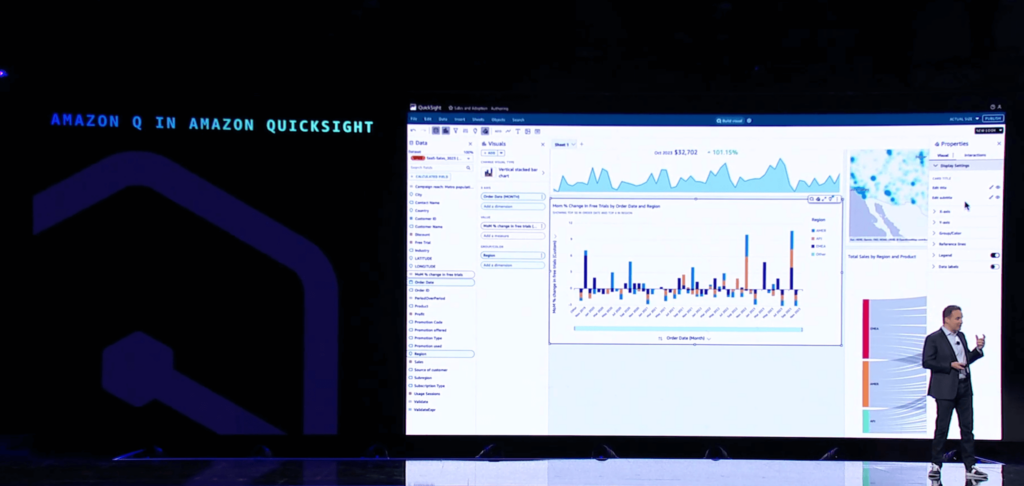

The 3rd Amazon Q announcement brings you Amazon Q in Amazon QuickSight, providing you a generative AI-powered business intelligence assistant. This makes understanding your data within QuickSight easy to navigate and present. Asking Q to provide, discover, and analyze data gets you the results you need quickly and conveniently thanks to natural language processing of your user input. You can continue to converse with Q in QuickSight, refining your requirements based on the results as if you were having a conversation as it contextualizes your previous requests. This enables anyone to be able to create their own dashboards, collect visuals, and gain actionable insights from your company data without requiring BI teams to perform any data manipulation tasks for you.

The final installment of Amazon Q was Amazon Q in Amazon Connect, which is designed to enhance the experience between contact centers and their customers using large language models (LLMs). LLMs are used by generative AI to generate text based on a series of probabilities, enabling them to predict, identify, and translate consent. They are often used to summarize large blocks of text, to classify text to determine its sentiment, and to create chatbots and AI assistants. This enables Amazon Q in Amazon Connect to detect customer intent and use data sources containing organizational information, such as product manuals or catalog references, to respond with recommended content for the customer support agent to communicate back to the customer. These recommended responses and actions are delivered quickly, helping to reduce the waiting time for the customer and increase customer satisfaction, enhancing the customer experience.

The final announcement involving generative AI was Amazon DataZone AI recommendations. Now available in preview, this new feature enhances Amazon DataZone’s ability to generate a catalog for your business data that’s stored in S3, RDS, or third-party applications like Salesforce and Snowflake by leveraging generative AI and LLMs within Amazon Bedrock to create meaningful business descriptions for your data and its schemas. Previously, DataZone could only generate table and column names for your business data catalog. This added capability provides additional context by describing the meaning of the fields within your tables and their schemas, helping data scientists and engineers analyze data that may not otherwise have enough metadata to properly clarify its meaning. This promises to streamline the process of data discovery and analysis, making your business data more accessible and easier to understand.

The final two announcements both involved new zero-ETL integrations with AWS services, the first being Amazon DynamoDB zero-ETL integration with Amazon OpenSearch Service. ETL, short for “extract, transform, and load,” refers to the often time-consuming and expensive process of building data pipelines to sanitize and normalize data that may come from many disparate sources in order to perform further analysis on the data or to use it in your AI or ML workloads. This new feature is now generally available and allows you to leverage the power of Amazon OpenSearch Service, including full-text, fuzzy, and vector search, to query data you have stored in DynamoDB without needing to build a costly ETL pipeline first. You can enable this integration directly within the DynamoDB console, which leverages DynamoDB Streams and point-in-time recovery to synchronize data using Amazon OpenSearch Ingestion. You can specify mappings between fields in multiple DynamoDB tables and Amazon OpenSearch Service indexes. According to AWS, data in DynamoDB will be synchronized to your Amazon OpenSearch Service managed cluster or serverless collection within seconds.

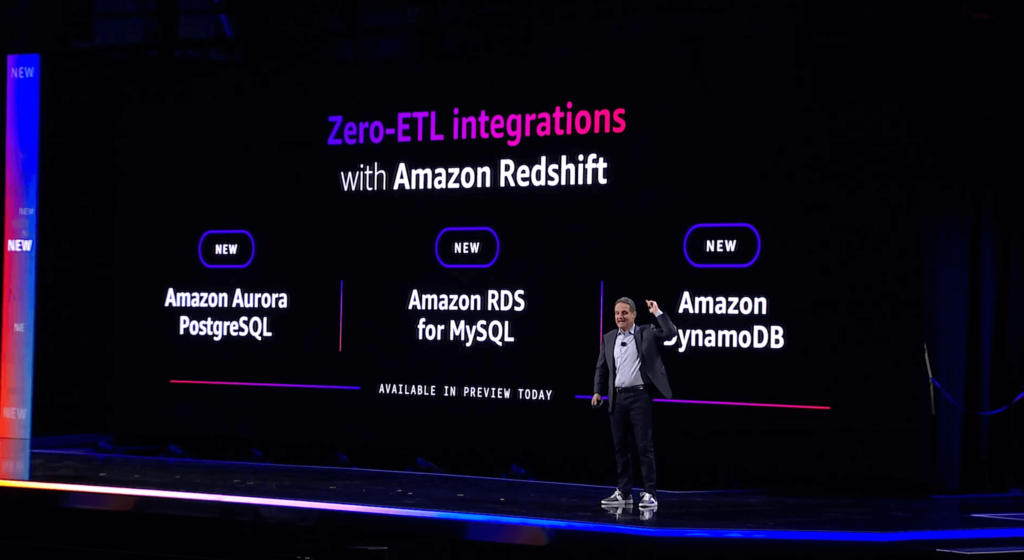

Last but not least are some additional new zero-ETL integrations, this time with Amazon Redshift. Many organizations leverage Redshift to perform data analytics, but have generally needed to build ETL pipelines to connect data from sources such as Amazon Aurora, RDS, and DynamoDB. With this announcement, customers can now take advantage of new features to replicate their data from the following sources directly into Amazon Redshift:

All of these integrations offer fully-managed solutions for replicating your data into Redshift data warehouses, unlocking the potential for data analysts to gain insight into your business data using analytics queries and machine learning models.