How do you bind together tools like Jenkins, JUnit, Maven, GitHub, and S3 – along with Docker and Dockerfiles – to make the continuous integration process actually work?

In an earlier post on continuous integration using Docker, I wrote about the ingredients you’ll need for effective Docker/Jenkins deployments. Now I’ll discuss how the continuous integration process actually works.

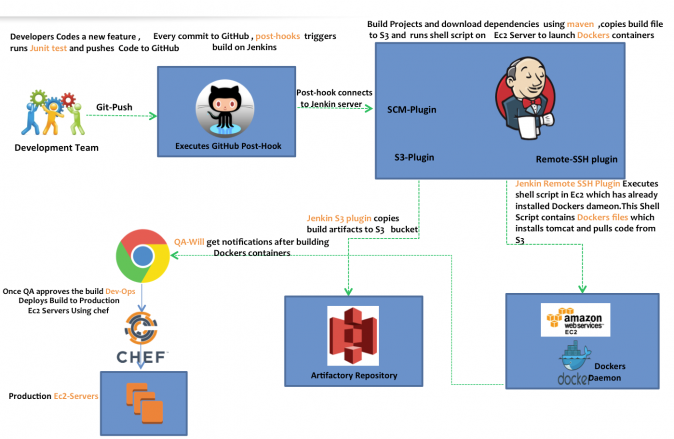

Continuous integration: the deployment process

The process starts when a developer checks out code from GitHub and adds a new feature. After a successful build on the local development system, the developer runs feature and performance tests using JUnit.

Once the JUnit tests pass, the developer pushes the new code back up to GitHub. A post-receive hook (configured on GitHub) then notifies the Jenkins server. Each check-in is verified by an automated build, so teams detect problems early. By integrating regularly, you can detect errors quickly and locate them more easily.

Using the Jenkins GitHub Plugin, you can automatically trigger build jobs when pushes are made to GitHub.
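To make the trigger concrete, here is a minimal Python sketch of the two checks a receiver of GitHub's push webhook typically performs. The Jenkins GitHub Plugin handles this for you, so this is illustrative only and not the plugin's implementation. It assumes a webhook secret has been configured, in which case GitHub signs each delivery in the `X-Hub-Signature-256` header. The function names, the example secret, and the `main` branch are hypothetical.

```python
import hashlib
import hmac
import json


def signature_is_valid(secret: bytes, body: bytes, header_value: str) -> bool:
    """Check the X-Hub-Signature-256 header against the raw request body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking timing information about the digest.
    return hmac.compare_digest(expected, header_value)


def should_build(body: bytes, branch: str = "main") -> bool:
    """Return True when the push event targets the branch we build from."""
    payload = json.loads(body)
    return payload.get("ref") == f"refs/heads/{branch}"


if __name__ == "__main__":
    secret = b"example-secret"  # hypothetical shared secret
    body = json.dumps({"ref": "refs/heads/main"}).encode()
    header = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    print(signature_is_valid(secret, body, header), should_build(body))
```

Filtering on the `ref` field is one way to build only from the integration branch while ignoring pushes to feature branches.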

The Jenkins server uses Maven to build its Java projects. Using Maven only requires that you create a pom.xml file and place your source code in the default directory (src/main/java). Maven will automatically take care of the rest.
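As a small illustration of that convention, the following Python sketch checks whether a project directory has the two things Maven's standard layout expects before a build is attempted. The helper name `missing_maven_paths` is hypothetical, and the check is only a pre-flight aid, not something Maven or Jenkins requires.

```python
from pathlib import Path

# Paths Maven's standard directory layout expects, relative to the project root.
REQUIRED_PATHS = ("pom.xml", "src/main/java")


def missing_maven_paths(project_root: Path) -> list[str]:
    """List the conventional paths that are absent from project_root."""
    return [rel for rel in REQUIRED_PATHS if not (project_root / rel).exists()]


if __name__ == "__main__":
    missing = missing_maven_paths(Path("."))
    print("layout ok" if not missing else f"missing: {missing}")
```

By the same convention, tests normally live in `src/test/java` and build output lands in `target/`.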

The Jenkins S3 plugin copies the build artifacts to an S3 bucket. The Jenkins remote SSH plugin then logs in to our EC2 instance, which has the Docker daemon installed, and executes a shell script.

This shell script includes a Dockerfile. Docker can build server images automatically by reading the instructions in a Dockerfile, a text document that contains all the commands you would normally execute manually to build the image.

The Dockerfile updates the OS, installs Tomcat and Java, pulls the code from S3, deploys it to our webapps directory, and, finally, restarts Tomcat.
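The Docker side of that script comes down to a handful of CLI calls. The Python sketch below assembles them as argument lists rather than running them, so it can be read and tested without a Docker daemon. It rests on several assumptions: the image is tagged with the Jenkins build number, the container is named after the application, and Tomcat listens on its default port 8080. The function and parameter names are hypothetical.

```python
import shlex


def docker_commands(app: str, build_number: int, context_dir: str = ".",
                    port: int = 8080) -> list[list[str]]:
    """Return the docker CLI calls for one build, in the order to run them."""
    image = f"{app}:{build_number}"  # assumed tagging scheme: name:build-number
    return [
        # Build a fresh image from the Dockerfile in context_dir.
        ["docker", "build", "-t", image, context_dir],
        # Remove the container left over from the previous build.
        ["docker", "rm", "-f", app],
        # Start the new container in the background and publish Tomcat's port.
        ["docker", "run", "-d", "--name", app, "-p", f"{port}:{port}", image],
    ]


if __name__ == "__main__":
    for cmd in docker_commands("webapp", 42):
        print(shlex.join(cmd))
```

On the very first build there is no earlier container to remove, so a real script should tolerate a failure of the second command.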

Once QA approves the build, the DevOps team uses a Chef cookbook to deploy the code to the production EC2 servers.

Why introduce Docker into the continuous integration workflow

- In development environments, we want to remain as close as possible to production. We also want the development environment to be as fast as possible for interactive use.

- Running multiple applications on the developer machine can slow it down. With Docker, you can easily launch a fresh server for every build.

- Maintain one dedicated Docker container for each application.

- Before VMs, bringing up a new hardware resource took days. Virtualization brought this down to minutes. Docker, by creating just a container for the process and not booting up an OS, brings it down to seconds.

- Ops benefits: only one standardized Dev environment to support.

- Management benefits: faster time to market; happier engineers.

- Developer benefits: detect problems early. Regular integration lets you detect and locate errors quickly.