Build powerful applications that see and understand the content of images with the Google Vision API

The Google Vision API was released last month, on December 2nd 2015, and it’s still in limited preview. You can request access to this limited preview program here and you should receive a very quick email follow-up.

I recently requested access with my personal Google Cloud Platform account in order to understand what types of analysis are supported. This also allows me to perform some tests.

Image analysis and features detection

The Google Vision API provides a RESTful interface that quickly analyses image content. This interface hides the complexity of continuously evolving machine learning models and image processing algorithms.

These models will improve overall system accuracy – especially as far as object detection – since new concepts almost certainly will be introduced in the system over time.

In more detail, the API lets you annotate images with the six following features.

- LABEL_DETECTION: executes Image Content Analysis on the entire image and provides relevant labels (i.e. keywords & categories).

- TEXT_DETECTION: performs Optical Character Recognition (OCR) and provides the extracted text, if any.

- FACE_DETECTION: detects faces, provides facial key points, main orientation, emotional likelihood, and the like.

- LANDMARK_DETECTION: detects geographic landmarks.

- LOGO_DETECTION: detects company logos.

- SAFE_SEARCH_DETECTION: determines image safe search properties on the image (i.e. the likelihood that an image might contain violence or nudity).

You can annotate all these features at once (i.e. with a single upload), although the API seems to respond slightly faster if you focus on one or two features at a time.

At this time, the API only accepts a series of base64-encoded images as input, but future releases will be integrated with Google Cloud Storage so that API calls won’t require image uploads at all. This will offer substantially faster invocation.

Label Detection – Scenarios and examples

Label detection is definitely the most interesting annotation type. This feature adds semantics to any image or video stream by providing a set of relevant labels (i.e. keywords) for each uploaded image. Labels are selected among thousands of object categories and mapped to the official Google Knowledge Graph. This allows image classification and enhanced semantic analysis, understanding, and reasoning.

Technically, the actual detection is performed on the image as a whole, although an object extraction phase may be executed in advance on the client in order to extract a set of labels for each single object. In this case, each object should be uploaded as an independent image. However, this may lead to lower-quality results if the resolution isn’t high enough, or if the object context is more relevant than the object itself — for the application’s purpose.

So what do label annotations look like?

"labelAnnotations": [

{

"score": 0.99989069,

"mid": "/m/0ds99lh",

"description": "fun"

},

{

"score": 0.99724227,

"mid": "/m/02jwqh",

"description": "vacation"

},

{

"score": 0.63748151,

"mid": "/m/02n6m5",

"description": "sun tanning"

}

]

The API returns something very similar to the JSON structure above for each uploaded image. Each label is basically a string (the description field) and comes with a relevance score (0 to 1) and a Knowledge Graph reference.

You can specify how many labels the API should return at request time (3 in this case) and the labels will be sorted by relevance. I could have asked for 10 labels and then thresholded their relevance score to 0.8 in order to consider only highly relevant labels in my application (in this case only two labels would have been used).

Here is an example of the labels given by the Google Vision API for the corresponding image:

The returned labels are: “desk, room, furniture, conference hall, multimedia, writing.”

The first label – “desk” – had a relevance score of 0.97, while the last one – “writing” – had a score of 0.54.

I have programmatically appended the annotations to the input image (with a simple Python script). You can find more Label Detection examples on this public gist.

Personally, I found the detection accurate on every image I uploaded. In some cases though, no labels were returned at all, and very few labels sounded misleading even with a relevance score above 0.5.

Text Detection – OCR as a Service

Optical Character Recognition is not a new problem in the field of image analysis, but it often requires high-resolution images, very little perspective distortion and an incredibly precise text extraction algorithm. In my personal experience, the character classification step is actually the easiest one, and there are plenty of techniques and benchmarks in the literature.

In the case of the Google Vision API, everything is encapsulated in a REStful API that simply returns a string and its bounding box. As I would have expected from Google, the API is able to recognize multiple languages, and will return the detected locale together with the extracted text.

Here is an example of a perfect extraction:

The API response looks very similar to the following JSON structure:

"textAnnotations": [

{

"locale": "en",

"description": "Sometimes\nwhen I Am\nAlone I Google\nMyself\n",

"boundingPoly": {

"vertices": [

{"y": 208, "x": 184},

{"y": 208, "x": 326},

{"y": 314, "x": 326},

{"y": 314, "x": 184}

]

}

}

]

Only one bounding box is detected, in the English language, and the textual content is even split by break lines.

I have been running a few more examples in which much more text was detected in different areas of the image, but it was all collapsed into a single (and big) bounding box, where each text was separated by a break line. This doesn’t make the task of extracting useful information easy, and the Google team is already gathering feedback about this on the official limited preview Google Group (of which I’m proud to be a part).

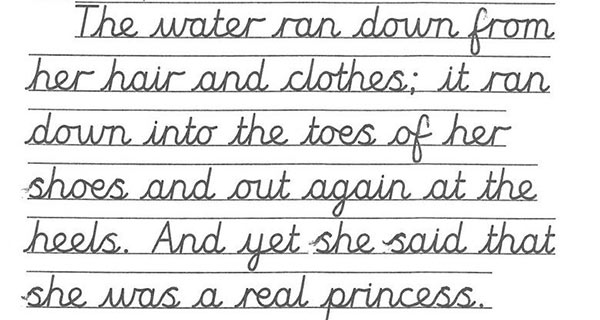

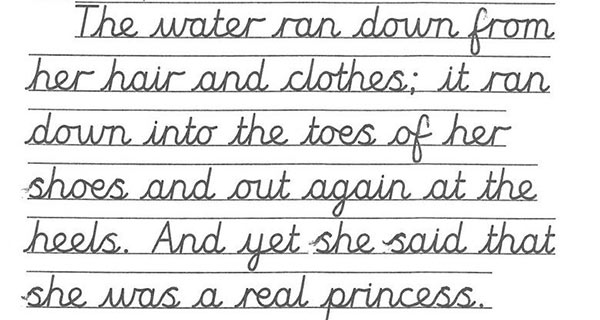

What about handwritten text and CAPTCHAs?

Apparently, the quality is not optimal for handwritten text, although I believe it’s more than adequate for qualitative analysis or generic tasks, such as document classification.

Here is an example:

With the corresponding extracted text:

water ran down

her hair and clothes it ran

down into the toes Lof her

shoes and out again at the

heels. And het she said

that

Mwas a real princess

As I mentioned, it’s not perfect, but it would definitely help most of us a lot.

On the other hand, CAPTCHAs recognition is not as easy. It seems that crowdsourcing is still a better option for now. 😉

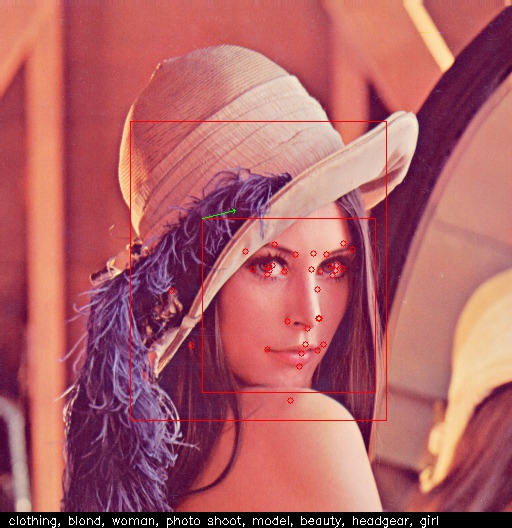

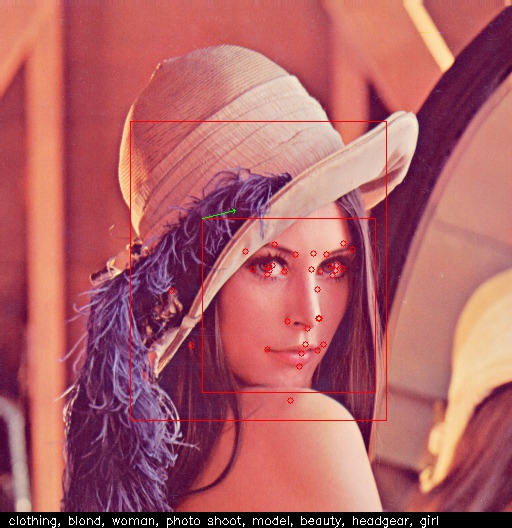

Face Detection – Position, orientation, and emotions

Face detection aims at localizing human faces inside an image. It’s a well-known problem that can be categorized as a special case of a general object-class detection problem. You can find some interesting data sets here.

I would like to stress two important points:

- It is NOT the same as Face Recognition, although the detection/localization task can be thought of as one of the first steps in the process of recognizing someone’s face. This typically involves many more techniques, such as facial landmarks extraction, 3D analysis, skin texture analysis, and others.

- It usually targets human faces only (yes, I have tried primates and dogs with very poor results).

If you ask the Google Vision API to annotate your images with the FACE_DETECTION feature, you will obtain the following:

- The face position (i.e. bounding boxes);

- The landmarks positions (i.e. eyes, eyebrows, pupils, nose, mouth, lips ears, chin, etc.), which include more than 30 points;

- The main face orientation (i.e. roll, pan, and tilt angles);

- Emotional likelihoods (i.e. joy, sorrow, anger, surprise, etc), plus some additional information (under exposition likelihood, blur likelihood, headwear likelihood, etc.).

Here is an example of face recognition, where I have programmatically rendered the extracted information on the original image. In particular, I am framing each face into the corresponding bounding boxes, rendering each landmark as a red dot and highlighting the main face orientation with a green arrow (click for higher resolution).

As you can see, every face is correctly detected and well localized. The precision is pretty high even with numerous faces in the same picture, and the orientation is also accurate.

In this example, just looking at the data, we might infer that the picture contains 5 happy people who are most likely facing something or someone around the center of the image. If you complement this analysis with label detection, you would obtain “person” and “team” as most relevant labels, which would give your software a pretty accurate understanding of what is going on.

You can find more face detection example on this public gist.

Alpha testing Conclusions

Although still in limited preview, the API is surprisingly accurate and fast: queries takes just milliseconds to execute. The processing takes longer with larger images, mostly because of the upload time.

I am looking forward to the Google Cloud Storage integration, and to the many improvements already suggested on the Google Group by the many active alpha testers.

I didn’t focus much on the three remaining features yet – geographic landmarks detection, logo detection and safe search detection – but the usage of such features will probably sound straightforward to most of you. Please feel free to reach out or drop a comment if you have doubts or tests suggestions.

You can find all the Python utilities I used for these examples here.

If you are interested in Machine Learning technologies and Google Cloud Platform, you may want to have a look at my previous article about Google Prediction API as well.