Amazon S3 vs Amazon Glacier: which AWS storage tool should you use?

When you set out to design your first AWS (Amazon Web Services) hosted application, you will need to consider the possibility of data loss.

While you may have designed a highly resilient and durable solution, this won’t necessarily protect you from administrative mishaps, data corruption, or malicious attacks against your system. These risks can only be mitigated with an effective backup strategy.

Thanks to Amazon’s Simple Storage Service (S3) and its younger sibling, Amazon Glacier, you have the right services at hand to establish a cost-effective, yet practical backup solution.

So, what about Amazon S3 vs Amazon Glacier?

Unlike Amazon’s Elastic Block Store (EBS) or the local file system of your traditional PC, where data is managed in a directory hierarchy, Amazon S3 treats data as individual objects.

The abstraction away from the lower layers of storage and the separation of data from its metadata come with a number of benefits. For one, Amazon can provide a highly durable storage service for a fraction of the cost of block storage. You also pay only for the amount of storage you actually use, so you don’t need to second-guess and pre-allocate disk space.

Amazon S3 vs Amazon Glacier

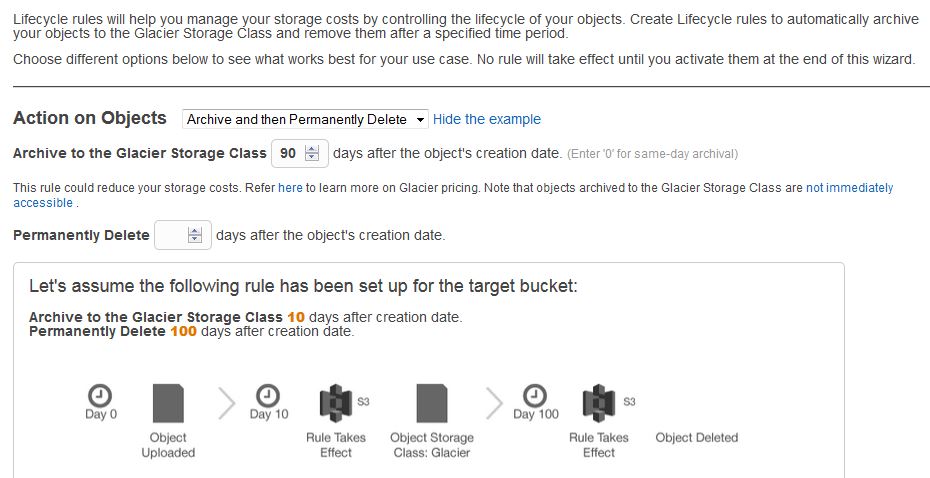

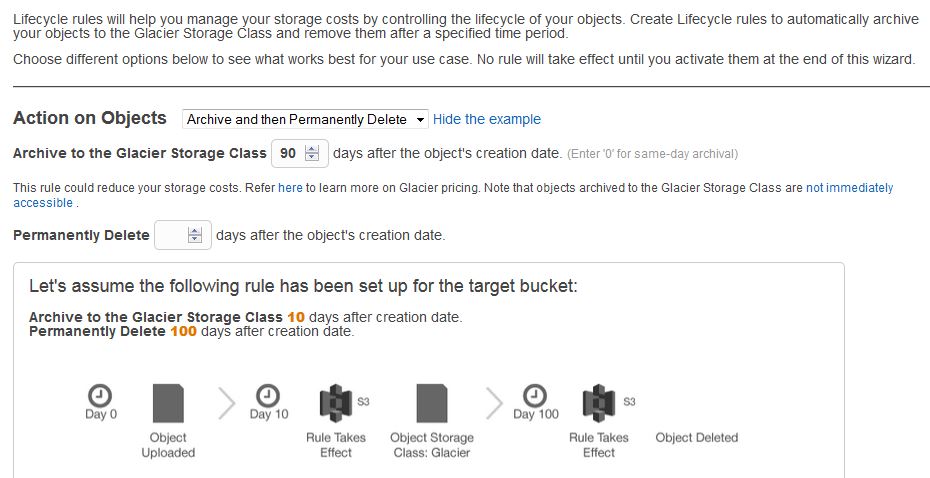

Lifecycle rules within S3 allow you to manage the life cycle of the objects stored on S3. After a set period of time, you can have your objects either automatically deleted or archived off to Amazon Glacier.
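As a rough illustration of such a rule, the sketch below builds a lifecycle configuration in the shape that boto3's `put_bucket_lifecycle_configuration` call accepts. The rule ID, the `backups/` prefix, and the 30- and 365-day thresholds are assumptions chosen for the example, not values from this post.

```python
import json


def build_lifecycle_configuration(prefix, archive_after_days, delete_after_days):
    """Return a lifecycle configuration that archives, then expires, objects."""
    if delete_after_days <= archive_after_days:
        raise ValueError("objects must be archived before they are deleted")
    return {
        "Rules": [
            {
                "ID": "archive-then-expire",  # hypothetical rule name
                "Filter": {"Prefix": prefix},  # only objects under this prefix
                "Status": "Enabled",
                # Move objects to the Glacier storage class after N days.
                "Transitions": [
                    {"Days": archive_after_days, "StorageClass": "GLACIER"}
                ],
                # Delete objects entirely after M days.
                "Expiration": {"Days": delete_after_days},
            }
        ]
    }


config = build_lifecycle_configuration("backups/", 30, 365)
print(json.dumps(config, indent=2))

# Applying the rule needs boto3 and valid credentials, for example:
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="MyBucketName", LifecycleConfiguration=config)
```

Keeping the configuration as plain data makes it easy to review or version-control before it is applied to a bucket.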

Amazon Glacier is marketed by AWS as “extremely low-cost storage”. The cost per terabyte of storage per month is again only a fraction of the cost of S3. Amazon Glacier is pretty much designed as a write once, retrieve never (or rather rarely) service. This is reflected in the pricing, where extensive restores come at an additional cost and the restore of objects requires lead times of up to 5 hours.

Amazon S3 with Glacier vs. Amazon Glacier

At this stage, we need to highlight the difference between the ‘pure’ Amazon Glacier service and the Glacier storage class within Amazon S3. S3 objects that have been moved to Glacier storage using S3 Lifecycle policies can only be accessed (or, rather, restored) using the S3 API endpoints. As such, they are still managed as objects within S3 buckets, instead of Archives within Vaults, which is the Glacier terminology.
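To make the "restored using the S3 API" point concrete, here is a minimal sketch of requesting a restore through boto3's S3 client. The helper names, the 7-day availability window, and the `Standard` retrieval tier are assumptions for illustration; the client is passed in so the functions do not depend on how credentials are configured.

```python
def request_restore(s3_client, bucket, key, days=7, tier="Standard"):
    """Ask S3 to make a temporary copy of an archived object available."""
    return s3_client.restore_object(
        Bucket=bucket,
        Key=key,
        RestoreRequest={
            "Days": days,  # how long the restored copy stays readable
            "GlacierJobParameters": {"Tier": tier},
        },
    )


def is_restore_finished(s3_client, bucket, key):
    """Return True once head_object reports that the restore has completed."""
    response = s3_client.head_object(Bucket=bucket, Key=key)
    # While a restore is running the header reads ongoing-request="true".
    return 'ongoing-request="false"' in response.get("Restore", "")


# Example usage (needs boto3 and valid credentials; the key is hypothetical):
#   import boto3
#   s3 = boto3.client("s3")
#   request_restore(s3, "MyBucketName", "backups/archive.tar")
#   print(is_restore_finished(s3, "MyBucketName", "backups/archive.tar"))
```

Because a restore can take hours, a backup tool would typically issue the request once and poll the status later rather than block on it.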

This differentiation is important when you look at the costs of the services. While Amazon Glacier is much cheaper than S3 on storage, charges are approximately ten times higher for archive and restore requests. This reinforces the store once, retrieve seldom pattern.

Amazon also reserves 32 KB for metadata per Archive within Glacier, instead of 8 KB per Object in S3, both of which are charged back to the user. This is important to keep in mind for your backup strategy, particularly if you are storing a large number of small files. If those files are unlikely to require restoring in the short term, it may be more cost-effective to combine them into an archive and store them directly within Amazon Glacier.
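A quick back-of-the-envelope calculation shows why this matters for small files. The sketch below uses the 32 KB per-archive figure quoted above; the file count and file size are hypothetical, and no prices are assumed.

```python
KB = 1024
GLACIER_OVERHEAD_PER_ARCHIVE = 32 * KB  # per-archive metadata figure quoted above


def metadata_overhead_bytes(archive_count):
    """Total metadata overhead for a given number of Glacier archives."""
    return archive_count * GLACIER_OVERHEAD_PER_ARCHIVE


def overhead_ratio(file_count, file_size_bytes):
    """Overhead relative to payload when every file is its own archive."""
    payload = file_count * file_size_bytes
    return metadata_overhead_bytes(file_count) / payload


file_count = 1_000_000  # hypothetical number of small files
file_size = 4 * KB      # hypothetical size of each file

individually = metadata_overhead_bytes(file_count)  # one archive per file
bundled = metadata_overhead_bytes(1)                # all files in one archive

print(f"one archive per file: {individually / KB**3:.1f} GiB of overhead")
print(f"single bundled archive: {bundled / KB:.0f} KiB of overhead")
print(f"overhead is {overhead_ratio(file_count, file_size):.0f}x the payload")
```

Under these assumptions the metadata overhead is eight times larger than the data itself, which is why bundling small files into one archive pays off.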

Tooling

Fortunately, there is a large variety of tools available on the web that allow you to use the AWS S3 and Glacier services to create backups of your data. They range from stand-alone tools for a local PC to enterprise storage solutions.

Just bear in mind that whatever third-party tool you are using, you will need to give it access to your AWS account. Make sure the backup tool gets only the minimum access it needs to perform its duties. For this reason, it is best to issue a separate set of access keys for the tool.

You may also want to consider backing up your data to an entirely independent AWS account. Depending on your individual risk profile, and considering that your backups tend to provide the last-resort recovery option after a major disaster, it may be wise to keep those concerns separated, particularly to protect yourself against cases like that of Code Spaces, which kept all its data within a single account that was eventually destroyed.

For reference, I have included instructions below for configuring dedicated backup credentials using my backup tool of choice: CloudBerry.

AWS Identity and Access Management

Identity and Access Management (IAM) allows you to manage users and groups and to define precise policies for access to the various services and resources. To get started, log on to the AWS Management Console and open the link for IAM. This opens the IAM Dashboard. From the Dashboard, navigate to Users and select the Create New Users option. Selecting the “Generate an access key for each User” option ensures that an access key is issued for each user at creation time. If you skip this step, an access key can also be issued later.

After confirming your choice, you will be given the opportunity to download the Security Credentials, consisting of a unique Access Key identifier and the Secret Access Key itself. Naturally, the Secret Access Key should be stored in a secure place.

By default, new users will not have access to any of the account’s resources. Access is granted explicitly by attaching an IAM policy directly to a user account, or by adding the user to a group with an IAM policy attached. To attach a policy to a user or group, open the entry and scroll down to the Permissions section. Here you can either create an inline policy for the entry or attach a managed policy object to the user or group.
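The same console steps can also be scripted. The following is a minimal sketch using boto3's IAM client calls `create_user`, `create_access_key`, and `put_user_policy`; the function name, user name, and policy name are hypothetical, and the policy document is whatever you decide to grant the backup tool.

```python
import json


def create_backup_user(iam_client, user_name, policy_name, policy_document):
    """Create a dedicated user, issue one access key, attach an inline policy."""
    iam_client.create_user(UserName=user_name)

    # The secret is only returned at creation time, so capture it here.
    key = iam_client.create_access_key(UserName=user_name)["AccessKey"]

    # New users have no permissions until a policy is attached explicitly.
    iam_client.put_user_policy(
        UserName=user_name,
        PolicyName=policy_name,
        PolicyDocument=json.dumps(policy_document),
    )
    return key["AccessKeyId"], key["SecretAccessKey"]


# Example usage (needs boto3 and credentials allowed to manage IAM):
#   import boto3
#   iam = boto3.client("iam")
#   key_id, secret = create_backup_user(
#       iam, "backup-tool", "backup-bucket-access", my_policy_document)
```

Treat the returned secret like a password: hand it to the backup tool and store it somewhere safe rather than printing or logging it.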

IAM policies allow for very granular access to AWS resources, so I won’t go into too much detail here. Policies can be defined using pre-built templates or through the policy generation tool. For the purpose of giving your backup tool write-access to your AWS S3 bucket, just select the Custom Policy Option.

The policy below grants three different sets of rights:

- Access to AWS S3 to list all buckets for the account,

- Access to the bucket MyBucketName, and

- The ability to read, write and delete objects within the MyBucketName bucket.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": ["s3:GetBucketLocation", "s3:ListAllMyBuckets"],
          "Resource": "arn:aws:s3:::*"
        },
        {
          "Effect": "Allow",
          "Action": ["s3:ListBucket"],
          "Resource": ["arn:aws:s3:::MyBucketName"]
        },
        {
          "Effect": "Allow",
          "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
          "Resource": ["arn:aws:s3:::MyBucketName/*"]
        }
      ]
    }

If you don’t want to give list access to all available buckets within your account, just omit the first object in the Statement array, shown here (this will mean, however, that a bucket name cannot be selected within the application):

    {
      "Effect": "Allow",
      "Action": ["s3:GetBucketLocation", "s3:ListAllMyBuckets"],
      "Resource": "arn:aws:s3:::*"
    },
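If you manage several buckets, you may prefer to generate this policy rather than edit it by hand. The sketch below reproduces the three statements described above for an arbitrary bucket name; the function name and the `allow_bucket_listing` flag are hypothetical, with the flag corresponding to leaving out the first statement.

```python
import json


def build_backup_policy(bucket_name, allow_bucket_listing=True):
    """Build the backup policy for one bucket as a Python dict."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    statements = []

    if allow_bucket_listing:
        # Lets the backup tool show a list of buckets to choose from.
        statements.append({
            "Effect": "Allow",
            "Action": ["s3:GetBucketLocation", "s3:ListAllMyBuckets"],
            "Resource": "arn:aws:s3:::*",
        })

    # List the contents of the one backup bucket.
    statements.append({
        "Effect": "Allow",
        "Action": ["s3:ListBucket"],
        "Resource": [bucket_arn],
    })

    # Read, write and delete objects inside that bucket.
    statements.append({
        "Effect": "Allow",
        "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
        "Resource": [f"{bucket_arn}/*"],
    })

    return {"Version": "2012-10-17", "Statement": statements}


full_policy = build_backup_policy("MyBucketName")
restricted_policy = build_backup_policy("MyBucketName", allow_bucket_listing=False)
print(json.dumps(restricted_policy, indent=2))
```

The printed JSON can be pasted into the Custom Policy editor as-is.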

You can learn more about IAM by taking our IAM course on CloudAcademy.com.

Finally, Amazon S3 vs Amazon Glacier?

While this post primarily focused on backup options for your hosted environments, this is just one possible example. Since Amazon S3 and Glacier are available worldwide through public API endpoints, the possibilities are nearly limitless. Additionally, enterprises can make use of the AWS Storage Gateway to back up their on-premises data in AWS. For further reading, see the post Amazon Storage Options.

Well-known enterprise backup software from vendors like Commvault, EMC or Symantec also provides options to use Amazon’s cloud storage as an additional storage tier within your backup strategy.