As Big Data evolves, more tools and technologies aimed at helping enterprises cope are coming online. Live data needs special attention, because delayed processing can affect its value: a Twitter trend will attract more attention if it is associated with something going on right now; a logging system alert is only useful while the error still exists. To tame huge volumes of time-sensitive streaming data, AWS created Amazon Kinesis.

Amazon Kinesis is a fully managed, real-time, event-driven processing system that offers highly elastic, scalable infrastructure. It is designed to process massive amounts of real-time data generated from social media, logging systems, click streams, IoT devices, and more.

The open source Apache Kafka project actually shares some functionality with Amazon Kinesis. While Kafka is very fast (and free), it is still a bundled tool that needs installation, management, and configuration. If you would prefer to avoid the extra administrative burden and already have some AWS Cloud investment, then Kinesis may just be your new best friend.

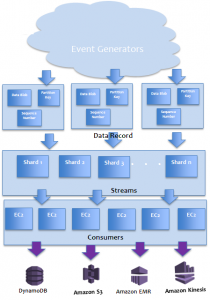

Amazon Kinesis Architecture

Data Records

Data Records contain the information from a single event. A data record consists of a sequence number, a partition key, and a data blob.

- Sequence numbers are assigned by Kinesis. Event consumers process the records in sequence number order.

- A partition key is an identifier chosen by the event producer. Kinesis hashes it to determine which shard a data record belongs to.

- Data blobs are the actual payload, containing content like log records, tweets, and RFID records. Data blobs do not have a particular format and can be as large as 50 KB.

Streams

Streams are the core building block of the Amazon Kinesis service. Data records are written to streams by event producers and read by event consumers. A stream is composed of one or more shards, and each shard is a logical subset of the data within the stream. Events in a stream are stored for 24 hours.

Kinesis is meant for real-time data processing, and in real-time processing a stale record has little value. Amazon Kinesis streams are identified by Amazon Resource Names (ARNs).

Shards

Shards are the objects to which event producers write data records and from which event consumers read them. Each shard receives data records according to its hash key range. Kinesis takes the partition key from each data record, hashes it (using MD5) into a 128-bit hash key, and assigns the record to the shard whose range contains that key.
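To make the mapping concrete, here is a minimal sketch of how a partition key could land on a shard. It relies on the MD5-to-128-bit mapping that AWS documents, but the class name `ShardRouter` and the assumption that the shards split the key space into equal, contiguous ranges are purely illustrative; a real stream can have uneven ranges.

```java
import java.math.BigInteger;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper for illustration only; not part of any AWS SDK.
class ShardRouter {
    // The hash key space covers 0 .. 2^128 - 1.
    static final BigInteger KEY_SPACE = BigInteger.ONE.shiftLeft(128);

    // MD5 yields 16 bytes, read here as an unsigned 128-bit integer.
    static BigInteger hashKey(String partitionKey) {
        try {
            MessageDigest md5 = MessageDigest.getInstance("MD5");
            byte[] digest = md5.digest(partitionKey.getBytes(StandardCharsets.UTF_8));
            return new BigInteger(1, digest);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is not available", e);
        }
    }

    // Assumption: shardCount shards with equal, contiguous hash key ranges.
    static int shardIndex(String partitionKey, int shardCount) {
        BigInteger rangeSize = KEY_SPACE.divide(BigInteger.valueOf(shardCount));
        int index = hashKey(partitionKey).divide(rangeSize).intValue();
        // The last shard absorbs any remainder left by integer division.
        return Math.min(index, shardCount - 1);
    }

    public static void main(String[] args) {
        for (String topic : new String[] {"sports", "politics", "music"}) {
            System.out.println(topic + " -> shard " + shardIndex(topic, 4));
        }
    }
}
```

Because the hash depends only on the partition key, every record with the same key goes to the same shard, which is what keeps related records together.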

The Kinesis user is responsible for shard allocation, and the number of shards determines the application throughput. According to AWS Kinesis documentation:

Each open shard can support up to 5 read transactions per second, up to a maximum total of 2 MB of data read per second. Each shard can support up to 1000 write transactions per second, up to a maximum total of 1 MB data written per second.

Shards are elastic in nature. You can increase or decrease the number of shards according to your load.
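As a rough sizing aid, the per-shard limits quoted above can be turned into a shard count estimate. This is only a sketch: the class and method names are made up for illustration, and it considers just the three limits mentioned here (1 MB/sec written, 1000 records/sec written, 2 MB/sec read).

```java
// Hypothetical sizing helper; limits are the per-shard figures quoted in the text.
class ShardSizing {
    static final double WRITE_MB_PER_SHARD = 1.0;
    static final double READ_MB_PER_SHARD = 2.0;
    static final int WRITE_RECORDS_PER_SHARD = 1000;

    // Returns the smallest shard count that satisfies all three limits.
    static int shardsNeeded(double writeMbPerSec, double readMbPerSec, int recordsPerSec) {
        int forWriteBytes = (int) Math.ceil(writeMbPerSec / WRITE_MB_PER_SHARD);
        int forReadBytes = (int) Math.ceil(readMbPerSec / READ_MB_PER_SHARD);
        int forWriteCount = (int) Math.ceil((double) recordsPerSec / WRITE_RECORDS_PER_SHARD);
        // A stream always has at least one shard.
        return Math.max(1, Math.max(forWriteBytes, Math.max(forReadBytes, forWriteCount)));
    }

    public static void main(String[] args) {
        // 5 MB/sec in, 5 MB/sec out, 100 records/sec
        System.out.println(shardsNeeded(5.0, 5.0, 100));
        // Small payloads but many records: the record-count limit dominates
        System.out.println(shardsNeeded(0.5, 1.0, 2500));
    }
}
```

Whichever limit demands the most shards wins, so a stream of many tiny records can need more shards than its raw bandwidth suggests.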

Kinesis Consumers

Kinesis consumers are typically Kinesis applications that run on clusters of EC2 instances. A Kinesis consumer uses the Amazon Kinesis Client Library to read data from a stream’s shards. In practice, the library retrieves the records and hands them to the Kinesis application for processing.

When Kinesis applications are created, they are automatically assigned to a stream, and the stream, in turn, associates the consumers with one or more shards. Consumers typically perform only lightweight tasks on data records before submitting them to Amazon DynamoDB, Amazon EMR, Amazon S3, or even a different Kinesis stream for further processing.

Consider a real-life Kinesis example involving a Twitter data analysis application: tweets are the data records, and all tweets together form the stream (i.e. the Twitter Firehose). The tweets are segregated by topic, so each topic name can be used as a partition key. All the tweets belonging to a set of Twitter topics are grouped together to form a shard.

Kinesis Operations

Amazon Kinesis operations are described here using the Java API. The following operations are performed using the Kinesis client API:

Add Data Record to Stream

Producers call PutRecord to push data to a stream. Each record should be less than 50 KB. To add a record, create a PutRecordRequest and pass {streamName, partitionKey, data} as input. You can also force strict ordering of records by calling setSequenceNumberForOrdering and passing the sequence number of the previous record.

Get Records from Shards

Retrieving records (up to 1 MB) from a shard requires a shard iterator. Create a GetRecordsRequest object and pass it to the getRecords method. Obtain the next shard iterator from the GetRecordsResult to make the next call to getRecords.

Resharding Streams

Resharding a stream splits or merges shards to match the dynamic event flow into the Kinesis stream. A single resharding operation always either splits one shard into two or merges two shards into one. As AWS Kinesis bills you per shard, merging two shards into one halves the cost of those shards (while splitting one shard into two doubles it). Resharding is an administrative process that can be triggered by CloudWatch monitoring metrics.
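In terms of hash key ranges, a split hands each child part of the parent’s range, and a merge joins two adjacent ranges back into one. The sketch below models just that bookkeeping. `HashKeyRange` is a hypothetical class, and splitting at the midpoint is an assumption made for simplicity (a split can be placed at other points in the range).

```java
import java.math.BigInteger;

// Hypothetical model of a shard's inclusive hash key range; not an AWS SDK type.
class HashKeyRange {
    final BigInteger start;
    final BigInteger end;

    HashKeyRange(BigInteger start, BigInteger end) {
        if (start.compareTo(end) > 0) {
            throw new IllegalArgumentException("start must not exceed end");
        }
        this.start = start;
        this.end = end;
    }

    // Split: two children that together cover exactly the parent's range.
    HashKeyRange[] splitAtMidpoint() {
        if (start.equals(end)) {
            throw new IllegalStateException("a single-key range cannot be split");
        }
        BigInteger mid = start.add(end).add(BigInteger.ONE).shiftRight(1);
        return new HashKeyRange[] {
            new HashKeyRange(start, mid.subtract(BigInteger.ONE)),
            new HashKeyRange(mid, end)
        };
    }

    // Merge: only adjacent ranges (no gap, no overlap) can be combined.
    static HashKeyRange merge(HashKeyRange lower, HashKeyRange upper) {
        if (!lower.end.add(BigInteger.ONE).equals(upper.start)) {
            throw new IllegalArgumentException("ranges are not adjacent");
        }
        return new HashKeyRange(lower.start, upper.end);
    }

    public static void main(String[] args) {
        BigInteger max = BigInteger.ONE.shiftLeft(128).subtract(BigInteger.ONE);
        HashKeyRange[] children = new HashKeyRange(BigInteger.ZERO, max).splitAtMidpoint();
        System.out.println("child 0 ends at   " + children[0].end);
        System.out.println("child 1 starts at " + children[1].start);
    }
}
```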

Kinesis Connectors

Amazon Kinesis offers three connector types: S3 Connector, Redshift Connector, and DynamoDB Connector.

Kinesis Pricing Model

Amazon Kinesis uses a pay-as-you-go pricing model based on two factors: Shard Hours and PUT Payload Units.

- Shard Hour. In Kinesis, a shard provides a capacity of 1 MB/sec data input and 2 MB/sec data output and can support up to 1000 records written per second. Users are charged for each shard at an hourly rate. The number of shards you need depends on your throughput requirements.

- PUT Payload Unit. PUT Payload Units are billed per million units. In Kinesis, one PUT Payload Unit is a 25 KB chunk of a record, rounded up. So, for example, if your record size is 30 KB, you are charged 2 PUT Payload Units. If your data record is 1 MB, you are charged for 40 PUT Payload Units.
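The rounding rule for PUT Payload Units is just a ceiling division by 25 KB. Here is a small sketch; the class and method names are illustrative, and 1 MB is treated as 1000 KB so that it matches the 40-unit figure above.

```java
// Hypothetical helper showing the 25 KB rounding rule for PUT Payload Units.
class PutPayload {
    static final int UNIT_KB = 25;

    // Every started 25 KB chunk of a record counts as one unit.
    static long payloadUnits(long recordSizeKb) {
        if (recordSizeKb <= 0) {
            throw new IllegalArgumentException("record size must be positive");
        }
        return (recordSizeKb + UNIT_KB - 1) / UNIT_KB; // integer ceiling division
    }

    public static void main(String[] args) {
        System.out.println("30 KB record:   " + payloadUnits(30) + " units");
        System.out.println("1000 KB record: " + payloadUnits(1000) + " units");
    }
}
```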

In the AWS standard region, a shard hour currently costs $0.015. So, for example, let’s say that your producer produces 100 records per second and each data record is 50 KB. This translates to 5 MB/second (5000 KB/second) of input to your Kinesis stream from the producer. As each shard supports 1 MB/sec (1000 KB/second) of input, we need 5 shards. So our shard cost per hour will be $0.075 (0.015*5). 24 hours of processing would therefore cost us $1.80.

Moreover, we need 2 PUT Payload Units for each data record (1 PUT Payload Unit = one 25 KB chunk). Again, we’re producing 100 records per second, so we are charged 200 PUT Payload Units per second. That comes to 720,000 PUT Payload Units per hour and 17,280,000 per day. At $0.014 per million PUT Payload Units, the cost will be $0.24192 (17,280,000/1,000,000 * 0.014).

So we will be charged a total of $2.04192 per day (1.80 + 0.24192) for our data processing.
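If you want to rerun this estimate with your own numbers, the arithmetic can be sketched as follows. It uses only the inputs stated in the example (100 records per second, 50 KB each, $0.015 per shard hour, $0.014 per million PUT Payload Units); the class name is made up, and the shard count is sized on write bandwidth alone, as in the walkthrough.

```java
// Hypothetical cost estimator built from the rates used in the worked example.
class KinesisDailyCost {
    static final double SHARD_HOUR_USD = 0.015;
    static final double PER_MILLION_UNITS_USD = 0.014;
    static final long UNIT_KB = 25;
    static final long SHARD_WRITE_KB_PER_SEC = 1000;
    static final long SECONDS_PER_DAY = 24L * 60 * 60;

    // Shards needed for the write bandwidth (ceiling division).
    static long shards(long recordsPerSec, long recordKb) {
        long kbPerSec = recordsPerSec * recordKb;
        return (kbPerSec + SHARD_WRITE_KB_PER_SEC - 1) / SHARD_WRITE_KB_PER_SEC;
    }

    // PUT Payload Units accumulated over 24 hours.
    static long unitsPerDay(long recordsPerSec, long recordKb) {
        long unitsPerRecord = (recordKb + UNIT_KB - 1) / UNIT_KB;
        return unitsPerRecord * recordsPerSec * SECONDS_PER_DAY;
    }

    static double shardCostPerDay(long shardCount) {
        return shardCount * SHARD_HOUR_USD * 24;
    }

    static double putCostPerDay(long unitsPerDay) {
        return unitsPerDay / 1_000_000.0 * PER_MILLION_UNITS_USD;
    }

    public static void main(String[] args) {
        long shardCount = shards(100, 50);
        long units = unitsPerDay(100, 50);
        double total = shardCostPerDay(shardCount) + putCostPerDay(units);
        System.out.printf("shards=%d, units/day=%d, total=$%.5f per day%n",
                shardCount, units, total);
    }
}
```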

A few Amazon Kinesis use cases:

- Real-time data processing.

- Application log processing.

- Complex Directed Acyclic Graph (DAG) processing.

With the power of real-time data processing through a managed service from AWS, Amazon Kinesis is well suited to storing and analyzing data from social media streams, website clickstreams, financial transaction logs, application or server logs, sensors, and much more.

To gain a better understanding of Amazon Kinesis and get started with building streaming solutions, take a look at Cloud Academy’s intermediate course on Amazon Kinesis.

Have you used Kinesis yet? Why not share your experience?