Building a serverless architecture for data collection with AWS Lambda

AWS Lambda is a natural fit for managing a data collection pipeline and for implementing a serverless architecture. In this post, we’ll discover how to build a serverless data pipeline in three simple steps using AWS Lambda Functions, Kinesis Streams, Amazon Simple Queue Service (SQS), and Amazon API Gateway!

Data generated by web and mobile applications is usually stored either in a file or in a database (often a data warehouse). Because the data comes from different sources, such as the back-end server or the front-end UI, it is often very heterogeneous. Furthermore, this data must typically be synced with multiple services such as a CRM (e.g., Hubspot), an email provider (e.g., Mailchimp), or an analytics tool (e.g., Mixpanel). The process must also be backward compatible and avoid data loss. For these reasons, building and managing a custom infrastructure that can handle the collection of such data can be very expensive.

I would like to propose a solution based on AWS Cloud services with the goal of creating a serverless architecture. This approach also fits a typical use case in which the data flow involves thousands of events per minute or more.

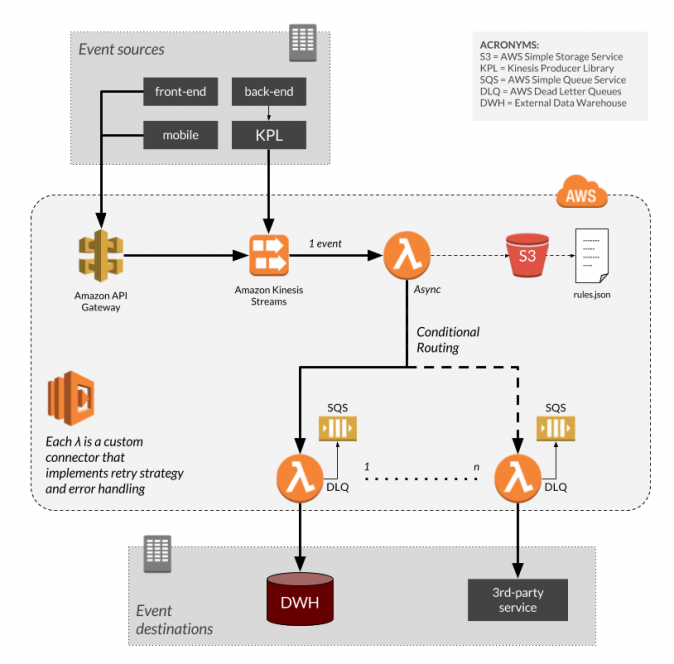

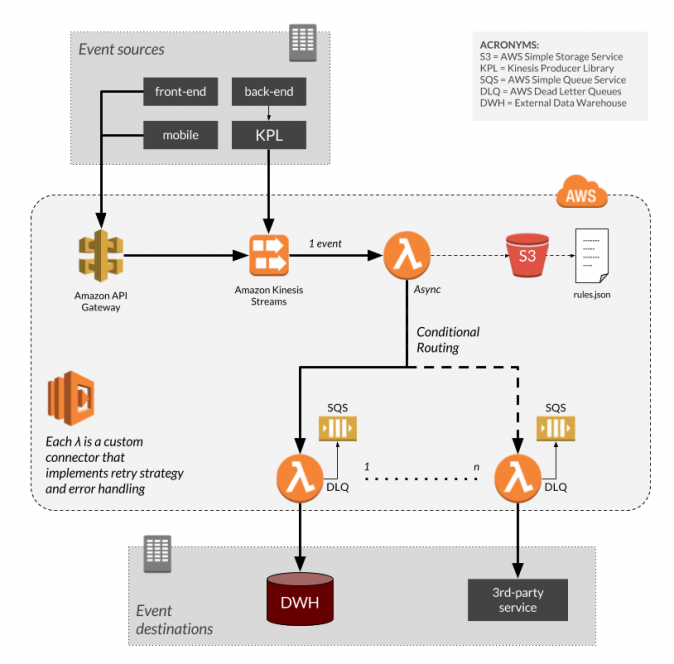

The following schema summarizes the architecture that we’ll be describing:

The use case that I will be analyzing involves the collection of data from multiple sources: backend, front-end, and mobile generated events. The architecture for data collection is designed to send events to different destinations, like our data warehouse and other third-party services (e.g., Hubspot, Mixpanel, GTM, customer.io). As a result, the architecture includes several serverless AWS cloud services, creating a basic data collection flow that can be easily extended by adding further modules as needed:

Let’s analyze the architecture in detail.

The data that we need to track is generated by the back-end server, the front-end website, and the mobile application. The back-end sends events directly to Kinesis Streams using the KPL (Kinesis Producer Library). Examples of server-side events are a user login or a file download.

On the other hand, events originating from the front-end and from mobile are sent to Kinesis Streams through an Amazon API Gateway that exposes a REST endpoint. Examples of such events are a page scroll on the homepage tracked via JavaScript, or a button click in the iOS or Android app.
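To make the client side concrete, here is a minimal Python sketch of the request a front-end or mobile client would send: one JSON event with `name` and `data` fields, POSTed to the API Gateway endpoint. The URL is a hypothetical placeholder, and the sketch assumes the API Gateway integration (not shown) maps the request body to a Kinesis record; a real website or app would make the equivalent call from JavaScript, Swift, or Kotlin.

```python
import json
import urllib.request

# Hypothetical placeholder: use the invoke URL of your own API Gateway stage
API_URL = "https://api-id.execute-api.us-east-1.amazonaws.com/prod/events"


def build_event_request(name, data, url=API_URL):
    """Build (without sending) a POST request that carries one tracking event."""
    body = json.dumps({"name": name, "data": data}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_event(name, data, url=API_URL, timeout=5):
    """Send the event to the endpoint and return the HTTP status code."""
    request = build_event_request(name, data, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

For example, `send_event("page_scroll", {"depth_percent": 75})` would report a scroll event; splitting request construction from sending keeps the payload format easy to check without network access.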

First of all, why Amazon Kinesis Streams? Amazon Kinesis Streams is the ideal tool if you need to collect and process large streams of data in real time. Typical scenarios for using Amazon Kinesis Streams include:

In addition, the API Gateway can be used as the endpoint for the front-end and mobile apps. When configuring Amazon Kinesis Streams, we suggest starting with a single shard and increasing the shard count only when needed.
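To judge when "needed" arrives, a rough estimate can be derived from the per-shard write limits of provisioned Kinesis streams (1,000 records per second and 1 MB per second per shard). The helper below is a hypothetical sketch under those assumptions; check the current Kinesis quotas before relying on the numbers.

```python
import math

# Per-shard write limits for provisioned Kinesis streams (check the current quotas)
MAX_RECORDS_PER_SHARD = 1000        # records per second
MAX_BYTES_PER_SHARD = 1024 * 1024   # 1 MB (MiB) per second


def estimate_shards(events_per_minute, avg_event_bytes, headroom=1.0):
    """Rough number of shards needed to absorb a steady write load.

    headroom > 1.0 reserves capacity for traffic peaks (e.g. 1.5 = +50%).
    """
    records_per_sec = events_per_minute / 60.0 * headroom
    bytes_per_sec = records_per_sec * avg_event_bytes
    by_count = math.ceil(records_per_sec / MAX_RECORDS_PER_SHARD)
    by_size = math.ceil(bytes_per_sec / MAX_BYTES_PER_SHARD)
    # Whichever limit is hit first decides; a stream always has at least one shard
    return max(1, by_count, by_size)
```

With 6,000 events per minute of about 500 bytes each, `estimate_shards(6000, 500)` returns 1, which is consistent with starting from a single shard.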

The following Python code sends 300 events to the configured stream. The PartitionKey attribute determines which shard each record is assigned to; in the example, it is generated from the current date and time, so events sent within the same second share a key and land on the same shard. Finally, pay attention to the payload format: always include the name and data fields. You can find a ready-to-use blueprint in the AWS Lambda console.

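```python
import json
from time import gmtime, strftime

import boto3

# Kinesis client for the region where the stream lives
client = boto3.client(
    service_name="kinesis",
    region_name="us-east-1",
)

for i in range(300):
    print("sending event", i)
    # Every payload must carry the "name" and "data" fields
    payload = {"name": "event-%d" % i, "data": {"square": i * i}}
    response = client.put_record(
        StreamName="data-collection-stream",
        Data=json.dumps(payload),
        # Timestamp-based partition key (one-second resolution)
        PartitionKey=strftime("PK-%Y%m%d-%H%M%S", gmtime()),
    )
    print("response:", response)
```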

AWS Lambda is a serverless compute service from Amazon Web Services that runs your code for virtually any type of backend application. Lambda automatically manages the underlying compute resources for you and runs your functions in response to events triggered by other AWS services or to direct calls from outside AWS. Relevant AWS Lambda Functions:

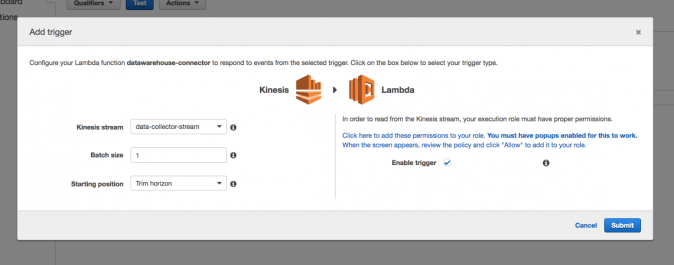

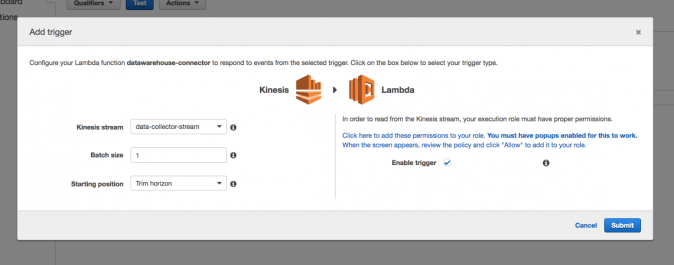

With reference to the proposed architecture, the first AWS Lambda Function is triggered directly by Kinesis Streams. To preserve the order in which events are processed, we recommend configuring the trigger with a batch size of 1 and the starting position set to Trim horizon (i.e., starting from the oldest record available in the stream), as shown in the following snapshot of the AWS Lambda Function console:
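The same trigger can also be created programmatically. This sketch uses the boto3 Lambda client's `create_event_source_mapping` call with `BatchSize=1` and `StartingPosition="TRIM_HORIZON"`; the stream ARN and the function name `event-router` are hypothetical, and the client is passed in so the parameters can be inspected without AWS credentials.

```python
def build_trigger_config(stream_arn, function_name):
    """Parameters for a Kinesis -> Lambda trigger that delivers one record per invocation."""
    return {
        "EventSourceArn": stream_arn,
        "FunctionName": function_name,
        "BatchSize": 1,                      # one record per invocation
        "StartingPosition": "TRIM_HORIZON",  # begin with the oldest record still in the stream
        "Enabled": True,
    }


def create_trigger(lambda_client, stream_arn, function_name):
    """Create the event source mapping through a boto3 Lambda client."""
    return lambda_client.create_event_source_mapping(
        **build_trigger_config(stream_arn, function_name)
    )
```

In practice you would call `create_trigger(boto3.client("lambda"), stream_arn, "event-router")` once during setup.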

This AWS Lambda Function is in charge of routing the events coming from the Kinesis Stream to several destination services. For a more cost-effective solution, we recommend implementing conditional routing based on the event properties. For instance, based on the event name, a set of rules can decide whether to discard an event or forward it to a certain destination.
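The rule matching itself is a simple lookup. A minimal sketch, assuming rules with the same structure as the routing rules JSON used in this post (`destination_name`, `destination_arn`, `enabled_events`); the ARNs and event lists below are placeholders.

```python
# Placeholder rules with the same structure as the routing rules file
RULES = [
    {"destination_name": "mixpanel",
     "destination_arn": "arn:aws:lambda:us-east-1:123456789012:function:mixpanel:prod",
     "enabled_events": ["page_view", "search", "button_click", "page_scroll"]},
    {"destination_name": "hubspotcrm",
     "destination_arn": "arn:aws:lambda:us-east-1:123456789012:function:hubspot:prod",
     "enabled_events": ["login", "logout", "registration", "page_view", "search"]},
]


def route_event(event, rules):
    """Return the destination ARNs whose enabled_events list contains the event name.

    An empty list means the event is discarded.
    """
    name = event.get("name")
    return [rule["destination_arn"] for rule in rules
            if name in rule.get("enabled_events", [])]
```

Here `route_event({"name": "page_view"}, RULES)` returns both ARNs, while an unknown event name returns an empty list and is dropped without invoking anything.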

To this end, I defined a set of routing rules in a JSON file stored in an S3 bucket:

```json
[
  {
    "destination_name": "mixpanel",
    "destination_arn": "arn:aws:lambda:region:account-id:function:function-name:prod",
    "enabled_events": [
      "page_view",
      "search",
      "button_click",
      "page_scroll"
    ]
  },
  {
    "destination_name": "hubspotcrm",
    "destination_arn": "arn:aws:lambda:region:account-id:function:function-name:prod",
    "enabled_events": [
      "login",
      "logout",
      "registration",
      "page_view",
      "search",
      "email_sent",
      "email_open"
    ]
  },
  {
    "destination_name": "datawarehouse",
    "destination_arn": "arn:aws:lambda:region:account-id:function:function-name:prod",
    "enabled_events": [
      "login",
      "logout",
      "registration",
      "page_view",
      "search",
      "button_click",
      "page_scroll",
      "email_sent",
      "email_open"
    ]
  }
]
```

I recommend using AWS Lambda environment variables to configure the names of the S3 bucket and file so that they can be updated at any time without editing the code. Each rule has a destination_arn attribute that specifies where the event is sent if its name is included in the enabled_events list. The routing Lambda Function sends the event to the configured ARN (Amazon Resource Name), each of which corresponds to a specific Lambda Function.
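Loading the rules could look like the sketch below: the bucket and key come from environment variables (the names `ROUTING_RULES_BUCKET` and `ROUTING_RULES_KEY` are hypothetical) and the object is read with the S3 client's `get_object` call. The client and environment are injected so the function can be exercised without AWS.

```python
import json
import os

# Hypothetical environment variable names configured on the routing function
BUCKET_VAR = "ROUTING_RULES_BUCKET"
KEY_VAR = "ROUTING_RULES_KEY"


def load_routing_rules(s3_client, environ=None):
    """Read the routing rules JSON from the S3 bucket/key named in environment variables."""
    env = os.environ if environ is None else environ
    response = s3_client.get_object(Bucket=env[BUCKET_VAR], Key=env[KEY_VAR])
    # The object body is a stream of bytes; json.loads accepts bytes directly
    return json.loads(response["Body"].read())
```

Inside the handler this would be called as `load_routing_rules(boto3.client("s3"))`. Reading the file on every invocation picks up rule changes immediately; caching the result in a module-level variable saves S3 requests, at the cost of changes reaching only new execution environments.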

I suggest forwarding events one by one to avoid data loss. This way, the AWS Lambda Function in charge of conditional routing handles only one message at a time.
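With the trigger's batch size set to 1, each invocation of the routing function receives a single Kinesis record, whose data arrives base64-encoded. The sketch below decodes the record and invokes every matching destination asynchronously (`InvocationType="Event"`), one event per invocation. It assumes the `name`/`data` payload format and an already loaded rules list; the function names are hypothetical.

```python
import base64
import json


def decode_kinesis_records(event):
    """Yield the JSON payloads carried by a Kinesis-triggered Lambda event."""
    for record in event.get("Records", []):
        # Kinesis delivers each record's data base64-encoded
        yield json.loads(base64.b64decode(record["kinesis"]["data"]))


def forward_one_by_one(event, rules, lambda_client):
    """Invoke each matching destination asynchronously, one event per invocation."""
    invocations = 0
    for payload in decode_kinesis_records(event):
        body = json.dumps(payload).encode("utf-8")
        for rule in rules:
            if payload.get("name") in rule.get("enabled_events", []):
                lambda_client.invoke(
                    FunctionName=rule["destination_arn"],
                    InvocationType="Event",  # asynchronous invocation
                    Payload=body,
                )
                invocations += 1
    return invocations
```

An asynchronous `invoke` returns as soon as Lambda has queued the event, so the router stays fast even when a destination service is slow.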

Finally, we need to implement an AWS Lambda Function for each destination service (e.g., Google Analytics, Mixpanel, Hubspot, database) that validates the incoming event and forwards it to that service. These functions are invoked asynchronously by the routing Lambda (using the destination_arn).
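A destination function might be structured as follows. This is a minimal sketch: the validation only checks the `name` and `data` fields that every event is required to carry, and `send` stands in for a hypothetical call to the destination service's API.

```python
def validate_event(event):
    """Return a list of problems; an empty list means the event is valid."""
    problems = []
    name = event.get("name")
    if not isinstance(name, str) or not name:
        problems.append("missing or empty 'name'")
    if not isinstance(event.get("data"), dict):
        problems.append("'data' must be a JSON object")
    return problems


def make_handler(send):
    """Build a destination handler; send is a hypothetical callable that talks to the service."""
    def handler(event, context):
        problems = validate_event(event)
        if problems:
            # A failed asynchronous invocation is retried by Lambda and can end up in a DLQ
            raise ValueError("invalid event: " + "; ".join(problems))
        send(event)
        return {"forwarded": event["name"]}
    return handler
```

Raising on invalid input (rather than silently dropping the event) makes the failure visible and lets the event be collected in a Dead Letter Queue for later inspection.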

Each AWS Lambda function implements:

In addition, a few suggestions that we found useful during the design and the development of the data collection architecture:

Even though each Lambda Function implements its own retry strategy, some events may still not be stored by the destination service (e.g., because of network problems or missing data).
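The post does not prescribe a particular retry strategy; a common choice is exponential backoff with jitter. A minimal sketch with hypothetical names, where `func` wraps the call to the destination service and `sleep` is injectable for testing:

```python
import random
import time


def call_with_retries(func, max_attempts=3, base_delay=0.5,
                      retry_on=(Exception,), sleep=time.sleep):
    """Call func(); on a retryable error wait with exponential backoff and jitter, then retry.

    After max_attempts failures the last exception is re-raised, so the
    invocation fails and Lambda's own error handling (retries, DLQ) takes over.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts:
                raise
            delay = base_delay * 2 ** (attempt - 1)   # 0.5 s, 1 s, 2 s, ...
            sleep(delay + random.uniform(0, delay / 2))
```

Narrowing `retry_on` to transient errors (for example `ConnectionError`) avoids wasting attempts on failures that will never succeed, such as malformed data.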

Fortunately, Lambda lets you implement a fallback strategy: when an event cannot be processed, instead of being lost it can be stored in a so-called Dead Letter Queue (DLQ). The DLQ can be either an Amazon SQS (Simple Queue Service) queue or an Amazon SNS (Simple Notification Service) topic.

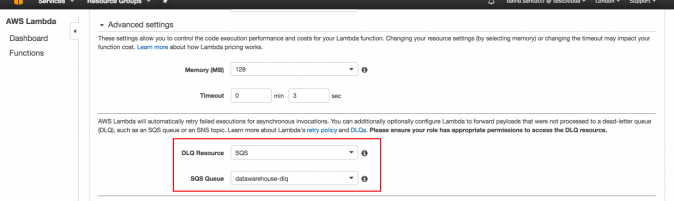

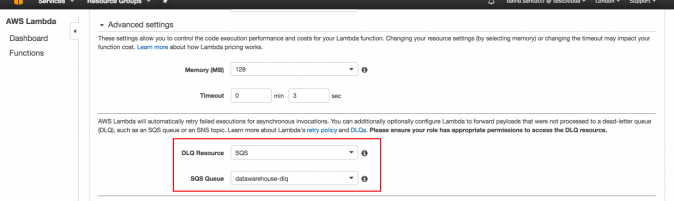

From the Lambda advanced settings (see the following snapshot), you can configure the AWS Lambda Function to forward payloads that could not be processed to a dedicated Dead Letter Queue. In our example, we configured an SQS queue.
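The console setting also has an API equivalent: the boto3 Lambda client's `update_function_configuration` call with a `DeadLetterConfig` parameter. A minimal sketch, with a hypothetical function name and queue ARN; note that the function's execution role also needs permission to write to the target (`sqs:SendMessage` for a queue, `sns:Publish` for a topic).

```python
def attach_dead_letter_queue(lambda_client, function_name, target_arn):
    """Send a function's failed asynchronous invocations to an SQS queue or SNS topic."""
    # ARN format: arn:partition:service:region:account-id:resource
    service = target_arn.split(":")[2] if target_arn.count(":") >= 5 else ""
    if service not in ("sqs", "sns"):
        raise ValueError("DLQ target must be an SQS queue or SNS topic ARN")
    return lambda_client.update_function_configuration(
        FunctionName=function_name,
        DeadLetterConfig={"TargetArn": target_arn},
    )
```

Looping over all destination functions with this helper is one way to make sure none of them is left without a DLQ.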

You can recover the complete list of DLQ messages using another AWS Lambda Function that is triggered manually. Remember to set a DLQ on each Lambda Function that can fail! Then you can process all of the collected events again with a custom script like the following one, which backs up every DLQ message to a JSON Lines file and optionally deletes it from the queue:

```python
import json

import boto3


def get_events_from_sqs(
        sqs_queue_name,
        region_name='us-west-2',
        purge_messages=False,
        backup_filename='backup.jsonl',
        visibility_timeout=60):
    """
    Create a JSON Lines backup file of all events in the SQS queue with the given 'sqs_queue_name'.

    :sqs_queue_name: the name of the AWS SQS queue to be read via boto3
    :region_name: the region name of the AWS SQS queue to be read via boto3
    :purge_messages: True if messages must be deleted after reading, False otherwise
    :backup_filename: the name of the file where to store all SQS messages
    :visibility_timeout: period of time in seconds (unique consumer window)
    :return: the number of processed batches of events
    """
    forwarded = 0
    counter = 0
    sqs = boto3.resource('sqs', region_name=region_name)
    dlq = sqs.get_queue_by_name(QueueName=sqs_queue_name)
    with open(backup_filename, 'a') as filep:
        while True:
            # Long-poll for up to 10 messages at a time
            batch_messages = dlq.receive_messages(
                MessageAttributeNames=['All'],
                MaxNumberOfMessages=10,
                WaitTimeSeconds=20,
                VisibilityTimeout=visibility_timeout,
            )
            if not batch_messages:
                break  # no more visible messages in the queue
            for msg in batch_messages:
                try:
                    # One JSON object per line (JSON Lines)
                    line = "{}\n".format(json.dumps({
                        'attributes': msg.message_attributes,
                        'body': msg.body,
                    }))
                    print("Line: ", line)
                    filep.write(line)
                    if purge_messages:
                        print('Deleting message from the queue.')
                        msg.delete()
                    forwarded += 1
                except Exception as ex:
                    print("Error in processing message %s: %r" % (msg, ex))
            counter += 1
            print('Batch %d processed' % counter)
    print('%d messages backed up' % forwarded)
    return counter
```
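Once the backup file exists, the events can be sent again. A minimal sketch, assuming each backed-up message body is the original JSON event (the payload Lambda writes to the DLQ) and that replaying means re-invoking a destination function asynchronously; the function ARN is hypothetical, and the lines are taken from any iterable so the logic is testable without a file.

```python
import json


def replay_events(lines, lambda_client, function_arn):
    """Re-invoke function_arn asynchronously for every event in a JSON Lines backup."""
    replayed = 0
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        lambda_client.invoke(
            FunctionName=function_arn,
            InvocationType="Event",
            # Assumption: the SQS message body is the original JSON event
            Payload=record["body"].encode("utf-8"),
        )
        replayed += 1
    return replayed


def replay_backup(backup_filename, lambda_client, function_arn):
    """Replay every event stored in the backup file written by get_events_from_sqs."""
    with open(backup_filename) as filep:
        return replay_events(filep, lambda_client, function_arn)
```

Running the replay only after the root cause (for example, a destination outage) is fixed avoids sending the same events straight back to the DLQ.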

I strongly recommend that you consider building a serverless architecture for the management of your data collection pipeline. In this post, we designed a serverless data collection pipeline using several AWS Cloud services such as AWS Lambda Functions and Amazon Kinesis Streams in order to ensure scalability and prevent data loss. The main advantage of this solution is the full control of the process and the cost, since you only pay for the compute time that you consume.

In conclusion, I would suggest a couple of very useful extensions that can be integrated into the serverless architecture: