AWS CloudWatch is a monitoring and alerting service that integrates with most AWS services like EC2 or RDS. It can monitor system performance in near real time and generate alerts based on thresholds you set.

The number of performance counters is fixed for any particular AWS service, but their thresholds are configurable. The alerts can be sent to system administrators through a number of channels.

Although most people think of CloudWatch as a bare-bones monitoring tool with a handful of counters, it’s actually more than that. CloudWatch can work as a good log management solution for companies running their workload in AWS.

By “log management”, we mean CloudWatch:

- Can store log data from multiple sources in a central location

- Can enforce a retention policy on those logs so they are available for a specific period

- Offers a searching facility to look inside the logs for important information

- Can generate alerts based on metrics you define on the logs

CloudWatch logs can come from a number of sources. For example:

- Logs generated by applications like Nginx, IIS or MongoDB

- Operating system logs like syslog from EC2 instances

- Logs generated by CloudTrail events

- Logs generated by Lambda functions

Some Basic Terms

Before going any further, let’s talk about two important concepts.

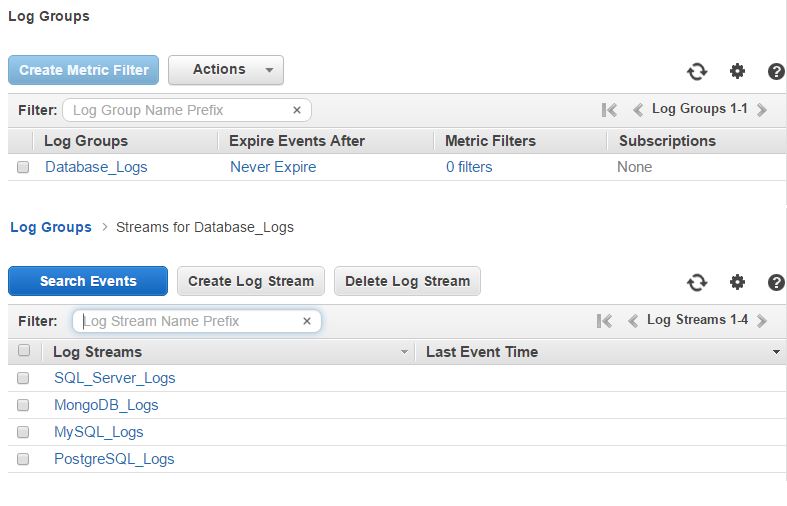

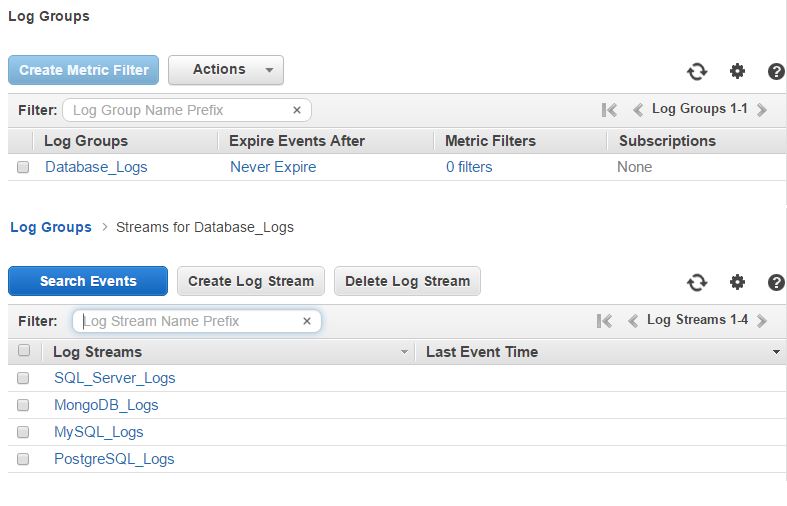

CloudWatch Logs are arranged in what’s known as Log Groups and Log Streams. Basically, a log stream represents the source of your log data. For example, Nginx error logs streaming to CloudWatch will be part of one log stream. Java logs coming from app servers will be part of another log stream, database logs would form another stream and so on. In other words, each log stream is like a channel for log data coming from a particular source.

Log groups are used to group log streams together. A log group can contain one or more log streams, and all the streams in a group share the same retention policy, monitoring settings and access control permissions. For example, your “Web App” log group can have one log stream for web servers, one for app servers and another for database servers. If you set a retention policy of, say, two weeks for this log group, that setting applies to each of its log streams.
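To make the relationship between log groups and log streams concrete, here is a small in-memory model in Python. It is purely illustrative: the `LogGroup` and `LogStream` classes and the `expire` method are hypothetical names, not part of any AWS SDK, and CloudWatch enforces retention on its own side rather than in your code. What it shows is that retention is configured once on the group and applied to every stream inside it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class LogStream:
    """One channel of log data from a single source (hypothetical model)."""
    name: str
    events: list = field(default_factory=list)  # (timestamp, message) pairs


@dataclass
class LogGroup:
    """A set of streams sharing one retention setting (hypothetical model)."""
    name: str
    retention_days: int
    streams: dict = field(default_factory=dict)

    def stream(self, name: str) -> LogStream:
        # Create the stream the first time a source writes to it
        return self.streams.setdefault(name, LogStream(name))

    def expire(self, now: datetime) -> int:
        """Drop events older than the group's retention period from every stream."""
        cutoff = now - timedelta(days=self.retention_days)
        removed = 0
        for s in self.streams.values():
            kept = [(ts, msg) for ts, msg in s.events if ts >= cutoff]
            removed += len(s.events) - len(kept)
            s.events = kept
        return removed


# A "Web App" group with a two-week retention policy and three streams
now = datetime(2016, 1, 21, tzinfo=timezone.utc)
web_app = LogGroup("Web App", retention_days=14)
web_app.stream("web-servers").events.append((now - timedelta(days=20), "old nginx error"))
web_app.stream("app-servers").events.append((now - timedelta(days=1), "recent app log"))
web_app.stream("db-servers").events.append((now - timedelta(days=15), "old db log"))

removed = web_app.expire(now)  # the same 14-day cutoff is applied to all three streams
```

Only the one-day-old event in the app-server stream survives, because the cutoff comes from the group, not from the individual streams.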

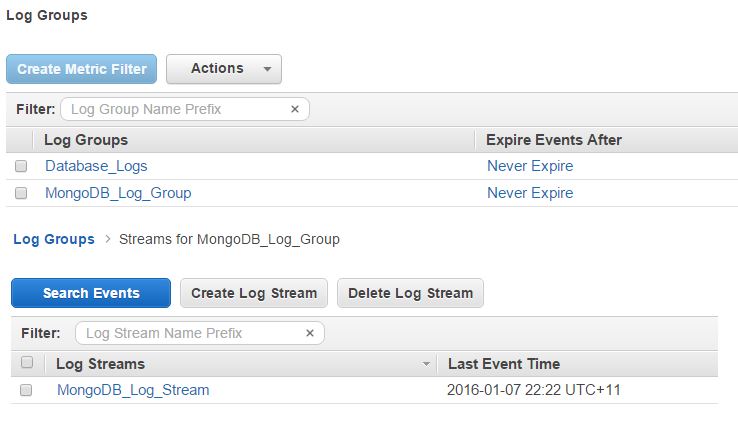

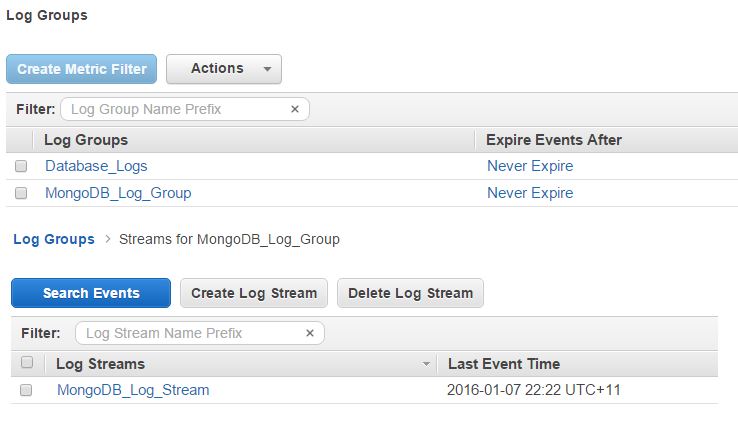

The image below shows a log group and its log streams:

Amazon EC2 and AWS CloudWatch Logs

We will start our discussion with Amazon EC2 instances. There are three ways Amazon EC2-hosted applications can send their logs to CloudWatch:

- A script file can call AWS CLI commands to push the logs. The script file can be scheduled through an operating system job like cron

- A custom-written application can push the logs using AWS CloudWatch Logs SDK or API

- AWS CloudWatch Logs Agent or EC2Config service running on the machine can push the logs
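For the second method, a custom application calls the CloudWatch Logs `PutLogEvents` API. The sketch below only prepares the requests and makes no AWS calls. It relies on limits AWS documents for `PutLogEvents`: events in a batch must be in chronological order, a batch may hold at most 10,000 events and 1,048,576 bytes (counted as the UTF-8 size of each message plus 26 bytes per event), and a batch may not span more than 24 hours. `build_batches` is a hypothetical helper name; each dictionary it returns uses the keyword arguments of boto3's `put_log_events`.

```python
MAX_BATCH_EVENTS = 10_000
MAX_BATCH_BYTES = 1_048_576          # UTF-8 message bytes + 26 bytes per event
EVENT_OVERHEAD_BYTES = 26
MAX_SPAN_MS = 24 * 60 * 60 * 1000    # one batch may not span more than 24 hours


def build_batches(group, stream, events):
    """Split (timestamp_ms, message) pairs into put_log_events keyword arguments."""

    def request(batch):
        return {"logGroupName": group, "logStreamName": stream, "logEvents": batch}

    batches, current, size = [], [], 0
    for ts, msg in sorted(events, key=lambda e: e[0]):  # events must be chronological
        event_size = len(msg.encode("utf-8")) + EVENT_OVERHEAD_BYTES
        if current and (
            len(current) == MAX_BATCH_EVENTS
            or size + event_size > MAX_BATCH_BYTES
            or ts - current[0]["timestamp"] > MAX_SPAN_MS
        ):
            batches.append(request(current))  # close the batch before a limit is exceeded
            current, size = [], 0
        current.append({"timestamp": ts, "message": msg})
        size += event_size
    if current:
        batches.append(request(current))
    return batches


events = [(1452162978414 + i, f"line {i}") for i in range(25_000)]
batches = build_batches("MongoDB_Log_Group", "MongoDB_Log_Stream", events)
print([len(b["logEvents"]) for b in batches])  # [10000, 10000, 5000]

# With boto3 installed and credentials available, each request could then be sent:
# import boto3
# logs = boto3.client("logs")
# for req in batches:
#     logs.put_log_events(**req)
```

Keeping the batching separate from the network call makes the limit handling easy to test without an AWS account.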

Of these three methods, the third one is the simplest. This is a typical setup for many log monitoring systems. In this case, a software agent runs as a background service in the target EC2 instance, and automatically sends logs to CloudWatch. There are two prerequisites for this to work:

- The EC2 instance needs to be able to access the AWS CloudWatch service to create log groups and log streams in it and write to the log streams

- The EC2 instance needs to know what application it should monitor and how to handle the events logged by the application (for example, the EC2 instance needs to know the name and path to the log file and the corresponding log group / log stream names)

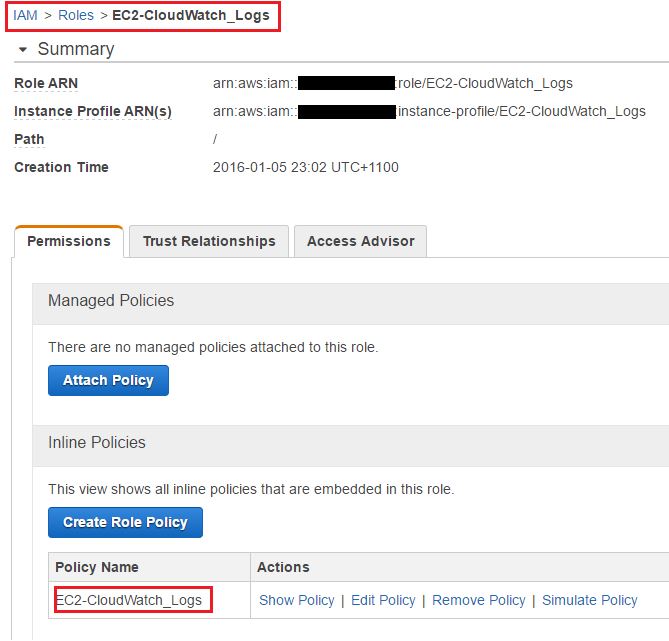

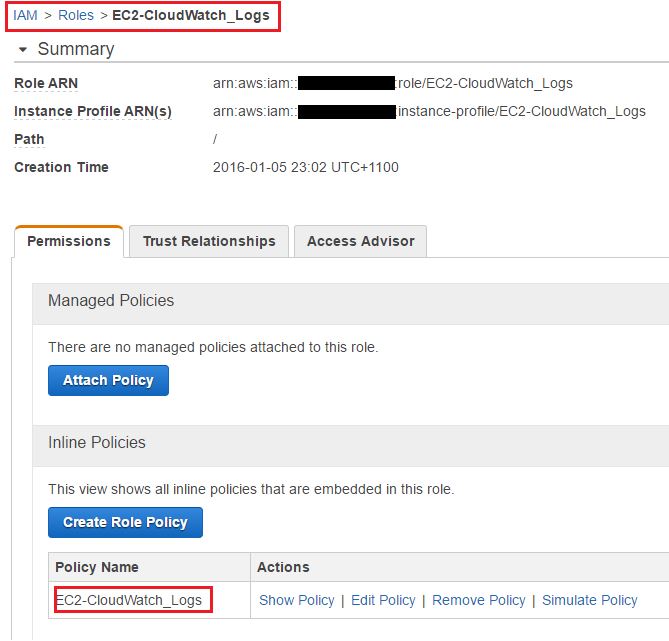

The first prerequisite is handled when an EC2 instance is either:

- Launched with an IAM role that has these privileges or

- Configured with the credentials of an AWS account that has these privileges (the account credentials are set in the agent’s configuration file)

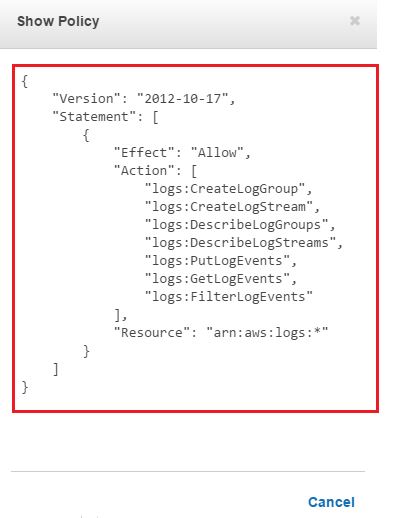

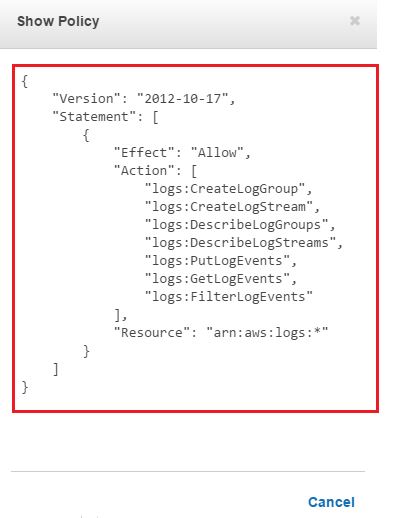

Given that you can’t attach an IAM role to an existing EC2 instance, and it’s not a good idea to leave AWS account credentials exposed in plain text configuration files, we strongly recommend launching EC2 instances with at least a “dummy” IAM role. This role can be modified later to include CloudWatch Logs privileges.In the image below, we have created one such role and assigned permissions to its policy:

Any EC2 instance assuming this role (EC2-CludWatch_Logs) will now be able to send data to CloudWatch Logs.
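The exact permissions in our role are only visible in the screenshot, so the following is an assumption: it is modeled on the policy AWS's quick-start guide for the CloudWatch Logs agent shows, granting the actions the agent needs to create log groups and streams and to write events to them. `logs_agent_policy` is a hypothetical helper; its `resource` parameter lets you narrow the default `arn:aws:logs:*:*:*` to a specific region or account.

```python
import json


def logs_agent_policy(resource: str = "arn:aws:logs:*:*:*") -> dict:
    """Build an IAM policy letting an instance create log groups/streams and write events."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                    "logs:DescribeLogStreams",
                ],
                "Resource": [resource],
            }
        ],
    }


# Print the JSON document to paste into the role's policy in the IAM console
print(json.dumps(logs_agent_policy(), indent=2))
```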

The second prerequisite is handled by the EC2Config service or the CloudWatch Logs Agent’s configuration file. The configuration details can be modified later.

In the next two sections, we will see how Linux and Windows EC2 instances can send their logs to CloudWatch. To keep things simple, we will assume both instances were launched with the IAM role we just created.

The Linux machine will have a MongoDB instance running and the Windows box will have a SQL Server instance running. We will see how both MongoDB and SQL Server can send their logs to CloudWatch.

Sending Logs from EC2 Linux Instances

Sending application logs from Linux EC2 instances to CloudWatch requires the CloudWatch Logs Agent to be installed on the machine. The process is fairly straightforward for systems running Amazon Linux where you need to run the following command:

$ sudo yum install -y awslogs

This will install the agent through yum. Once installed, you need to modify two files:

- /etc/awslogs/awscli.conf: modify this file to provide the necessary AWS credentials (unless the instance was launched with an appropriate IAM role) and the region name where you want to send the log data

- /etc/awslogs/awslogs.conf: edit this file to specify which log files you want streamed to CloudWatch
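On an instance launched with an IAM role, awscli.conf only needs the region. The sketch below renders such a file with Python's `configparser`. The `[plugins]` section with `cwlogs = cwlogs` reflects the file as shipped with the awslogs package, to the best of our knowledge; treat it, the `awscli_conf` helper name and the example region `us-east-1` as assumptions, and compare with the file on your own instance.

```python
import configparser
import io


def awscli_conf(region: str) -> str:
    """Render /etc/awslogs/awscli.conf for an instance that relies on its IAM role."""
    cfg = configparser.ConfigParser()
    cfg["plugins"] = {"cwlogs": "cwlogs"}  # assumed: plugin entry from the packaged file
    cfg["default"] = {"region": region}    # no access keys: the IAM role supplies credentials
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


print(awscli_conf("us-east-1"))
```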

Once the files have been modified, you can start the service:

$ sudo service awslogs start

Installing the CloudWatch Logs Agent on mainstream Linux distros like CentOS/RedHat or Ubuntu is somewhat different. Let’s consider an EC2 instance running RHEL 7.2 and launched with our IAM role. Let’s assume the machine also has a vanilla installation of MongoDB 3.2. Looking at the MongoDB config file on the machine shows the default location of the log file:

$ sudo cat /etc/mongod.conf | grep path

path: /var/log/mongodb/mongod.log

If you tail the log file:

$ sudo tail -n 10 /var/log/mongodb/mongod.log

The log data will look something like this:

2016-01-07T05:36:18.414-0500 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2016-01-07T05:36:18.414-0500 I CONTROL [initandlisten]

2016-01-07T05:36:18.414-0500 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2016-01-07T05:36:18.414-0500 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2016-01-07T05:36:18.414-0500 I CONTROL [initandlisten]

2016-01-07T05:36:18.414-0500 I CONTROL [initandlisten] ** WARNING: soft rlimits too low. rlimits set to 4096 processes, 64000 files. Number of processes should be at least 32000 : 0.5 times number of files.

2016-01-07T05:36:18.414-0500 I CONTROL [initandlisten]

2016-01-07T05:36:18.475-0500 I FTDC [initandlisten] Initializing full-time diagnostic data capture with directory '/var/lib/mongo/diagnostic.data'

2016-01-07T05:36:18.490-0500 I NETWORK [HostnameCanonicalizationWorker] Starting hostname canonicalization worker

2016-01-07T05:36:18.521-0500 I NETWORK [initandlisten] waiting for connections on port 27017
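Each line in the sample follows the same layout: a timestamp, a one-letter severity (`I` for informational), a component such as `CONTROL` or `NETWORK`, a context in square brackets, and the message. Splitting lines into these fields is handy when you later decide what to search for or alert on. The regular expression below is our own sketch inferred from the sample, assuming the plain-text log format of the MongoDB 3.2 installation used here; `parse_line` and `MongoLogLine` are hypothetical names.

```python
import re
from typing import NamedTuple, Optional

# <timestamp> <severity> <component> [<context>] <message>
LINE_RE = re.compile(
    r"^(?P<timestamp>\S+)\s+"
    r"(?P<severity>[FEWID]\d?)\s+"  # F, E, W, I or D, optionally followed by a digit
    r"(?P<component>\S+)\s+"
    r"\[(?P<context>[^\]]+)\]"
    r"\s?(?P<message>.*)$"          # the message may be empty
)


class MongoLogLine(NamedTuple):
    timestamp: str
    severity: str
    component: str
    context: str
    message: str


def parse_line(line: str) -> Optional[MongoLogLine]:
    """Split one MongoDB log line into its fields, or return None if it does not match."""
    m = LINE_RE.match(line.rstrip("\n"))
    return MongoLogLine(**m.groupdict()) if m else None


entry = parse_line(
    "2016-01-07T05:36:18.521-0500 I NETWORK [initandlisten] "
    "waiting for connections on port 27017"
)
print(entry.component, entry.context, entry.message)
```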

It’s the content of this file you want to send to CloudWatch.

As mentioned before, installing the agent on RHEL/CentOS or Ubuntu is slightly different from Amazon Linux. Here, you will have to download a Python script from AWS and run it as the installer.

Step 1. Run the following command as the root or sudo user:

$ sudo wget http://s3.amazonaws.com/aws-cloudwatch/downloads/latest/awslogs-agent-setup.py

This will download the script in the current directory.

Step 2. Next, change the script’s file mode for execution:

$ sudo chmod +x awslogs-agent-setup.py

Step 3. Finally, run the Python script:

$ sudo python ./awslogs-agent-setup.py --region=<EC2 instance’s region name>

This will start the installer in a wizard-like fashion. It will install pip, then download the latest CloudWatch Logs agent and prompt you for different field values:

Launching interactive setup of CloudWatch Logs agent ...

Step 1 of 5: Installing pip ...DONE

Step 2 of 5: Downloading the latest CloudWatch Logs agent bits ... DONE

Step 3 of 5: Configuring AWS CLI ...

AWS Access Key ID [None]:

Press Enter to skip this prompt if you launched the instance with an IAM role with sufficient permissions.

AWS Secret Access Key [None]:

Press Enter again to skip this prompt if your instance was launched with an IAM role.

Default region name [<region name>]:

You can skip this prompt as well if you specified the region name when you called the Python script.

Default output format [None]:

Press Enter again to skip this prompt.

Step 4 of 5: Configuring the CloudWatch Logs Agent ...

Path of log file to upload [/var/log/messages]:

Specify the path and filename of the MongoDB log. For a default installation, it would be /var/log/mongodb/mongod.log

Destination Log Group name [/var/log/mongodb/mongod.log]:

Instead of accepting the default log group name suggested, you can choose to enter a meaningful name. We chose “MongoDB_Log_Group” as the log group name.

Choose Log Stream name:

1. Use EC2 instance id.

2. Use hostname.

3. Custom.

Enter choice [1]:

Next, enter 3 in the prompt to choose a custom name for the log stream.

In the following prompt, specify the log stream name. We used “MongoDB_Log_Stream” as the stream name.

Enter Log Stream name [None]:

In the next prompt, enter 4 to choose a custom timestamp format.

Choose Log Event timestamp format:

1. %b %d %H:%M:%S (Dec 31 23:59:59)

2. %d/%b/%Y:%H:%M:%S (10/Oct/2000:13:55:36)

3. %Y-%m-%d %H:%M:%S (2008-09-08 11:52:54)

4. Custom

Enter choice [1]:

For MongoDB logs, the time stamp format is ISO8601-local.

Enter customer timestamp format [None]: iso8601-local
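The wizard's preset choices are strftime-style patterns, so if the agent does not accept the literal value `iso8601-local`, a strftime-style equivalent is one option. The pattern below is our assumption, not something taken from the agent's documentation, and we check it against a timestamp from the MongoDB log using Python's `strptime`. CloudWatch stores each event's timestamp as milliseconds since the Unix epoch, so the sketch also converts to that unit; `MONGO_DATETIME_FORMAT` and `to_epoch_ms` are hypothetical names.

```python
from datetime import datetime, timedelta, timezone

# Assumed strftime equivalent of MongoDB's iso8601-local timestamps,
# e.g. 2016-01-07T05:36:18.414-0500 (milliseconds plus a UTC offset)
MONGO_DATETIME_FORMAT = "%Y-%m-%dT%H:%M:%S.%f%z"
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_epoch_ms(stamp: str) -> int:
    """Parse a MongoDB timestamp and return milliseconds since the Unix epoch."""
    dt = datetime.strptime(stamp, MONGO_DATETIME_FORMAT)  # %z makes dt timezone-aware
    return (dt - EPOCH) // timedelta(milliseconds=1)      # integer math, no float rounding


print(to_epoch_ms("2016-01-07T05:36:18.414-0500"))  # 1452162978414
```

The same instant written with a `+0000` offset yields the same value, which is a quick way to confirm the offset is being honored.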

Choose initial position of upload:

1. From start of file.

2. From end of file.

Enter choice [1]:

Choose the first option (1) because you want the whole log file to be loaded first.
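Choosing "From start of file" uploads the existing content once; after that, only newly appended lines need to be sent, and the agent keeps track of its progress in a state file (the `state_file` setting in awslogs.conf). The function below is a hypothetical sketch of that bookkeeping, not the agent's actual code: `read_new_lines` remembers a byte offset per log file in a small JSON file, honors `start_of_file` or `end_of_file` on the first run, and leaves a partially written last line for the next call. It does not handle log rotation.

```python
import json
import os
import tempfile


def read_new_lines(log_path, state_path, initial_position="start_of_file"):
    """Return complete lines appended to log_path since the previous call."""
    state = {}
    if os.path.exists(state_path):
        with open(state_path) as f:
            state = json.load(f)
    if log_path in state:
        offset = state[log_path]                # resume where we stopped last time
    elif initial_position == "end_of_file":
        offset = os.path.getsize(log_path)      # first run: skip existing content
    else:
        offset = 0                              # first run: upload the whole file
    with open(log_path, "rb") as f:
        f.seek(offset)
        data = f.read()
    end = data.rfind(b"\n") + 1                 # stop after the last complete line
    state[log_path] = offset + end
    with open(state_path, "w") as f:
        json.dump(state, f)
    return data[:end].decode("utf-8").splitlines()


with tempfile.TemporaryDirectory() as tmp:
    log = os.path.join(tmp, "mongod.log")
    state_file = os.path.join(tmp, "agent-state.json")
    with open(log, "w") as f:
        f.write("first\nsecond\npart")          # last line is not finished yet
    first_run = read_new_lines(log, state_file)
    with open(log, "a") as f:
        f.write("ial\nthird\n")
    second_run = read_new_lines(log, state_file)
    other = os.path.join(tmp, "other.log")
    with open(other, "w") as f:
        f.write("old line\n")
    skipped = read_new_lines(other, state_file, initial_position="end_of_file")
```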

Finally, the wizard asks if you want to configure more log files.

More log files to configure? [Y]:

By entering “y”, you can choose to send multiple log files from one server to different log groups and log streams. For this particular exercise we entered “N”. The wizard then finishes with a message like this:

– Configuration file successfully saved at: /var/awslogs/etc/awslogs.conf

– You can begin accessing new log events after a few moments at: https://console.aws.amazon.com/cloudwatch/home?region=<region-name>#logs:

– You can use ‘sudo service awslogs start|stop|status|restart’ to control the daemon.

– To see diagnostic information for the CloudWatch Logs Agent, see /var/log/awslogs.log

– You can rerun interactive setup using ‘sudo python ./awslogs-agent-setup.py --region <region-name> --only-generate-config’

From the final messages in the wizard, you know where the config file is created (/var/awslogs/etc/awslogs.conf). If you look into this file, you will find that the options chosen in the wizard have been added at the end of the file. If you want to automate the installation process, you can first create this file with the appropriate details and then call the Python script. The command will look like this:

$ sudo python ./awslogs-agent-setup.py --region=<EC2 instance’s region name> --configfile=/var/awslogs/etc/awslogs.conf
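For an automated install, the configuration file passed to the setup command above can be generated by a script. The sketch below writes one section per log file using `configparser`, with the values we entered in the wizard. It assumes the `[general]` section, the `state_file` path and the per-file keys (`file`, `log_group_name`, `log_stream_name`, `datetime_format`, `initial_position`, `buffer_duration`) match what the wizard appended to your own awslogs.conf, so compare the two before relying on it; `awslogs_conf` is a hypothetical helper, and the `datetime_format` value is the assumed strftime pattern for MongoDB timestamps. Interpolation is disabled so the `%` signs in `datetime_format` are written literally.

```python
import configparser
import io


def awslogs_conf(files, state_file="/var/awslogs/state/agent-state"):
    """Render awslogs.conf text; `files` maps a log path to (group, stream, datetime_format)."""
    # interpolation=None: otherwise configparser rejects the '%' in strftime patterns
    cfg = configparser.ConfigParser(interpolation=None)
    cfg["general"] = {"state_file": state_file}  # assumed default location
    for path, (group, stream, dt_format) in files.items():
        cfg[path] = {                            # one section per monitored file
            "file": path,
            "log_group_name": group,
            "log_stream_name": stream,
            "datetime_format": dt_format,
            "initial_position": "start_of_file",
            "buffer_duration": "5000",
        }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


conf = awslogs_conf({
    "/var/log/mongodb/mongod.log": (
        "MongoDB_Log_Group",
        "MongoDB_Log_Stream",
        "%Y-%m-%dT%H:%M:%S.%f%z",  # assumed strftime equivalent of iso8601-local
    ),
})
print(conf)  # save as /var/awslogs/etc/awslogs.conf (as root) before running the setup script
```

Adding another entry to the dictionary produces another section, which mirrors answering “y” to the wizard's "More log files to configure?" prompt.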

As a final step, restart the agent service:

$ sudo systemctl restart awslogs.service

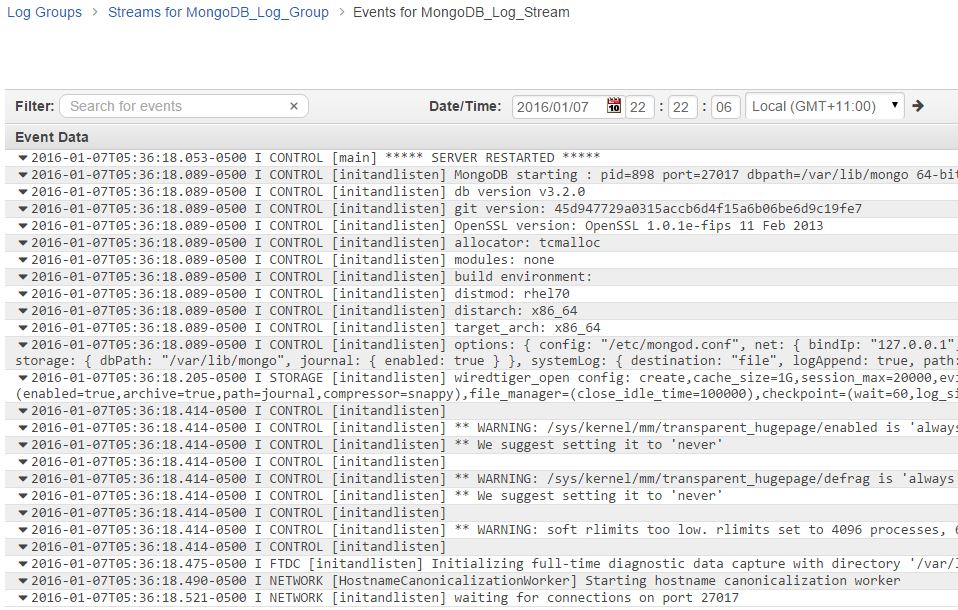

Looking in the CloudWatch Logs console will now show the log group and log stream created:

Browsing the log stream will show the log file has been copied:

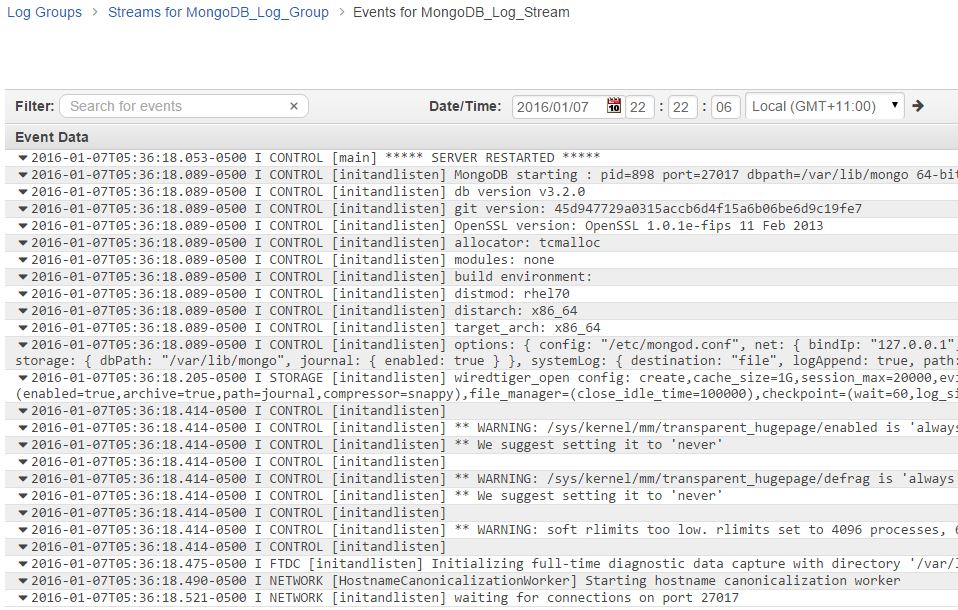

To test, you can connect to the MongoDB instance and run some commands to create a database and add a collection. The commands and their output are shown below.

use mytestdb

switched to db mytestdb

db.createCollection('mytestcol')

{ "ok" : 1 }

show dbs

local 0.000GB

mytestdb 0.000GB

exit

bye

The connection is recorded in the MongoDB log file and flows on to the CloudWatch log stream.

Conclusion

That concludes our basic introduction to AWS CloudWatch Logs. As you just saw, it’s really simple to make EC2 Linux instances send their logs to CloudWatch. In the next part of this three-part series, we will see how other sources can also send their log data to CloudWatch. Feel free to send us your comments or questions on the post. By sharing our experience, we will all continue learning. If you want to try out some of what you just learned, you can work on one of the Cloud Academy hands-on labs: Introduction to CloudWatch. There is a 7-day free trial.

If you want to know more about performance monitoring with AWS CloudWatch you can read this article from Nitheesh Poojary, also published in Cloud Academy blog.

If you’re interested in learning more about Amazon CloudWatch, Cloud Academy’s Getting Started to CloudWatch Course is your go-to course. Watch this short video taken from the course.