Pivotal Cloud Foundry deployments can be complicated. Learn how to properly create and restore from backups.

Pivotal Cloud Foundry (PCF) is an open source platform based on Cloud Foundry and offered as a collaboration between Pivotal, EMC, and GE. Pivotal Cloud Foundry runs on almost all popular cloud infrastructures, including VMware, AWS, and OpenStack. PCF as a platform is dynamic, developer friendly, and features full-lifecycle support.

Organizations implementing Pivotal Cloud Foundry as their cloud platform free themselves from managing application infrastructure. By integrating freely available third-party tools and services, they can also achieve high availability, auto-scaling, dynamic routing, multi-language support, and log analysis.

Pivotal Cloud Foundry performs exceedingly well when intelligently designed and maintained, but there are still some time-consuming tasks that demand an admin’s attention. One such operational task is ensuring that installation settings and essential internal databases are regularly backed up. Pivotal recommends that you back up your installation settings by exporting them at regular intervals (weekly, bi-weekly, monthly, etc.). We’re going to discuss how to design an effective and reliable backup process and how to apply an archive when you need to restore your installation.

Note: According to Pivotal Cloud Foundry documentation, exporting your installation only backs up your installation settings. It does not back up your VMs or any external MySQL databases that you might have configured on the Ops Manager Director Config page.

Pivotal Cloud Foundry: prerequisites

Before jumping in, it’s a good idea to make sure that you’ve covered all the prerequisites you’ll need to make Pivotal Cloud Foundry happy. You’ll need:

- Sufficient space on your workstation to store backup data from Pivotal.

- Administrator credentials for the existing Ops Manager, so that you can log in to the console.

- Contact details for users who may be affected by the backup.

- Pivotal Cloud Foundry support details, if you have a support subscription.

Pivotal Cloud Foundry: a backup strategy

Backing up a Pivotal installation is critical for the operation and availability of your Pivotal Cloud Foundry data center. Backing up Pivotal Cloud Foundry data centers is like creating restore points on a Windows machine. In the event of a crash or a failed upgrade, you can use your backups to fall back to an earlier, functional state. Here’s what you’ll need to do:

- Export installation settings from the Ops Manager console.

- Back up critical Pivotal databases: Cloud Controller Database (CCDB), User Account and Authentication (UAA) database, Pivotal MySQL database, and the Apps Manager Database (called the Console DB in versions prior to 1.5.x).

- Back up NFS Server data.

- Identify the target archive location for your backups (ideally, it should be in a centralized location that’s part of an NFS share so that other team members can also access it).

- Define backup frequency (daily, weekly, bi-weekly, monthly, etc.).

- Define your archival policy.

- Automate the backup process as much as possible to reduce human error.

- Define a notification process to inform all affected users and teams of pending process events.

- Define a restore process (and document it well).
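The frequency and archival-policy items above can be sketched in shell. This is a minimal sketch under stated assumptions, not part of Pivotal's tooling: the function names `backup_name` and `prune_backups` are hypothetical, the MM_DD_YYYY stamp simply mirrors the file names used later in this article, and the 30-day retention in the example comment is an assumed value you would replace with your own policy.

```shell
#!/bin/sh
# Hypothetical helpers; names and retention period are assumptions.

# backup_name PREFIX EXT [STAMP]
# Prints a dated file name such as ccdb_09_20_2015.sql.
# STAMP defaults to today's date in MM_DD_YYYY form.
backup_name() {
  stamp=${3:-$(date +%m_%d_%Y)}
  printf '%s_%s.%s\n' "$1" "$stamp" "$2"
}

# prune_backups DIR DAYS
# Deletes regular files directly inside DIR that were last modified
# more than DAYS days ago. Subdirectories are left alone.
prune_backups() {
  find "$1" -maxdepth 1 -type f -mtime +"$2" -exec rm -f {} +
}

# Example (not run here):
#   pg_dump ... > "/pcf-backup/$(backup_name ccdb sql)"
#   prune_backups /pcf-backup 30
```

Keeping the naming and pruning logic in two small functions makes it easy to call them from a cron job or a larger backup script.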

Pre-backup activities

To make sure that your system is ready for the process, there are some important details that will need taking care of in the pre-backup stage:

- Make sure the Ops Manager Director is healthy by checking its Status tab. It should not be displayed in red, as shown below:

- Make sure all your current VMs are healthy by checking the status tab in all individual tiles.

- Ensure that you have enough available space in your NFS share for backing up the files.

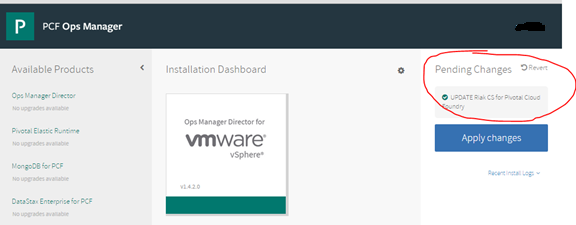

- Ensure there are no pending changes (see the diagram below for examples). If there are any pending changes, confirm whether or not the changes should be applied. Pending changes will not be backed up and, as we all know, not backing something up is a sure way to guarantee future disasters.

- Make sure that all of the component VMs (such as MySQL, CCDB, and NFS Server) are accessible and available before initiating a backup.
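The free-space check above is easy to script. The following is a minimal sketch: `has_free_kb` is a hypothetical helper name, and the 10 GB threshold in the example comment is an assumed figure, not a Pivotal recommendation.

```shell
#!/bin/sh
# Hypothetical pre-flight check; the function name is an assumption.

# has_free_kb DIR KB
# Succeeds if the filesystem holding DIR has at least KB kilobytes
# available (column 4 of the POSIX-format `df -Pk` output).
has_free_kb() {
  avail=$(df -Pk "$1" | awk 'NR == 2 { print $4 }')
  [ -n "$avail" ] && [ "$avail" -ge "$2" ]
}

# Example (not run here): require roughly 10 GB before backing up.
#   has_free_kb /pcf-backup 10485760 || echo "not enough space" >&2
```

Running the same check against the NFS share and the workstation backup path before each run catches the most common cause of truncated backup files.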

Backup Procedure

Backup/Export Installation Settings

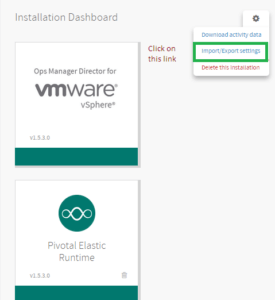

- In the dashboard page:

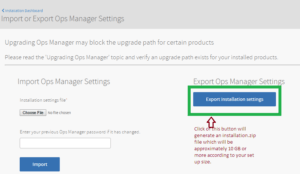

- Export the installation settings here:

- Save the installation.zip file to your desired location. As the file can be several GB in size, make sure you have enough space at the target location.

Back up the Cloud Controller Database (CCDB)

The Cloud Controller Database holds records of orgs, spaces, apps, services, service instances, user roles, etc. Backing up this database is critical if you want to protect your existing settings (and you DO want to protect your existing settings).

- From Ops Manager, copy the IP address of the Ops Manager Director. You can find it by clicking on Dashboard -> Ops Manager Director -> Status.

- Retrieve the Director credentials from the Credentials tab.

- From a command line, target the BOSH Director using the IP address and credentials that you have recorded.

$ bosh target <IP_OF_YOUR_OPS_MANAGER_DIRECTOR>

$ bosh login

Your username: director
Enter password:
Logged in as `director'

- Save the list of deployments to your backup path (I’m using “/pcf-backup”):

$ bosh deployments >> /pcf-backup/deployments_09_20_2015.txt

- Download the deployment manifest by running the following command from within /pcf-backup/poc-backup:

$ bosh download manifest DEPLOYMENT-NAME LOCAL-SAVE-NAME

For example:

$ bosh download manifest cf-1234xyzabcd1234 cf-backup-09_20_2015.yml

- Take the deployment name from the first entry starting with “cf” in the Name column of the .txt file.

- Run bosh deployment <DEPLOYMENT-MANIFEST> to set your deployment.

$ bosh deployment cf-backup-09_20_2015.yml

- Run bosh vms <DEPLOYMENT-NAME> to view all the VMs in your selected deployment.

$ bosh vms cf-1234xyzabcd1234

- Run bosh -d <DEPLOYMENT-MANIFEST> stop <SELECTED-VM> for each Cloud Controller VM.

$ bosh -d cf-backup-09_20_2015.yml stop cloud_controller-partition-cdabcd1234b253f40

$ bosh -d cf-backup-09_20_2015.yml stop cloud_controller_worker-partition-cdabcd1234b253f40

- Note the ccdb details in the cf-backup-09_20_2015.yml file:

ccdb:
  address: 1.2.99.16
  port: 2544
  db_scheme: postgres

- Look up the Cloud Controller Database VM credentials under Dashboard -> Elastic Runtime -> Credentials.

VM Credentials: vcap / xyz1234567989pqr

- SSH into the CCDB VM with your Cloud Controller Database VM credentials.

$ ssh vcap@<IP_ADDRESS_OF_CCDB>

- Run the following to find the locally installed psql client on the CCDB VM:

$ find /var/vcap | grep 'bin/psql'

Your output should look something like this:

/var/vcap/data/packages/postgres/b63fe0176a93609bd4ba44751ea490a3ee0f646c.1-9eea4f5b6de7b1d8fff28b94456f61e8e22740ce/bin/psql

- Run pg_dump from the same bin directory. The command will prompt for the admin password, which can be found in the .yml file (cf-backup-09_20_2015.yml):

$ /var/vcap/data/packages/postgres/<random-string>/bin/pg_dump -h 1.2.99.16 -U admin -p 2544 ccdb > ccdb_09_20_2015.sql

- Exit the SSH session, then run the following command from within the backup path on your local workstation:

$ scp vcap@1.2.99.16:/home/vcap/ccdb_09_20_2015.sql /pcf-backup

This completes the CCDB backup process.
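Because the package directory name contains a generated string, the find-then-dump step is a natural candidate for a small helper. The sketch below is one possible way to do it: `find_pg_tool` is a hypothetical name, and if several PostgreSQL packages are installed it simply returns whichever match `find` lists first.

```shell
#!/bin/sh
# Hypothetical helper; the function name is an assumption.

# find_pg_tool ROOT TOOL
# Prints the first regular file named TOOL that sits in a bin/
# directory somewhere under ROOT. Prints nothing if none is found.
find_pg_tool() {
  find "$1" -type f -path "*/bin/$2" 2>/dev/null | head -n 1
}

# Example on the CCDB VM (not run here; the address and port are the
# sample values from the manifest excerpt in this article):
#   PG_DUMP=$(find_pg_tool /var/vcap pg_dump)
#   "$PG_DUMP" -h 1.2.99.16 -U admin -p 2544 ccdb > ccdb_09_20_2015.sql
```

The same helper works for the UAA and Console database VMs, since they use the same package layout in the steps that follow.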

Back up your User Account and Authentication Database (UAADB)

- Get the UAA Database VM credentials from the Elastic Runtime credentials page:

VM Credentials: vcap / xxxxxxxxxxxx
Credentials: root / xxxxxxxxxxxxxxxxxx

- Retrieve your UAADB address from the cf-backup-09_20_2015.yml file and then log in to the UAADB VM:

$ ssh vcap@1.2.90.17

- Locate the psql client:

$ find /var/vcap | grep 'bin/psql'

- Based on the output of the above “find” operation, run pg_dump from the same directory:

$ /var/vcap/data/packages/postgres/<random-string>/bin/pg_dump -h 1.2.90.17 -U root -p 2544 uaa > uaa_09_20_2015.sql

- Provide the root password recorded above. Once the dump completes, exit the SSH session and, from the workstation backup path, copy the file from the UAADB VM:

$ scp vcap@1.2.90.17:/home/vcap/uaa_09_20_2015.sql /pcf-backup

This completes the UAADB backup process.

Back up your Console Database

The Console Database is referred to as the Apps Manager Database in Elastic Runtime 1.5.

- Retrieve your Apps Manager Database credentials from the Elastic Runtime credentials page:

VM Credentials: vcap / xxxxxxxxxxxxx
Credentials: root / xxxxxxxxxxxxxxxxxxx

- Get the Apps Manager Database VM address from the cf-backup-09_20_2015.yml file and log in to the VM:

$ ssh vcap@1.2.90.18

- Run these two commands (using the output of the find operation to locate pg_dump, as above):

$ find /var/vcap | grep 'bin/psql'

$ /var/vcap/data/packages/postgres/<random-string>/bin/pg_dump -h 1.2.90.18 -U root -p 2544 console > console_09_20_2015.sql

- Once the dump completes, exit the SSH session on the Console DB VM and, from the workstation backup path, copy the file from the Console DB VM:

$ scp vcap@1.2.90.18:/home/vcap/console_09_20_2015.sql /pcf-backup

This completes the Console Database backup process.

Back up your NFS Server

- Get the NFS Server VM credentials from the Elastic Runtime credentials page.

- Get the nfs_server address from the cf-backup-09_20_2015.yml file and log in to the VM via SSH:

$ ssh vcap@1.2.90.15

- Change to /var/vcap/store and create the archive (this will take some time to complete):

$ cd /var/vcap/store

$ tar cz shared > nfs_09_20_2015.tar.gz

- Exit from the NFS Server VM and copy the file to your backup path:

$ scp vcap@1.2.90.15:/var/vcap/store/nfs_09_20_2015.tar.gz /pcf-backup

This completes the NFS Server backup process.
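Since the NFS archive can be large and takes a while to copy, it is worth confirming that the copy is readable before relying on it. A minimal sketch, assuming a gzip-compressed tar file as produced above (`archive_ok` is a hypothetical helper name):

```shell
#!/bin/sh
# Hypothetical helper; the function name is an assumption.

# archive_ok FILE
# Succeeds if FILE is a non-empty gzip-compressed tar archive whose
# full listing can be read without errors.
archive_ok() {
  [ -s "$1" ] && tar tzf "$1" >/dev/null 2>&1
}

# Example (not run here):
#   archive_ok /pcf-backup/nfs_09_20_2015.tar.gz || echo "bad archive" >&2
```

Listing the archive reads it end to end, so a file truncated during the scp transfer fails this check.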

Back up your MySQL Database

- Get the MySQL deployment name from the deployments_09_20_2015.txt file saved earlier and download its manifest:

$ bosh download manifest p-mysql-abcd1234f2ad3752 mysql_09_20_2015.yml

- Create a MySQL dump:

$ mysqldump -u root -p -h 1.2.90.20 --all-databases > user_databases_09_20_2015.sql

- The host address and root password should match those in the manifest file, mysql_09_20_2015.yml.

This completes the MySQL DB backup process.
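A quick sanity check on the SQL dumps can catch a connection that dropped partway through. The sketch below assumes the tools' default output, where plain-format pg_dump and mysqldump each end the file with a trailer comment; options that suppress comments may remove that trailer, in which case this check does not apply. `dump_complete` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper; the function name is an assumption.

# dump_complete FILE
# Succeeds if the last few lines of FILE contain the trailer comment
# that pg_dump (plain format) or mysqldump writes by default when a
# dump finishes normally.
dump_complete() {
  [ -s "$1" ] || return 1
  tail -n 10 "$1" |
    grep -Eq -e '-- (PostgreSQL database dump complete|Dump completed)'
}

# Example (not run here):
#   dump_complete /pcf-backup/ccdb_09_20_2015.sql || echo "incomplete dump" >&2
```

This only shows that the tool reached the end of its run; it does not validate the contents, so a periodic test restore is still worthwhile.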

Post-backup activities

- Start the Cloud Controller and Cloud Controller worker VMs.

$ bosh -d cf-backup-09_20_2015.yml start cloud_controller-partition-cdabcd1234b253f40

$ bosh -d cf-backup-09_20_2015.yml start cloud_controller_worker-partition-cdabcd1234b253f40

- Confirm that the cloud controller is up and running.
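When the backup is scripted, the easy mistake is leaving the Cloud Controller stopped because a step in the middle failed. One way to guard against that is a wrapper that always runs the start step. This is a sketch only: `with_jobs_stopped`, `stop_cc`, `start_cc`, and `backup_databases.sh` are hypothetical names, and the wrapper does not handle interruption by signals such as Ctrl-C.

```shell
#!/bin/sh
# Hypothetical wrapper; all names here are assumptions.

# with_jobs_stopped STOP_FN START_FN CMD [ARGS...]
# Calls STOP_FN, runs CMD, then calls START_FN whether or not CMD
# succeeded. Returns CMD's exit status so a failed backup is still
# reported. Returns 1 if stopping or starting itself fails.
with_jobs_stopped() {
  stop_fn=$1
  start_fn=$2
  shift 2
  "$stop_fn" || return 1
  status=0
  "$@" || status=$?
  "$start_fn" || return 1
  return "$status"
}

# Example wiring (not run here), reusing the commands from this article:
#   stop_cc()  { bosh -d cf-backup-09_20_2015.yml stop cloud_controller-partition-cdabcd1234b253f40; }
#   start_cc() { bosh -d cf-backup-09_20_2015.yml start cloud_controller-partition-cdabcd1234b253f40; }
#   with_jobs_stopped stop_cc start_cc ./backup_databases.sh
```

Passing the stop and start steps as functions keeps the wrapper independent of the BOSH command syntax, which makes it straightforward to dry-run with stand-in functions.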

Pivotal Cloud Foundry: the restore process

Restoring a Pivotal Cloud Foundry deployment requires that you restore your installation settings and key system databases. Or, in other words, everything we backed up in the previous operations. You’ll need to follow these steps:

- Import the installation settings zip file (i.e., installation.zip) under PCF Ops Manager > Settings.

- Once the installation restore process is complete, check the status of all the jobs in the Elastic Runtime > Status tab.

- If all the jobs are healthy, then restore the backed up DBs.

We’ll use the UAADB as an example. The rest will follow the same process.

- Stop UAA:

$ bosh stop <uaa job>

- Look up and then drop all the tables in the UAADB, as follows.

- Log in to the UAADB VM as the vcap user, using the password from the Elastic Runtime credentials page.

$ ssh vcap@[uaadb vm ip]

- Connect to the uaa database with the psql client on the VM:

$ /var/vcap/data/packages/postgres/<random-string>/bin/psql -U vcap -p 2544 uaa

- Run these commands to drop the tables:

drop schema public cascade;
create schema public;

- Use the same password and IP address that were used to back up the UAA database. Copy the dump file to the UAADB server:

$ scp uaa.sql vcap@[uaadb vm IP]:

Then, on the UAADB server, restore the UAA database:

$ /var/vcap/data/packages/postgres/<random-string>/bin/psql -U vcap -p 2544 uaa < uaa.sql

- Restart the UAA job:

$ bosh start <uaa job>

- To restore the backed up Pivotal Cloud Foundry NFS Server, simply copy the contents of your backup to /var/vcap/store on the “NFS Server” VM.

A Pivotal Cloud Foundry backup process can be scheduled (and scripted) to create restore points for your installation. You could also use the settings file backups to launch a new installation in a different availability zone or even on a different platform. Pivotal has provided excellent documentation on both the backup and restore processes.
