Implementing Lifecycle Policies and Versioning will minimise data loss.

Following on from last week’s look at Security within S3, I want to continue looking at this service. This week I’ll explain how implementing Lifecycle Policies and Versioning can help you minimise data loss. After reading, I hope you’ll better understand ways of retaining and securing your most critical data.

We will also look at ways of preventing accidental object deletion, and finally I’ll review how to encrypt the data in your S3 objects.

Lifecycle Policies

Implementing Lifecycle policies within S3 is a great way of ensuring your data is managed safely (without incurring unnecessary costs) and that your data is cleanly deleted once it is no longer required. Lifecycle policies allow you to automatically review the objects within your S3 Buckets and have them moved to a cheaper storage class such as Glacier, or deleted from S3 altogether. You may want to do this for security, legislative compliance, internal policy compliance, or general housekeeping.

Implementing good lifecycle policies will help you increase your data security. Good lifecycle policies can ensure that sensitive information is not retained for longer than necessary. These policies can also archive data into AWS Glacier, where additional security features are available when needed. Glacier is often used as a ‘cold storage’ solution for information that needs to be retained but is rarely accessed, and it offers significantly cheaper storage than S3.

Lifecycle policies are implemented at the Bucket level, and each Bucket’s lifecycle configuration can contain up to 1,000 rules. Different rules within the same Bucket can affect different objects through the use of object ‘prefixes’. The rules are checked and run automatically, with no manual start required. One important note on this automation: lifecycle policies may not take effect immediately after you set them up, because the configuration may need to propagate across the S3 service. Keep this in mind when you first check that your automation is live.
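To make prefix-based rules more concrete, here is a minimal Python sketch of how a rule’s prefix selects objects. It assumes a rule applies whenever the object key starts with the rule’s prefix, with an empty prefix covering the whole Bucket. The rule IDs, keys and the `LifecycleRule` class are hypothetical, and real S3 filters can also use object tags, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LifecycleRule:
    """Hypothetical, simplified rule: an ID, a key prefix and a description of its action."""
    rule_id: str
    prefix: str  # an empty prefix covers the whole Bucket
    action: str


def matching_rules(key: str, rules: List[LifecycleRule]) -> List[str]:
    """Return the IDs of every rule whose prefix matches the start of the object key."""
    return [rule.rule_id for rule in rules if key.startswith(rule.prefix)]


rules = [
    LifecycleRule("expire-logs", "logs/", "delete after 14 days"),
    LifecycleRule("archive-reports", "reports/2015/", "move to Glacier"),
]

print(matching_rules("logs/app-2016-01-15.log", rules))  # ['expire-logs']
print(matching_rules("reports/2015/q4.pdf", rules))      # ['archive-reports']
print(matching_rules("images/logo.png", rules))          # []
```

Because matching is a plain “starts with” test, a prefix such as `logs/` behaves like a subfolder, while a full key acts as a rule for one specific object.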

The policies can be set up either via the AWS Console or the S3 API. In this article I will show you how to set up a lifecycle policy using the AWS Console. Cloud Academy has a short “overview” blog post comparing Amazon S3 vs Amazon Glacier that was published early last year, which I think is worth a read.

Setting up a Lifecycle Policy in S3

- Log into your AWS Console and select ‘S3’

- Navigate to your Bucket where you want to implement the Lifecycle Policy

- Click on ‘Properties’ and then ‘Lifecycle’

From here you can begin adding the rules that will make up your policy. At this point there are no rules set up yet.

- Click ‘Add rule’

- From here you can set up a policy either for the ‘Whole Bucket’ or for prefixed objects. When using prefixes, you can set up multiple policies within the same Bucket. You can set a prefix to target a subfolder within the Bucket, or a specific object, which gives you a more refined set of policies.

For this example, I will select ‘Whole Bucket’ and click ‘Configure Rule’.

- I now have 3 options to choose from:

o Move the objects to the Standard-IA class within S3. Standard-IA stands for ‘Infrequent Access’. (More information on this class can be found in this Amazon article.)

o Archive the objects to Glacier

o Permanently Delete the objects

- For the purpose of this example I will select ‘Permanently Delete’. For security reasons, I may want this data removed 14 days after each object’s creation date, so I will enter ‘14’ days and select ‘Review’.

- Give the rule a name and review your summary. If satisfied, click ‘Create and Activate Rule’

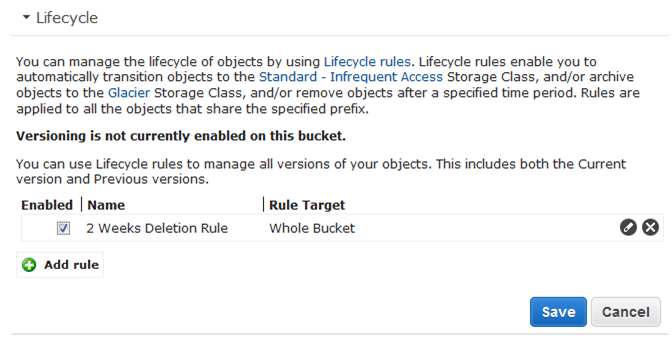

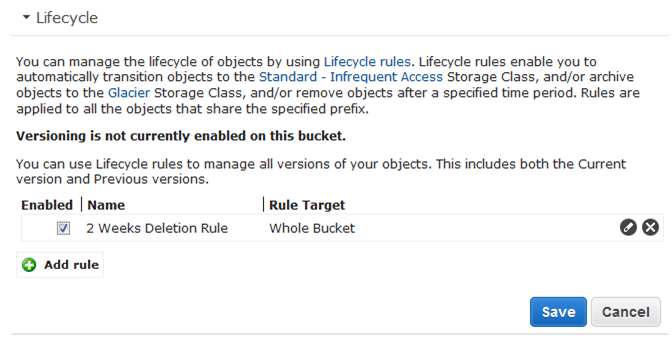

The Lifecycle section of your Bucket will now show you the new rule that you just created.
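The same ‘Whole Bucket, delete after 14 days’ rule can also be created through the S3 API. The sketch below only builds the lifecycle configuration as a Python dictionary, in the shape accepted by boto3’s `put_bucket_lifecycle_configuration` call. The rule ID is a hypothetical name, and the commented-out call assumes boto3 is installed and AWS credentials are configured.

```python
import json


def build_expiration_rule(rule_id: str, days: int, prefix: str = "") -> dict:
    """Build a lifecycle configuration with one rule that permanently deletes
    objects `days` days after creation. An empty prefix covers the whole Bucket."""
    if days < 1:
        raise ValueError("Expiration days must be a positive integer")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Expiration": {"Days": days},
            }
        ]
    }


config = build_expiration_rule("delete-after-14-days", 14)
print(json.dumps(config, indent=2))

# Applying it (assumes boto3 and valid credentials):
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="cloud-academy", LifecycleConfiguration=config)
```

Keeping the configuration as plain data makes it easy to review or version-control before applying it, much like the console’s ‘Review’ step.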

My Bucket will now apply this policy to all of its objects and enforce the rule. Any objects in this Bucket older than 14 days will be deleted once the policy has propagated and S3 has processed the rule. If I create a new object in this Bucket today, it will automatically be deleted after 14 days.
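AWS documents that S3 works out an expiration date by adding the rule’s number of days to the object’s creation time and rounding the result up to the following midnight UTC, so ‘deleted in 14 days’ is accurate only to within a day. Here is a minimal sketch of that calculation, assuming the creation time is known. Note that the actual deletion is also carried out asynchronously, so it can happen some time after this date.

```python
from datetime import datetime, timedelta, timezone


def expiration_time(created: datetime, days: int) -> datetime:
    """Approximate when an expiration rule makes an object eligible for deletion:
    creation time + `days`, rounded up to the following midnight UTC."""
    shifted = created.astimezone(timezone.utc) + timedelta(days=days)
    next_day = shifted.date() + timedelta(days=1)
    return datetime(next_day.year, next_day.month, next_day.day, tzinfo=timezone.utc)


created = datetime(2016, 1, 15, 10, 30, tzinfo=timezone.utc)
print(expiration_time(created, 14))  # 2016-01-30 00:00:00+00:00
```

This sketch always rounds up to the next midnight, even when the shifted time already falls exactly on midnight.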

This measure ensures that I am not saving confidential (or sensitive) data unnecessarily. It also allows me to reduce costs by automatically removing unnecessary data from S3 for me. This is a win-win.

If I had chosen to archive my data into AWS Glacier instead, I could have taken advantage of its much cheaper storage ($0.01 per GB per month at the time of writing) compared to S3. Doing this would allow me to maintain tight security through the use of IAM user policies and Glacier vault access policies, which allow or deny access requests for different users. Glacier also supports WORM (Write Once Read Many) compliance through a Vault Lock policy. Once a Vault Lock policy is locked it can no longer be changed, which effectively freezes that vault’s compliance controls (for example, preventing archives from being deleted).

Amazon has good information on Vault Lock and provides more detailed info on Vault Policies for anyone who wants to dive a bit deeper.

Lifecycle policies help manage and automate the life of your objects within S3, preventing you from leaving data available unnecessarily. They make it possible to choose cheaper storage options when your data needs to be retained, while at the same time taking advantage of Glacier’s additional security controls.
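If you would rather retain data than delete it, a rule can use transitions instead of an expiration. Here is a sketch of such a configuration, again built only as a dictionary in the shape boto3’s `put_bucket_lifecycle_configuration` accepts. The rule ID, prefix and day counts are hypothetical values chosen for illustration. The 30-day check reflects S3’s documented minimum age for moving objects into Standard-IA.

```python
def build_archive_rule(rule_id: str, prefix: str, ia_days: int, glacier_days: int) -> dict:
    """Build a lifecycle configuration that moves objects under `prefix` to
    Standard-IA after `ia_days` and on to Glacier after `glacier_days`."""
    if ia_days < 30:
        # S3 does not allow transitions to Standard-IA earlier than 30 days after creation.
        raise ValueError("Standard-IA transitions need at least 30 days")
    if glacier_days <= ia_days:
        raise ValueError("The Glacier transition must come after the Standard-IA one")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


config = build_archive_rule("archive-reports", "reports/", ia_days=30, glacier_days=90)
print([t["StorageClass"] for t in config["Rules"][0]["Transitions"]])  # ['STANDARD_IA', 'GLACIER']
```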

S3 Versioning

S3 Versioning, as the name implies, allows you to “version control” the objects within your Bucket. This allows you to recover from unintended user changes and actions (including deletions) that might occur through misuse or corruption. Enabling Versioning on a Bucket keeps multiple copies of each object: each time an object changes, a new version of it is created and becomes the current ‘version’.

One thing to be wary of with Versioning is the additional storage costs applied in S3. Storing multiple copies of the same object will use additional space and increase your storage costs.
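A toy in-memory model shows both points: every write adds a version instead of overwriting, and storage grows with every version kept. The `VersionedBucket` class and its methods are hypothetical and only mimic the behaviour described here; they are not the S3 API.

```python
import itertools
from typing import Dict, List, Optional, Tuple


class VersionedBucket:
    """Hypothetical model: each key maps to a list of (version_id, data) tuples."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[Tuple[str, bytes]]] = {}
        self._ids = itertools.count(1)

    def put(self, key: str, data: bytes) -> str:
        version_id = f"v{next(self._ids)}"
        self._versions.setdefault(key, []).append((version_id, data))
        return version_id

    def get(self, key: str, version_id: Optional[str] = None) -> bytes:
        history = self._versions[key]
        if version_id is None:
            return history[-1][1]  # the latest version is the current one
        return next(data for vid, data in history if vid == version_id)

    def stored_bytes(self) -> int:
        # Every retained version counts towards storage, not just the current one.
        return sum(len(data) for history in self._versions.values() for _, data in history)


bucket = VersionedBucket()
first = bucket.put("Cloud-1.rtf", b"original text")
bucket.put("Cloud-1.rtf", b"modified text!")
print(bucket.get("Cloud-1.rtf"))         # b'modified text!'
print(bucket.get("Cloud-1.rtf", first))  # b'original text'
print(bucket.stored_bytes())             # 27
```

Even though only one version is ‘current’, the 13-byte original and the 14-byte update are both stored, which is where the extra cost comes from.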

Once Versioning on a Bucket is enabled, it can’t be disabled – only suspended.
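The S3 API reflects this rule. The versioning status you can request is only `Enabled` or `Suspended`, and a Bucket that has never been versioned simply reports no status. Here is a minimal sketch of that behaviour; the `BucketVersioning` class is a hypothetical model, not an SDK type.

```python
from typing import Optional


class BucketVersioning:
    """Hypothetical model of a Bucket's versioning status."""

    VALID_STATUSES = ("Enabled", "Suspended")

    def __init__(self) -> None:
        self.status: Optional[str] = None  # never versioned: no status reported

    def set_status(self, status: str) -> None:
        # Only Enabled and Suspended can be requested, so there is no way back
        # to the never-versioned state once Versioning has been turned on.
        if status not in self.VALID_STATUSES:
            raise ValueError(f"Unsupported versioning status: {status!r}")
        self.status = status


bucket = BucketVersioning()
bucket.set_status("Enabled")
bucket.set_status("Suspended")
try:
    bucket.set_status("Disabled")
except ValueError as err:
    print(err)  # Unsupported versioning status: 'Disabled'
print(bucket.status)  # Suspended
```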

Setting up Versioning on an S3 Bucket

- Log into your AWS Console and select ‘S3’

- Navigate to your Bucket where you want to implement Versioning

- Click on ‘Properties’ and then ‘Versioning’

- Click ‘Enable Versioning’

- Click ‘OK’ to the confirmation message

- Versioning is now enabled on your Bucket. You will notice that you can now only ‘Suspend’ Versioning

From now on, whenever you change an object within the Bucket, a new version of that object will be created.

Displaying Different Versions

Continuing with the example above, where Versioning was enabled on the cloud-academy Bucket, I then modified the object ‘Cloud-1.rtf’.

This object should now have 2 versions, the original version and the new modified version.

To see different versions of an object within your bucket follow the steps below:

- From within the Bucket, you will notice you now have 2 new buttons at the top of the screen under ‘Versions: Hide & Show’

- Simply click on ‘Show’ to view the different versions of all the objects within the Bucket. In my case, a new Version has been created following my update to the file, and the listing displays the date and time of that update.

If you enable Versioning on a Bucket that already contains objects, the Version ID of those existing objects will be ‘null’. They only receive a new Version ID when they are updated for the first time. However, any new objects uploaded after Versioning has been enabled receive a Version ID immediately.

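From here I can choose either to delete the new Version and roll back to the previous version, or to open either Version of the document. Note the difference between the two kinds of deletion. Deleting an object without specifying a version only adds a ‘delete marker’, so the earlier versions remain and an accidentally deleted object can be recovered. Deleting a specific Version, by contrast, permanently removes that Version and is limited to users with permission to delete object versions (such as the Bucket Owner).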
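This recovery behaviour can be modelled in a few lines. The following hypothetical sketch (not the S3 API) makes three assumptions: a plain delete adds a delete marker on top of the version history, an object uploaded before Versioning was enabled keeps the version ID `null`, and rolling back means permanently deleting the newest version so that the previous one becomes current again.

```python
from typing import List, Optional, Tuple

DELETE_MARKER = None  # stands in for an S3 delete marker in this model


class VersionHistory:
    """Hypothetical model of the versions stored under one key, oldest first."""

    def __init__(self, existing: Optional[bytes] = None) -> None:
        self.versions: List[Tuple[str, Optional[bytes]]] = []
        if existing is not None:
            # An object that existed before Versioning was enabled has version ID "null".
            self.versions.append(("null", existing))
        self._counter = 0

    def _next_id(self) -> str:
        self._counter += 1
        return f"v{self._counter}"

    def put(self, data: bytes) -> None:
        self.versions.append((self._next_id(), data))

    def delete(self) -> None:
        # A delete without a version ID only adds a marker; nothing is erased.
        self.versions.append((self._next_id(), DELETE_MARKER))

    def delete_version(self, version_id: str) -> None:
        # Deleting a specific version ID is the permanent operation.
        self.versions = [v for v in self.versions if v[0] != version_id]

    def current(self) -> Optional[bytes]:
        return self.versions[-1][1] if self.versions else None


doc = VersionHistory(existing=b"draft 1")  # uploaded before Versioning
doc.put(b"draft 2")
doc.delete()                               # accidental delete
print(doc.current())                       # None (the delete marker is current)
doc.delete_version(doc.versions[-1][0])    # remove the marker to recover
print(doc.current())                       # b'draft 2'
doc.delete_version(doc.versions[-1][0])    # roll back to the original
print(doc.current(), doc.versions[-1][0])  # b'draft 1' null
```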

If you click ‘Hide’, the S3 Bucket will show only the latest version of the documents within the Bucket.

Multi-Factor Authentication (MFA) Delete

As an additional layer of security for sensitive data, you can enable MFA Delete on a versioned Bucket. Before anyone can permanently delete an object version or change the Bucket’s versioning state, MFA Delete requires an extra authentication factor. This comes from an authentication device, which typically displays a random 6 digit code to be used in conjunction with your AWS Security Credentials. This additional layer of security is great if your AWS credentials are ever breached: an attacker would still need your associated MFA device to permanently delete your versioned data. For details on implementing this level of security on your Bucket, Amazon has a solid article.
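In the S3 API, MFA Delete is switched on through the Bucket’s versioning configuration. The request must also carry the MFA device’s serial number and its current code, separated by a space. The sketch below only builds those two values. The device ARN and code are made-up placeholders, and the commented-out boto3 call assumes the Bucket owner’s root credentials, which AWS requires for changing MFA Delete.

```python
import re
from typing import Dict, Tuple


def mfa_delete_request(device_serial: str, code: str) -> Tuple[str, Dict[str, str]]:
    """Return the MFA string and versioning configuration used to enable MFA Delete."""
    if not re.fullmatch(r"\d{6}", code):
        raise ValueError("The MFA code should be the 6 digits shown on the device")
    mfa = f"{device_serial} {code}"  # serial number, a space, then the current code
    config = {"Status": "Enabled", "MFADelete": "Enabled"}
    return mfa, config


mfa, config = mfa_delete_request("arn:aws:iam::123456789012:mfa/root-account-mfa-device", "123456")
print(mfa)  # arn:aws:iam::123456789012:mfa/root-account-mfa-device 123456

# Applying it (assumes boto3 and the Bucket owner's root credentials):
# import boto3
# boto3.client("s3").put_bucket_versioning(
#     Bucket="cloud-academy", MFA=mfa, VersioningConfiguration=config)
```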

S3 Encryption

Data protection is a hot topic within the Cloud industry, and any service that allows data to be encrypted attracts attention. S3 allows you to encrypt data both at rest and in transit.

To encrypt data in transit, you can use Secure Sockets Layer (SSL/TLS) and Client Side Encryption (CSE). Protecting your data at rest can be done with Client Side Encryption (CSE) and Server Side Encryption (SSE).

Client Side Encryption

Client Side Encryption allows you to encrypt the data locally before it is sent to the S3 service. Likewise, decryption happens locally on the client side.

You have 2 options to implement CSE:

Option 1, use a Client Side master key.

Option 2, use an AWS KMS managed customer master key.

The Client Side master key works in conjunction with several AWS SDKs, including Java, .NET and Ruby. It works by supplying a master key that encrypts a random data key for each S3 object you upload. The data key encrypts the data, and the master key encrypts the data key. The client then uploads (PUT) the encrypted data and the encrypted data key to S3, with modified metadata and description information. When the object is downloaded (GET), the client uses the metadata and description information to determine which master key to use, decrypts the data key with it, and then uses the data key to decrypt the object.
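The envelope pattern described above can be sketched with the Python standard library: a fresh data key encrypts each object, and the master key encrypts the data key. To stay dependency-free, this sketch uses a toy HMAC-SHA256 keystream cipher in place of AES and provides no integrity protection. It therefore illustrates the key handling only and must not be used to protect real data. The function names and metadata field names are hypothetical, not those of the AWS SDKs.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Tuple


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with HMAC-SHA256(key, nonce || counter) blocks.
    Encryption and decryption are the same operation. Illustration only."""
    out = bytearray()
    for start in range(0, len(data), 32):
        counter = (start // 32).to_bytes(8, "big")
        block = hmac.new(key, nonce + counter, hashlib.sha256).digest()
        out.extend(b ^ k for b, k in zip(data[start:start + 32], block))
    return bytes(out)


def client_side_encrypt(master_key: bytes, plaintext: bytes) -> Tuple[bytes, Dict[str, bytes]]:
    data_key = secrets.token_bytes(32)  # fresh random data key per object
    data_nonce, key_nonce = secrets.token_bytes(16), secrets.token_bytes(16)
    ciphertext = keystream_xor(data_key, data_nonce, plaintext)   # data key encrypts data
    wrapped_key = keystream_xor(master_key, key_nonce, data_key)  # master key encrypts data key
    metadata = {"encrypted-data-key": wrapped_key, "key-nonce": key_nonce, "data-nonce": data_nonce}
    return ciphertext, metadata  # both would be uploaded (PUT) to S3


def client_side_decrypt(master_key: bytes, ciphertext: bytes, metadata: Dict[str, bytes]) -> bytes:
    data_key = keystream_xor(master_key, metadata["key-nonce"], metadata["encrypted-data-key"])
    return keystream_xor(data_key, metadata["data-nonce"], ciphertext)


master_key = secrets.token_bytes(32)  # stays on the client; lose it and the data is gone
body, meta = client_side_encrypt(master_key, b"confidential report")
print(client_side_decrypt(master_key, body, meta))  # b'confidential report'
```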

If you lose your master key, then you will not be able to decrypt your data. Don’t let this happen to you.

AWS KMS Managed Customer Master Key

This method of CSE uses an additional AWS service, the Key Management Service (KMS), to manage the encryption keys instead of relying on the SDK client to do so. You only need your CMK ID (Customer Master Key ID), and the rest of the encryption process is handled by the client. A request for encryption is sent to KMS, which issues 2 different versions of a data encryption key for your object. One version is plain text and is used to encrypt your data. The second is a cipher blob of the same key, which is uploaded (PUT) with your object within its metadata.

When performing a GET request to download your object, the object is downloaded along with the cipher blob encryption key from the metadata. This key is then sent back to KMS, which returns the same key in plain text so that the client can decrypt the object data.
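The KMS variant follows the same envelope pattern, except that the data key comes from KMS and the CMK never leaves it. In the sketch below, `FakeKMS` is a hypothetical local stand-in whose `generate_data_key` and `decrypt` methods loosely mirror the shape of the real KMS GenerateDataKey and Decrypt operations: a plain-text key plus a cipher blob. It wraps keys with a toy HMAC-based keystream cipher, so it is for illustration only and is not the AWS SDK.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Tuple


def xor_stream(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy HMAC-SHA256 keystream cipher (illustration only, no integrity checks)."""
    out = bytearray()
    for i in range(0, len(data), 32):
        block = hmac.new(key, nonce + i.to_bytes(8, "big"), hashlib.sha256).digest()
        out.extend(b ^ k for b, k in zip(data[i:i + 32], block))
    return bytes(out)


class FakeKMS:
    """Hypothetical stand-in for KMS: the CMKs never leave this object."""

    def __init__(self) -> None:
        self._cmks: Dict[str, bytes] = {}

    def create_key(self) -> str:
        key_id = f"cmk-{len(self._cmks) + 1}"
        self._cmks[key_id] = secrets.token_bytes(32)
        return key_id

    def generate_data_key(self, key_id: str) -> Tuple[bytes, bytes]:
        plaintext = secrets.token_bytes(32)
        nonce = secrets.token_bytes(16)
        blob = key_id.encode() + b"|" + nonce + xor_stream(self._cmks[key_id], nonce, plaintext)
        return plaintext, blob  # plain key for the client, cipher blob for the metadata

    def decrypt(self, blob: bytes) -> bytes:
        key_id, rest = blob.split(b"|", 1)  # key IDs in this model never contain "|"
        nonce, wrapped = rest[:16], rest[16:]
        return xor_stream(self._cmks[key_id.decode()], nonce, wrapped)


kms = FakeKMS()
cmk_id = kms.create_key()

# PUT: encrypt locally with the plain data key; store only the cipher blob with the object.
data_key, blob = kms.generate_data_key(cmk_id)
nonce = secrets.token_bytes(16)
stored = {"body": xor_stream(data_key, nonce, b"quarterly figures"), "key-blob": blob, "nonce": nonce}

# GET: send the cipher blob back to "KMS" for the plain data key, then decrypt locally.
recovered_key = kms.decrypt(stored["key-blob"])
print(xor_stream(recovered_key, stored["nonce"], stored["body"]))  # b'quarterly figures'
```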

Amazon provides detailed information on KMS (Key Management Service) that you can review before making any choices.

Server Side Encryption (SSE)

Server Side Encryption offers encryption for data objects at rest within S3 using 256-bit AES encryption (often referred to as AES-256). One benefit of SSE is that, if you choose, the whole encryption process can be managed by AWS. This option requires no setup by the end user other than specifying that the object should use SSE. Unlike with Client Side Encryption, you don’t need to manage any master keys if you don’t want to.

I say ‘if you don’t want to’ because there are 3 options for SSE. One option is SSE-S3, which lets AWS manage all the encryption for you, including management of the keys. This can be activated within the AWS Console for each object.

Setting SSE for an object within the AWS Console

- Select the S3 Service

- Navigate to the Bucket where you are uploading your object

- Select ‘Upload’

- Add your files to upload and select ‘Set Details’

- Tick the box labelled ‘Use Server Side Encryption’

- Select the radio button titled ‘Use the Amazon S3 service master key’

- Select ‘Start Upload’

This object will then be uploaded with SSE enabled.

Once it is uploaded, you can check the object’s properties and select the ‘Details’ tab to verify this.

You can also specify SSE when using the REST API.
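With the REST API, SSE-S3 is requested per object by sending the `x-amz-server-side-encryption: AES256` header on the PUT request. boto3 exposes the same setting as `ServerSideEncryption="AES256"` on `put_object`. The sketch below only assembles the headers for such a request. The helper name is hypothetical, and request signing is left out.

```python
from typing import Dict


def sse_s3_put_headers(content_type: str, body: bytes) -> Dict[str, str]:
    """Build headers for a PUT Object request that asks S3 to apply SSE-S3 (AES-256)."""
    return {
        "Content-Type": content_type,
        "Content-Length": str(len(body)),
        "x-amz-server-side-encryption": "AES256",
    }


headers = sse_s3_put_headers("application/rtf", b"{\\rtf1 hello}")
print(headers["x-amz-server-side-encryption"])  # AES256
```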

The other 2 options allow you to manage Keys and are known as SSE-KMS and SSE-C. Additional information on these encryption methods can be found on the AWS documentation pages.
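To give a feel for SSE-C, where you supply the key yourself on every request, here is a sketch that computes the three SSE-C request headers. These are the algorithm, the base64-encoded 256-bit key, and the base64-encoded MD5 digest of that key, which S3 uses to check the key arrived intact. The helper is hypothetical. S3 does not store the key itself, so losing it means losing access to the object.

```python
import base64
import hashlib
import secrets
from typing import Dict


def sse_c_headers(key: bytes) -> Dict[str, str]:
    """Build the SSE-C headers sent on PUT and GET requests for a customer-provided key."""
    if len(key) != 32:
        raise ValueError("SSE-C needs a 256-bit (32-byte) key")
    return {
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        "x-amz-server-side-encryption-customer-key": base64.b64encode(key).decode(),
        "x-amz-server-side-encryption-customer-key-MD5":
            base64.b64encode(hashlib.md5(key).digest()).decode(),
    }


customer_key = secrets.token_bytes(32)  # keep this safe: S3 does not store it
headers = sse_c_headers(customer_key)
print(sorted(headers))
```

The same three headers have to be sent again on every GET for that object, which is the key-management burden SSE-S3 avoids.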

5 key elements we have learned this week:

- Lifecycle policies are a great way to automatically manage your objects, whether from a security, legislative compliance, internal policy compliance, or general housekeeping perspective

- Lifecycle policies will assist with cost optimisation

- Versioning in S3 is a great way to prevent accidental deletion or corruption of data by storing all versions of documents that are modified

- Versioning can be suspended on a Bucket once enabled but not disabled

- Encryption can be enabled on objects within S3, from either the Client or the Server side, to ensure the data is not compromised

Next week I shall be moving away from S3 and will provide an overview of the Key Management Service (KMS).

Thank you for taking the time to read my article. If you have any feedback please leave a comment below.

Cloud Academy has an intermediate course on Automated data management with EBS, S3 and Glacier. The course has 7 parts and includes about 45 minutes of video. If you are curious and want to dive deeper, Cloud Academy has a 7-day free trial, so you could easily work through this course before committing any money.

I welcome your interaction and hope users find ways of sharing how they have used some of these options in their working lives.