Amazon S3 is one of the most common storage options for many organizations. As an object storage service, it is used for a wide variety of data types, from the smallest objects to huge datasets, in a highly available and resilient environment. Your S3 objects are likely being read and accessed by your applications, other AWS services, and end users, but is your use of S3 optimized for the best performance? This post discusses some of the mechanisms and techniques you can apply to get optimal performance from Amazon S3.

How to optimize Amazon S3 performance: Four best practices

1. TCP Window Scaling

TCP window scaling can enhance your network throughput by adding a window scale option to the TCP header. The header's 16-bit window field limits the receive window to 65,535 bytes (64 KB). The window scale option multiplies that value by a power of two, so a receiver can advertise a much larger window and the sender can have more data in flight before it must wait for an acknowledgment. This isn't specific to Amazon S3: it operates at the protocol level, so you can use window scaling on your client when connecting to any server over TCP. More information can be found in RFC-1323 (since superseded by RFC 7323).

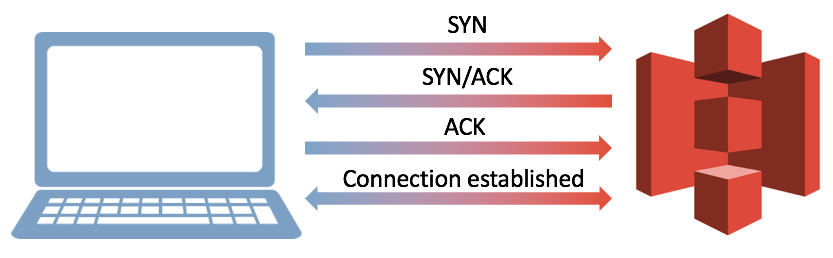

When TCP establishes a connection between a source and a destination, a three-way handshake takes place, initiated by the source (the client). From an S3 perspective, your client might need to upload an object to S3, and before it can do so it must open a connection to the S3 servers. The client sends a TCP segment with the SYN flag set, including its window scale factor as an option in the header; this SYN is part 1 of the three-way handshake. S3 receives this request and responds with a SYN/ACK carrying its own supported window scale factor, which is part 2. In part 3, the client sends an ACK back to the S3 server acknowledging the response. Once the handshake is complete, the connection is established and data can be sent between the client and S3. Because the scale factor can only be exchanged in the SYN and SYN/ACK, window scaling is used only if both sides include the option during the handshake; it cannot be switched on later in the connection.
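To make the negotiation rule concrete, here is a minimal Python model of the handshake outcome. It assumes the simplified rules of RFC 7323: scaling applies only when both the SYN and the SYN/ACK carry the option, shift counts are capped at 14, and each side's advertised window is the 16-bit header value shifted left by that side's own announced shift. The function names are hypothetical, and nothing here touches a real socket; the operating system performs this negotiation for you.

```python
from typing import Optional, Tuple

MAX_SHIFT = 14  # RFC 7323: shift counts above 14 are treated as 14


def negotiate_window_scale(
    syn_shift: Optional[int], syn_ack_shift: Optional[int]
) -> Tuple[int, int]:
    """Return (client_shift, server_shift) in effect after the handshake.

    syn_shift / syn_ack_shift are the shift counts carried in the window
    scale option of the SYN and SYN/ACK, or None if the option was absent.
    Scaling is enabled only when BOTH segments carry the option; otherwise
    both directions fall back to unscaled 16-bit windows (shift 0).
    """
    if syn_shift is None or syn_ack_shift is None:
        return 0, 0
    return min(syn_shift, MAX_SHIFT), min(syn_ack_shift, MAX_SHIFT)


def effective_window(window_field: int, shift: int) -> int:
    """Window in bytes: the 16-bit header field shifted left by the sender's shift."""
    return window_field << shift


client_shift, server_shift = negotiate_window_scale(7, 9)
print(effective_window(65_535, client_shift))  # 8388480 (about 8 MB)
```

If the S3 side (or any middlebox) strips the option from the SYN/ACK, the model falls back to a shift of 0 in both directions, which is why the option has to be offered at connection setup.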

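By increasing the effective window size with a scale factor, window scaling allows more unacknowledged data to be in flight at once, so the sender spends less time idle waiting for acknowledgments and can send data at a higher rate. This matters most on connections with high bandwidth and long round-trip times, where a 64 KB window would otherwise cap throughput.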
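The effect is easy to quantify: a single connection can deliver at most one window of data per round trip, so throughput is bounded by window / RTT. The sketch below computes that bound and the smallest shift count whose scaled window covers the bandwidth-delay product. The 100 ms round trip and 1 Gbit/s link are illustrative assumptions, not measured S3 figures.

```python
MAX_UNSCALED_WINDOW = 65_535  # largest value the 16-bit window field can hold
MAX_SHIFT = 14                # largest shift count allowed by RFC 7323


def max_throughput_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on single-connection throughput: one window per round trip."""
    return window_bytes * 8 / rtt_seconds


def required_shift(bandwidth_bps: float, rtt_seconds: float) -> int:
    """Smallest shift whose scaled window covers the bandwidth-delay product."""
    bdp_bytes = bandwidth_bps * rtt_seconds / 8
    for shift in range(MAX_SHIFT + 1):
        if MAX_UNSCALED_WINDOW << shift >= bdp_bytes:
            return shift
    return MAX_SHIFT  # even the largest scaled window cannot fill this link


# Assumed example: a 100 ms round trip between the client and the bucket's Region.
print(max_throughput_bps(MAX_UNSCALED_WINDOW, 0.1) / 1e6)  # ~5.24 Mbit/s unscaled
print(required_shift(1e9, 0.1))  # 8: shift needed to fill a 1 Gbit/s link
```

Without scaling, the same client is limited to roughly 5 Mbit/s per connection on that path regardless of how fast its network link is.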

2. TCP Selective Acknowledgement (SACK)

Sometimes multiple packets are lost within a single TCP window, and with cumulative acknowledgments alone it can be difficult for the sender to work out which ones were lost. As a result, it may resend all of the packets from the first loss onwards, even though the receiver has already received some of them, which is inefficient. TCP selective acknowledgment (SACK) improves performance by letting the receiver tell the sender exactly which blocks of data it has received, so the sender can resend only the missing packets.
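The difference can be sketched with a small Python model. It is a simplification: real TCP SACK blocks describe byte sequence ranges and a segment carries only a few of them, whereas here segments are numbered 0, 1, 2, … and the block list is unbounded. The "without SACK" case uses the pessimistic resend-everything behaviour described above.

```python
from typing import List, Set, Tuple


def sack_state(received: Set[int]) -> Tuple[int, List[Tuple[int, int]]]:
    """Model a receiver's feedback for numbered segments 0, 1, 2, ...

    Returns (cumulative_ack, sack_blocks): cumulative_ack is the next segment
    expected in order; sack_blocks are half-open [start, end) ranges that
    arrived beyond it.
    """
    cumulative_ack = 0
    while cumulative_ack in received:
        cumulative_ack += 1
    blocks: List[Tuple[int, int]] = []
    for seg in sorted(s for s in received if s > cumulative_ack):
        if blocks and blocks[-1][1] == seg:
            blocks[-1] = (blocks[-1][0], seg + 1)  # extend the current block
        else:
            blocks.append((seg, seg + 1))  # start a new block after a gap
    return cumulative_ack, blocks


def segments_to_resend(sent: int, received: Set[int], use_sack: bool) -> List[int]:
    """Segments the sender retransmits after loss, with or without SACK."""
    cumulative_ack, blocks = sack_state(received)
    if not use_sack:
        # Only the cumulative ACK is known: resend everything from that point.
        return list(range(cumulative_ack, sent))
    sacked = {seg for start, end in blocks for seg in range(start, end)}
    return [seg for seg in range(cumulative_ack, sent) if seg not in sacked]


received = {0, 1, 2, 4, 5, 7, 8, 9}  # segments 3 and 6 were lost
print(sack_state(received))  # (3, [(4, 6), (7, 10)])
print(segments_to_resend(10, received, use_sack=True))   # [3, 6]
print(segments_to_resend(10, received, use_sack=False))  # [3, 4, 5, 6, 7, 8, 9]
```

With SACK the sender retransmits two segments instead of seven in this example.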

As with window scaling, SACK has to be negotiated during connection establishment: the client (the source) includes an option known as SACK-permitted in its SYN, and SACK is used only if the server also includes it in its SYN/ACK. More information on how SACK works and is implemented can be found in RFC-2018.

3. Scaling S3 Request Rates

On top of TCP window scaling and SACK, S3 itself is already highly optimized for very high request throughput. In July 2018, AWS announced a significant increase in S3 request rate performance. Before this announcement, AWS recommended randomizing prefixes within your bucket to help optimize performance; this is no longer required. You can now scale request rate performance by spreading requests across multiple prefixes within your bucket.

You can now achieve at least 3,500 PUT/COPY/POST/DELETE requests and 5,500 GET/HEAD requests per second. These limits apply per prefix, and there is no limit to the number of prefixes that can be used within an S3 bucket, so request throughput scales linearly with the number of prefixes. For example, with 20 prefixes you could reach 70,000 PUT/POST/DELETE and 110,000 GET requests per second within the same bucket.
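The arithmetic above can be turned into a small planning helper. The sketch below uses the per-prefix rates quoted in this section to work out how many prefixes a target load needs. It then shows one hypothetical way to spread object names evenly across those prefixes by hashing the name. The `data/shard-NN/` naming scheme is an assumption for illustration; any naming scheme that distributes requests across prefixes works.

```python
import hashlib
import math

# Per-prefix request rates quoted above (requests per second).
WRITE_RPS_PER_PREFIX = 3_500  # PUT/COPY/POST/DELETE
READ_RPS_PER_PREFIX = 5_500   # GET/HEAD


def prefixes_needed(target_write_rps: int, target_read_rps: int) -> int:
    """Minimum number of prefixes needed to carry the target request rates."""
    return max(
        math.ceil(target_write_rps / WRITE_RPS_PER_PREFIX),
        math.ceil(target_read_rps / READ_RPS_PER_PREFIX),
        1,
    )


def sharded_key(name: str, num_prefixes: int, base: str = "data") -> str:
    """Hypothetical helper: deterministically place an object under one of N prefixes."""
    digest = hashlib.sha256(name.encode("utf-8")).hexdigest()
    shard = int(digest, 16) % num_prefixes
    return f"{base}/shard-{shard:02d}/{name}"


print(prefixes_needed(70_000, 110_000))  # 20
print(sharded_key("Plan.pdf", 20))  # e.g. data/shard-NN/Plan.pdf
```

Because the mapping is deterministic, readers can recompute an object's key from its name without a lookup table.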

S3 storage uses a flat structure, meaning that there is no hierarchy of folders: you simply have a bucket, and ALL objects are stored in a flat address space within that bucket. You can create folders and store objects within them, but these folders are not hierarchical; they are simply prefixes that form part of each object's unique name. For example, suppose you have the following 3 objects within a single bucket:

Presentation/Meeting.ppt

Project/Plan.pdf

Stuart.jpg

The ‘Presentation’ folder acts as a prefix to identify the object, and the full name ‘Presentation/Meeting.ppt’ is known as the object key. Similarly, ‘Project/’ is the prefix of the object ‘Project/Plan.pdf’. ‘Stuart.jpg’ does not have a prefix, so it appears in the root of the bucket itself.
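Folders are only a view over these flat keys. When you list a bucket with a prefix and a `/` delimiter (for example via the `ListObjectsV2` API's `Prefix` and `Delimiter` parameters), S3 returns objects directly under the prefix plus "common prefixes" that look like folders. The Python function below is a local model of that grouping, applied to the three example keys; it does not call S3, and the function name is hypothetical.

```python
from typing import Iterable, List, Tuple


def list_with_delimiter(
    keys: Iterable[str], prefix: str = "", delimiter: str = "/"
) -> Tuple[List[str], List[str]]:
    """Group flat keys the way a delimiter-based S3 listing presents them.

    Returns (contents, common_prefixes): keys directly "under" prefix, and
    the distinct sub-prefixes that a console would display as folders.
    """
    contents: List[str] = []
    common = set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue  # outside the requested prefix
        rest = key[len(prefix):]
        idx = rest.find(delimiter)
        if idx == -1:
            contents.append(key)  # no further delimiter: an object at this level
        else:
            common.add(prefix + rest[: idx + len(delimiter)])  # a "folder"
    return contents, sorted(common)


keys = ["Presentation/Meeting.ppt", "Project/Plan.pdf", "Stuart.jpg"]
print(list_with_delimiter(keys))
# (['Stuart.jpg'], ['Presentation/', 'Project/'])
print(list_with_delimiter(keys, prefix="Project/"))
# (['Project/Plan.pdf'], [])
```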


4. Integration of Amazon CloudFront

Another way to improve performance is to integrate Amazon S3 with Amazon CloudFront. This works particularly well if most requests to your S3 data are GET requests. Amazon CloudFront is AWS’s content delivery network (CDN), which speeds up the distribution of your static and dynamic content through its worldwide network of edge locations.

Normally, when a user requests content from S3 (a GET request), the request is routed to the S3 service and its servers return that content. However, if you use CloudFront in front of S3, CloudFront can cache commonly requested objects at its edge locations. The user’s GET request is then routed to the edge location that offers the lowest latency, which returns the cached object. If the object is not yet cached at that edge location, CloudFront retrieves it from S3 and caches it for subsequent requests. This also helps reduce your Amazon S3 costs by reducing the number of GET requests that reach your buckets.
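The cost and latency benefit comes from cache hits never reaching the origin. The toy Python model below represents a single edge location as a least-recently-used cache with a fixed time-to-live, and counts how many GETs actually reach the origin. It is a simplification for illustration only: CloudFront's real caching and eviction behaviour is governed by your cache settings and origin headers, which this model does not reproduce, and `fake_s3_get` is a hypothetical stand-in for a real S3 request.

```python
import time
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Toy model of one edge location: an LRU cache with a fixed TTL."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], bytes],
        capacity: int = 100,
        ttl_seconds: float = 86_400.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin
        self._capacity = capacity
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: OrderedDict = OrderedDict()  # key -> (cached_at, body)
        self.origin_requests = 0  # GETs that actually reached the origin

    def get(self, key: str) -> bytes:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            self._entries.move_to_end(key)  # hit: mark as most recently used
            return entry[1]
        # Miss or expired entry: fetch from the origin (the S3 bucket here).
        body = self._fetch(key)
        self.origin_requests += 1
        self._entries[key] = (now, body)
        self._entries.move_to_end(key)
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict least recently used
        return body


def fake_s3_get(key: str) -> bytes:
    """Hypothetical stand-in for an S3 GET request."""
    return f"contents of {key}".encode()


edge = EdgeCache(fake_s3_get, capacity=50)
for i in range(1000):
    edge.get(f"images/photo-{i % 10}.jpg")  # 1,000 requests for 10 popular objects
print(edge.origin_requests)  # 10
```

In this model, 1,000 user requests for 10 popular objects result in only 10 GETs against the origin.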

This post has covered four techniques you can use to optimize performance when working with S3 objects.

For further information on some of the topics mentioned in this post, please take a look at our library content.

- COURSE: Learn how to work with Amazon CloudFront

- HANDS-ON LAB: Learn how to configure a static website with S3 and CloudFront